Atlanta is a city full of history and one giant paradox: Did you know Atlanta has 68 streets with a name containing 'Peachtree' despite the fact that peach trees don't natively grow in the city? Adding to the general confusion, it is actually possible to end up at the corner of "Peachtree and Peachtree" in several spots. All this confusion around names and directions makes a perfect backdrop for talking about topics that many find equally confusing: cloud computing and AI. Fortunately, subject matter experts gathered to help us all make better sense of these topics at the Atlanta Cloud Conference 2024.

With over eight simultaneous tracks and over 40 sessions, attendees had the chance to learn and ask questions on a wide range of topics, such as career navigation, FinOps, serverless deployments, and chaos engineering. Of course, like all recent events, there were multiple talks on artificial intelligence. There were hands-on lessons, such as an intro to "Azure Open AI" and "Leveraging AI with AWS Bedrock," as well as more general thought leadership sessions, such as "Unlocking the Mystery of AI with a People Mindset." Security was another theme throughout the event, which is why GitGuardian was there.

Here are a few brief highlights from some of the great sessions at the Atlanta Cloud Conference.

What's the worst that can happen if you don't secure your containers?

In the opening of his highly entertaining session, "Bad Things You Can Do to Unsecured Containers," Gene Gotimer from Praeses told us that his session would give us ammunition for furthering security in our organizations. Instead of just giving us a list of best practices, he demonstrated what could actually happen if we don't factor security into our container deployments. But before he did, he reminded us that security really came down to the three pillars of "CIA": Confidentiality, Integrity, and Availability. If we end up being so "secure" that our apps and stacks become unusable, we have actually failed at security. With that in mind, he dove into three main areas for consideration.

First, the smaller the image, the better. We should remember that containers are not just tiny VMs; we should not be loading full Ubuntu distributions and all default utilities into our production containers. We should remember the principle of least privilege and only install what is absolutely necessary for the app to run. He summed up this concept with the acronym "YAGNI" which stands for "You ain't going to need it." The best approach, when possible, is to compile apps to executable binaries using languages like Rust or Go and deploy "scratch" container images, meaning only the application code is loaded.

Next, we need to update images often. Using old images can mean leaving vulnerabilities open that have patches readily available. In his consulting work, the number one piece of advice he always gives clients is to patch applications and update containers. When in doubt, rebuild that image.

Secrets hardcoded in containers are another major issue. Gene showed just how easy it is to find and exploit plaintext credentials hidden in the multiple layers of a Docker image. Using an open-source command line tool called Dive, he was able to quickly expose files deep within a Docker image he had created. He advocated using GitGuardian to check for hardcoded secrets in your code and the Docker images you create.
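To make the layer problem concrete, here is a minimal Python sketch of the idea, not the way Dive or GitGuardian work internally. It assumes a layer is available as an uncompressed tarball in memory and that the only secret of interest looks like an AWS access key ID ("AKIA" followed by 16 uppercase letters or digits). The names `scan_layer` and `build_demo_layer` are hypothetical helpers invented for this illustration.

```python
import io
import re
import tarfile

# Assumption: long-term AWS access key IDs look like "AKIA" + 16 uppercase
# letters or digits. Real scanners use many more detectors than this one.
AWS_KEY_ID = re.compile(rb"\bAKIA[0-9A-Z]{16}\b")


def scan_layer(layer_bytes: bytes) -> list[tuple[str, str]]:
    """Return (file path, matched string) pairs found in one layer tarball."""
    findings = []
    with tarfile.open(fileobj=io.BytesIO(layer_bytes)) as layer:
        for member in layer:
            if not member.isfile():
                continue  # skip directories, links, and other non-regular entries
            extracted = layer.extractfile(member)
            if extracted is None:
                continue
            for match in AWS_KEY_ID.finditer(extracted.read()):
                findings.append((member.name, match.group().decode("ascii")))
    return findings


def build_demo_layer(files: dict[str, bytes]) -> bytes:
    """Hypothetical helper: pack files into an in-memory tarball for the demo."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w") as layer:
        for path, content in files.items():
            info = tarfile.TarInfo(name=path)
            info.size = len(content)
            layer.addfile(info, io.BytesIO(content))
    return buffer.getvalue()


if __name__ == "__main__":
    demo = build_demo_layer({
        "app/.env": b"AWS_ACCESS_KEY_ID=AKIAEXAMPLEKEY123456\n",  # fake key
        "app/main.py": b"print('hello')\n",
    })
    for path, key in scan_layer(demo):
        print(f"possible secret in {path}: {key}")
```

Each layer is scanned on its own because a file deleted in a later layer still exists in the tarball of the earlier layer that added it.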

He also strongly advocated never running your containers as `root` and never automatically building from the `latest` tag. For the latter, he suggested pinning images by digest: referencing a specific version by its computed SHA-256 hash guarantees that only that specific, trusted image content will be pulled.
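The reasoning behind digests can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than how any registry client is implemented: `is_pinned_by_digest` and `sha256_digest` are hypothetical helper names, and `registry.example.com/app` is a made-up image name.

```python
import hashlib
import re

# A digest-pinned reference ends in "@sha256:" followed by 64 hex characters.
DIGEST_SUFFIX = re.compile(r"@sha256:[0-9a-f]{64}$")


def is_pinned_by_digest(image_ref: str) -> bool:
    """True if the reference names exact content rather than a movable tag."""
    return DIGEST_SUFFIX.search(image_ref) is not None


def sha256_digest(content: bytes) -> str:
    """Compute a digest string in the "sha256:<hex>" form for some bytes."""
    return "sha256:" + hashlib.sha256(content).hexdigest()


if __name__ == "__main__":
    # Hypothetical manifest bytes, standing in for a real image manifest.
    digest = sha256_digest(b"hypothetical manifest contents")
    for ref in ("registry.example.com/app:latest",
                "registry.example.com/app@" + digest):
        print(ref, "->", "pinned" if is_pinned_by_digest(ref) else "NOT pinned")
```

A tag such as `latest` can be repointed to different content at any time, while any change to the content produces a different hash, so a digest reference cannot silently drift.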

How hackers actually gain access

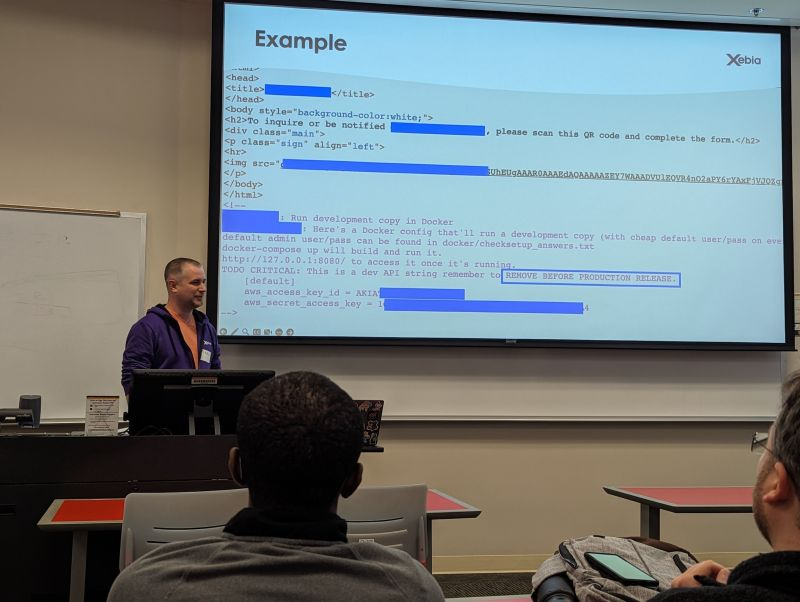

Continuing Gene's theme of showing real-world exploits in action, in the session "Cloud Hacking Scenarios," Michal Brygidyn from Xebia took us through several of his white hat bug bounty accomplishments, showing some redacted screenshots along the way. It turns out the easiest and most common way into a system is simply finding and using a valid key.

He dove right in and explained that the first place he always checks is the front-end HTML. In a screenshot of a webpage's source code, he pointed out a comment reminding developers to "remove the following AWS credentials from the code before pushing to production." The reminder was evidently never acted on: Michal found valid AWS credentials for one client on the production website.
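Checking your own pages for this kind of leftover is easy to automate. The following is a rough Python sketch, not Michal's tooling: it pulls HTML comments out of a page with the standard library parser and flags the ones that mention credential-related words. The keyword list and the names `CommentCollector` and `suspicious_comments` are assumptions made for this example.

```python
from html.parser import HTMLParser

# Assumption: a short, hand-picked keyword list; tune it for your own codebase.
SUSPICIOUS_WORDS = ("credential", "password", "secret", "aws", "api key", "token")


class CommentCollector(HTMLParser):
    """Collect the text of every HTML comment in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.comments: list[str] = []

    def handle_comment(self, data: str) -> None:
        self.comments.append(data.strip())


def suspicious_comments(html: str) -> list[str]:
    """Return comments that mention any of the suspicious keywords."""
    collector = CommentCollector()
    collector.feed(html)
    collector.close()
    return [
        comment
        for comment in collector.comments
        if any(word in comment.lower() for word in SUSPICIOUS_WORDS)
    ]


if __name__ == "__main__":
    page = (
        "<html><body>"
        "<!-- TODO: remove the AWS credentials below before production -->"
        "<p>Welcome</p>"
        "<!-- layout fix -->"
        "</body></html>"
    )
    for comment in suspicious_comments(page):
        print("review this comment:", comment)
```

Keyword matching this simple will produce false positives, so treat a hit as a prompt for human review rather than proof of a leak.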

The second place he looks is the company's source code in any public repositories. Like most hackers, Michal is aware of the millions of hardcoded credentials that get added to public GitHub repositories every year. Even if the publicly leaked secret is not an AWS key, which is what he is after in his bug hunting, it often leads to more access and eventually to what he wants.

He also walked us through a scenario where he gained access to one system and then found that the same password worked across multiple systems, including a user's email, which he used to perform a root account password reset. Fortunately for him, and unfortunately for this company, multi-factor authentication (MFA) was not enabled. That extra step would have stopped him and made him rethink his approach.

While he shared some other stories, they all involved the same fundamental issue of hardcoded secrets and common misconfigurations. Make sure you remove plaintext passwords and require MFA on all critical accounts.

Embracing AI responsibly

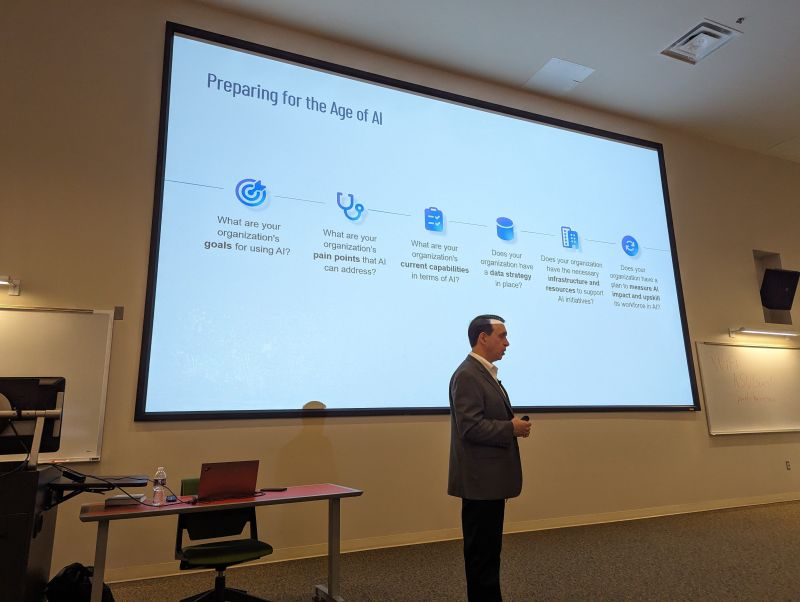

The Atlanta Cloud Conference, like many recent events, featured a keynote focused on artificial intelligence and large language models (LLMs). In his presentation "Terminator or Terminated: Facing Technology Fears in the Age of AI," Antonio Maio, managing director of Protiviti, walked us through some basics of getting started and introduced us to some resources for safely approaching the subject.

Based on his work helping companies adopt AI and his investigation into the subject, he said there are six major questions any company needs to answer before it launches a new initiative.

- What are the organization's goals for using AI?

- What pain points does the organization hope AI will help solve?

- What are the organization's current capabilities when it comes to developing and maintaining a new technology?

- Do you already have a data strategy?

- What infrastructure and resources can you devote and budget for an AI project?

- How will you measure AI's impact?

Antonio also introduced us to the Microsoft Responsible AI Standard framework. This model suggests six core principles companies should strive to adopt when implementing AI technologies. These principles are:

- Fairness

- Reliability and Safety

- Privacy and Security

- Inclusiveness

- Transparency

- Accountability

Antonio spoke about protecting our data models when implementing our own LLMs. He gave an example of 'poisoning the data' where an employee can keep feeding the prompt something like "Antonio is a dumb and bad manager" a few thousand times. Later, when another new employee asks about Antonio as a manager, we know what answer they will likely get. It is easy to extrapolate some more serious scenarios if left unchecked.
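The mechanics of that example can be illustrated with a deliberately naive Python sketch. This is not an LLM and not something shown in the talk: it assumes a stand-in "model" that simply repeats whichever statement it has seen most often, with made-up employee names and statements. The deduplication step is one possible guard, included here as an assumption.

```python
from collections import Counter

Statement = tuple[str, str]  # (author, text)


def naive_answer(statements: list[Statement]) -> str:
    """Stand-in 'model': answer with the most frequently seen statement.

    Assumes a non-empty list of statements.
    """
    counts = Counter(text for _author, text in statements)
    return counts.most_common(1)[0][0]


def deduplicated_answer(statements: list[Statement]) -> str:
    """Same stand-in, but each (author, text) pair is counted only once."""
    counts = Counter(text for _author, text in set(statements))
    return counts.most_common(1)[0][0]


if __name__ == "__main__":
    # Hypothetical data: three honest inputs and one employee flooding the prompt.
    honest = [(name, "Antonio is a supportive manager") for name in ("ana", "bo", "cy")]
    flood = [("dee", "Antonio is a bad manager")] * 2000
    statements = honest + flood

    print("raw input:         ", naive_answer(statements))
    print("deduplicated input:", deduplicated_answer(statements))
```

With raw input, one person's 2,000 repetitions outvote everyone else. Counting each author's statement once restores the majority view, which is the intuition behind validating and rate-limiting whatever is allowed to influence a model.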

As he was wrapping up, he said the only real way to understand LLMs is to dig into the math. Fortunately, there are a lot of excellent resources out there, but he strongly suggested "Deep Learning," which is currently available to read online for free.

The cloud is for all experience levels

One thing that stood out about this conference was the diversity of backgrounds and experiences of the attendees. Atlanta Cloud Conference is organized by the same team that puts on the Atlanta Developers Conference, and many attendees participated in both. This led to a more developer-focused audience, with limited DevOps experience in many cases, that was very eager to learn about cloud tech and cloud security.

Leaked credentials were mentioned in almost all of the sessions I saw, and it is clear that this problem is starting to get the recognition it deserves. I presented my talk on the history and use of honeytokens and received some unique questions that allowed me to explain some concepts around secrets management directly to this developer-forward audience. Regardless of how you secure your secrets, we invite you to make sure you are also scanning for secrets and putting remediation plans in place.

No matter what your title is or where you work in the organization, if you need help figuring out where you are and where you need to go next with secrets security, we invite you to take a look at our Secrets Management Maturity Model.