What Exactly is Docker?

Docker has become a synonym for container technology, much like "Hoover" has become synonymous with vacuum cleaners in the UK. Docker turned containers into a ubiquitous tool for modern application deployment, yet most of us talk about Docker without really understanding its fundamentals. This post aims to demystify Docker, explain some of its inner workings, and show how you can optimize Docker images so they are smaller and faster to pull.

Docker Images: Spicy Tar Files with Metadata

At its core, a Docker image is nothing more than a bundle of glorified tar files, or as I like to call them, "spicy tar files." These are regular archives plus a bit of extra metadata that Docker uses to manage and run applications; a container is simply a process started on top of those unpacked files. Here's the basic anatomy of a Docker image:

- Layers: Each layer is essentially a tar file containing the files added, modified, or deleted (recorded as special "whiteout" entries) in that particular layer.

- Config: A JSON file that describes how the image should be run (environment variables, entrypoint, default command, and so on) and lists the layers that make up its root filesystem.

- Manifest: Ties everything together by referencing the config and each layer by its digest and size, in the order the layers should be applied.
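To make the three pieces concrete, here is a minimal sketch of how they reference each other, assuming the OCI image format's field names (`rootfs.diff_ids`, `config.digest`, `layers[].digest`). The helper names are hypothetical, layers are left uncompressed for simplicity (real images are usually gzipped), and only a small subset of the config fields is shown.

```python
import hashlib
import io
import json
import tarfile


def sha256_digest(data: bytes) -> str:
    """Return an OCI-style digest string such as 'sha256:ab12...'."""
    return "sha256:" + hashlib.sha256(data).hexdigest()


def make_layer(files: dict[str, bytes]) -> bytes:
    """Pack a mapping of path -> contents into an uncompressed tar archive."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for path, data in sorted(files.items()):
            info = tarfile.TarInfo(name=path)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()


def make_image(layers: list[bytes], cmd: list[str]) -> tuple[bytes, dict]:
    """Build a minimal config blob and a manifest that references it and the layers."""
    config = {
        "architecture": "amd64",
        "os": "linux",
        "config": {"Env": ["PATH=/usr/bin:/bin"], "Cmd": cmd},
        # diff_ids are digests of the *uncompressed* layer tarballs, bottom layer first.
        "rootfs": {"type": "layers", "diff_ids": [sha256_digest(layer) for layer in layers]},
    }
    config_bytes = json.dumps(config).encode()
    manifest = {
        "schemaVersion": 2,
        "mediaType": "application/vnd.oci.image.manifest.v1+json",
        "config": {
            "mediaType": "application/vnd.oci.image.config.v1+json",
            "digest": sha256_digest(config_bytes),
            "size": len(config_bytes),
        },
        "layers": [
            {
                "mediaType": "application/vnd.oci.image.layer.v1.tar",
                "digest": sha256_digest(layer),
                "size": len(layer),
            }
            for layer in layers
        ],
    }
    return config_bytes, manifest


layer = make_layer({"hello_world.txt": b"hello\n"})
config_bytes, manifest = make_image([layer], ["/bin/sh"])
print(json.dumps(manifest, indent=2))
```

Everything is content-addressed: the manifest names the config and layers by hash, so changing a single byte anywhere produces a different digest.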

Let me illustrate this with an example. Consider a basic Dockerfile:

```dockerfile
FROM alpine:latest

COPY hello_world.txt /hello_world.txt
```

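Building this Dockerfile produces an image made of the layer(s) inherited from alpine:latest plus one new layer created by the COPY instruction. That new layer is a tarball of only what the instruction changed (here, just /hello_world.txt), not a full snapshot of the filesystem at that point.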
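Here is a rough sketch of how the COPY layer could be derived by diffing two filesystem snapshots, assuming files are modeled as a path-to-bytes mapping. The `diff_layer` helper and the tiny stand-in for the alpine filesystem are hypothetical, and deletions (whiteouts) are ignored for brevity. It also shows that the layer has two digests: one of the uncompressed tar (the config's diff_id) and one of the compressed blob (what the manifest and registry use).

```python
import gzip
import hashlib
import io
import tarfile


def diff_layer(before: dict[str, bytes], after: dict[str, bytes]) -> bytes:
    """Tar up only the paths that were added or changed between two snapshots."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for path in sorted(after):
            if before.get(path) == after[path]:
                continue  # unchanged files already live in a lower layer
            info = tarfile.TarInfo(name=path)
            info.size = len(after[path])
            tar.addfile(info, io.BytesIO(after[path]))
    return buf.getvalue()


# Hypothetical stand-in for the alpine base filesystem (the real one has many more files).
base = {"etc/os-release": b"NAME=Alpine Linux\n", "bin/busybox": b"placeholder binary"}
after_copy = {**base, "hello_world.txt": b"Hello, world!\n"}

layer = diff_layer(base, after_copy)
diff_id = "sha256:" + hashlib.sha256(layer).hexdigest()       # hash of the uncompressed tar
blob = gzip.compress(layer, mtime=0)                           # what gets pushed to a registry
blob_digest = "sha256:" + hashlib.sha256(blob).hexdigest()     # hash of the compressed blob

with tarfile.open(fileobj=io.BytesIO(layer)) as tar:
    print(tar.getnames())  # ['hello_world.txt']
```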

Under the Hood: How Docker Pulls and Runs Containers

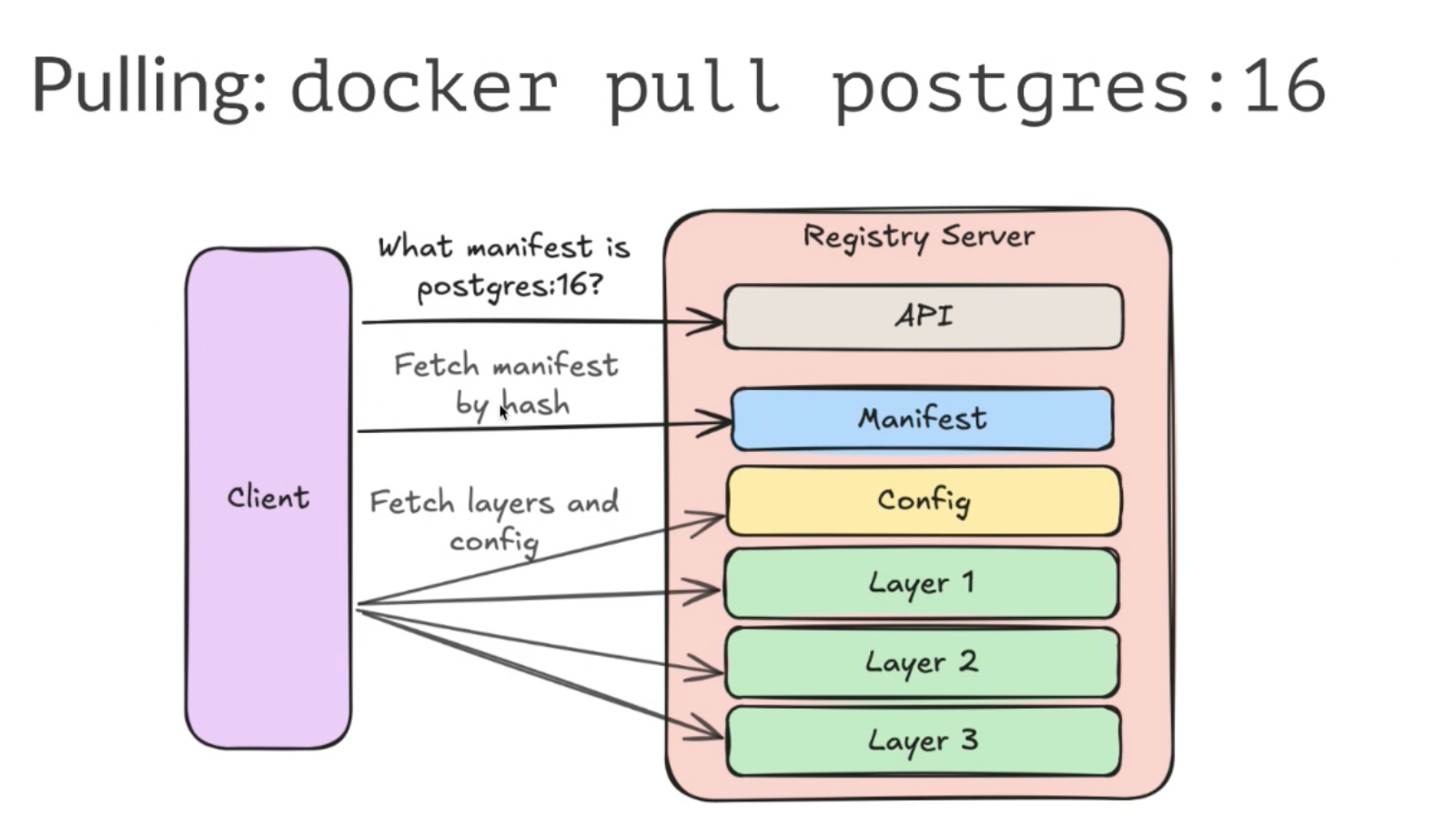

When you run docker pull postgres:16, Docker contacts a registry server (e.g., Docker Hub) to fetch the manifest, then downloads each layer it references. Here's a succinct overview:

- Pulling: Docker fetches the manifest and all associated layers.

- Layers: Each layer is identified by a SHA-256 hash and downloaded if not already present.

- Union File Systems: Layers are stacked using a union file system (OverlayFS, via Docker's overlay2 storage driver, on most modern Linux hosts), making it appear as though all files reside in a single directory tree.

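- Namespaces and cgroups (control groups): These Linux kernel features isolate the containerized process. Namespaces limit what it can see (its own process tree, mounts, and network), while cgroups limit how much CPU, memory, and I/O it can use. Together they give VM-like isolation without the overhead of a virtual machine.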
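The union view can be modeled as applying layers bottom to top, where later files shadow earlier ones and OCI "whiteout" entries delete them. This is a simplified, flattened sketch under the assumption that each layer is a path-to-bytes mapping; it follows the OCI layer conventions (`.wh.<name>` deletes a path, `.wh..wh..opq` hides a directory's lower contents) but is not how OverlayFS is implemented in the kernel.

```python
import posixpath

WHITEOUT_PREFIX = ".wh."
OPAQUE_MARKER = ".wh..wh..opq"


def apply_layers(layers: list[dict[str, bytes]]) -> dict[str, bytes]:
    """Merge layers bottom-to-top into one flat view, honoring OCI whiteout entries."""
    merged: dict[str, bytes] = {}
    for layer in layers:
        # Pass 1: whiteouts only remove content contributed by *lower* layers.
        for path in layer:
            parent, name = posixpath.split(path)
            prefix = parent + "/" if parent else ""
            if name == OPAQUE_MARKER:
                doomed = [p for p in merged if p.startswith(prefix)]
            elif name.startswith(WHITEOUT_PREFIX):
                target = prefix + name[len(WHITEOUT_PREFIX):]
                doomed = [p for p in merged if p == target or p.startswith(target + "/")]
            else:
                continue
            for p in doomed:
                del merged[p]
        # Pass 2: regular files in this layer shadow anything below them.
        for path, data in layer.items():
            if not posixpath.basename(path).startswith(WHITEOUT_PREFIX):
                merged[path] = data
    return merged


layers = [
    {"etc/motd": b"v1", "tmp/build.log": b"lots of logs"},
    {"etc/motd": b"v2", "tmp/.wh.build.log": b""},
]
print(apply_layers(layers))  # {'etc/motd': b'v2'}
```

Note that the bytes of the old etc/motd and tmp/build.log still sit in the first layer's tarball; the merge only hides them.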

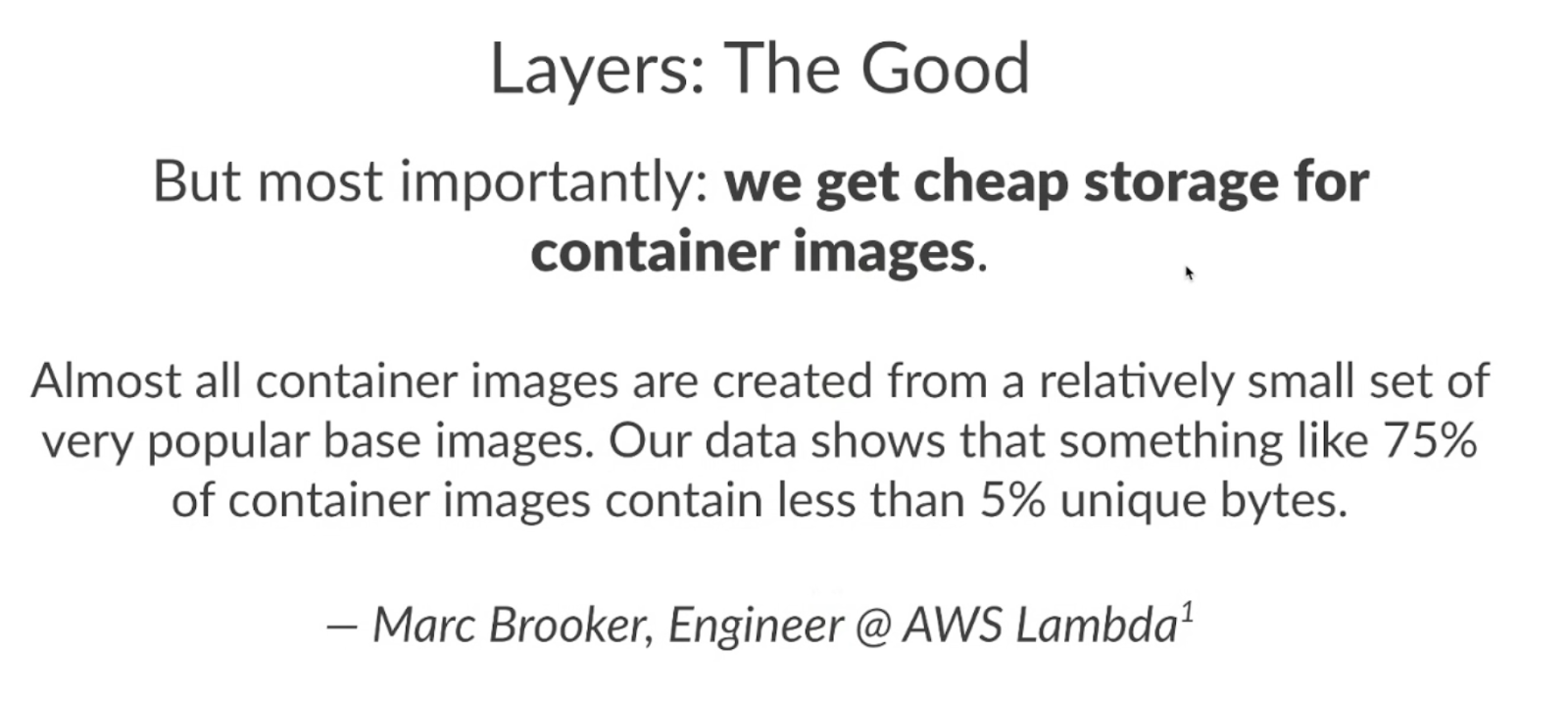

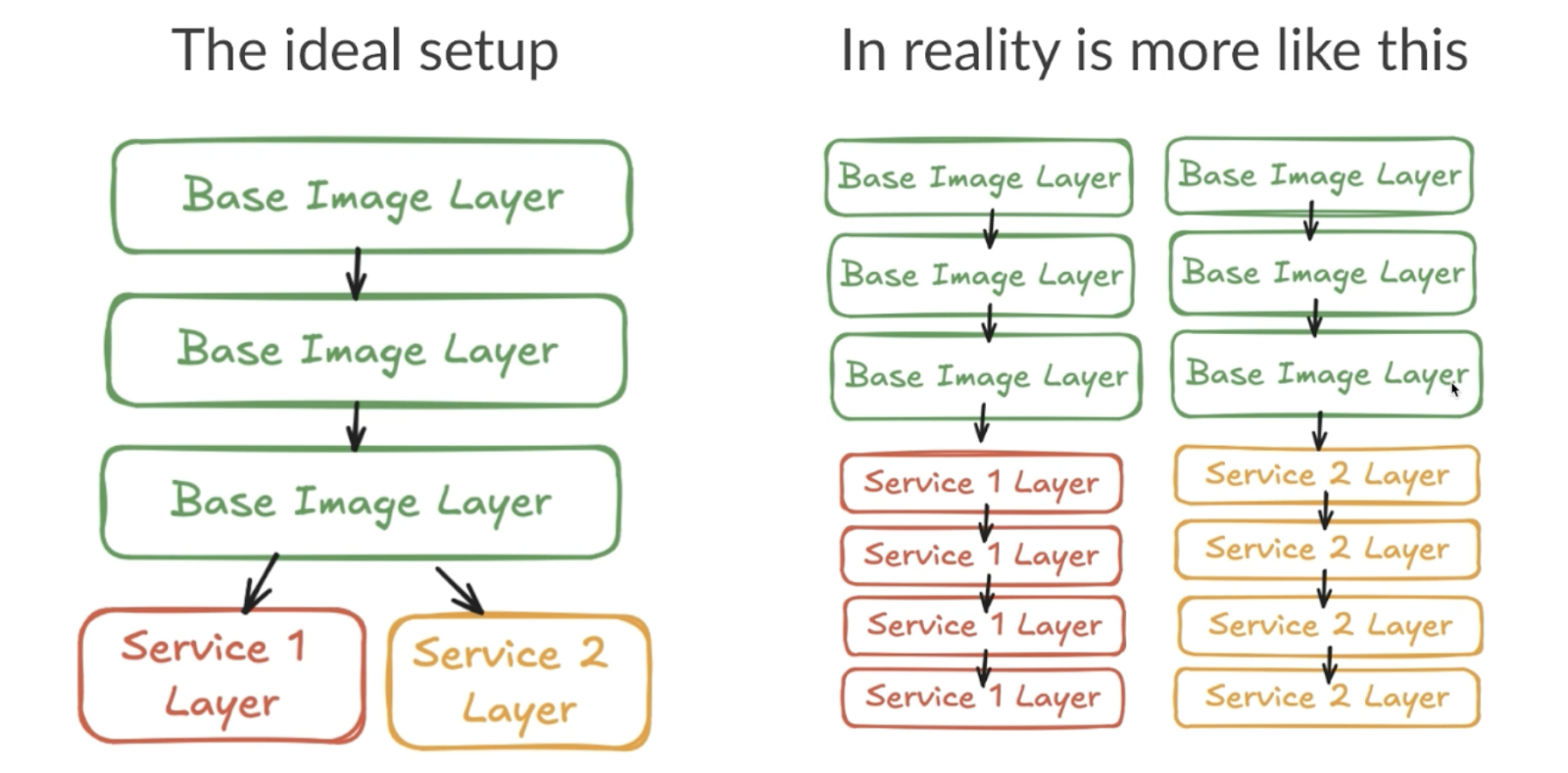

The Good: Layers Promote Efficiency

Layers bring several advantages:

- Speed: Docker caches layers to avoid redundant processing during builds.

- Immutability: Each layer is addressed by its hash, so corruption or tampering is detected when the digest is verified, offering an inherent level of security.

- Storage: Only unique data is stored and reused across different images and containers, a significant cost-saving for large-scale operations.

An AWS Lambda example illustrates this well: 75% of the container images it analyzed contain less than 5% unique bytes, with the rest shared with other images.
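The speed, integrity, and storage benefits all fall out of content addressing. Below is a toy sketch of a local blob store keyed by SHA-256 digest; the `BlobStore` class, the `pull` function, and the in-memory "registry" are hypothetical stand-ins, not Docker's actual storage code.

```python
import hashlib
from collections.abc import Callable


def digest_of(data: bytes) -> str:
    return "sha256:" + hashlib.sha256(data).hexdigest()


class BlobStore:
    """Toy content-addressed store: every blob is keyed by its SHA-256 digest."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def has(self, digest: str) -> bool:
        return digest in self._blobs

    def put(self, digest: str, data: bytes) -> None:
        # Integrity check: reject bytes that don't hash to the name they claim.
        if digest_of(data) != digest:
            raise ValueError(f"digest mismatch for {digest}")
        self._blobs[digest] = data

    def stored_bytes(self) -> int:
        return sum(len(b) for b in self._blobs.values())


def pull(store: BlobStore, layers: list[str], fetch: Callable[[str], bytes]) -> int:
    """Download only the layers missing from the store; return how many were fetched."""
    fetched = 0
    for digest in layers:
        if store.has(digest):
            continue  # shared layer already on disk: no download, no extra storage
        store.put(digest, fetch(digest))
        fetched += 1
    return fetched


# Hypothetical registry holding one shared base layer and two app layers.
base, app_a, app_b = b"base OS layer" * 1000, b"app A", b"app B"
registry = {digest_of(blob): blob for blob in (base, app_a, app_b)}

store = BlobStore()
print(pull(store, [digest_of(base), digest_of(app_a)], registry.__getitem__))  # 2
print(pull(store, [digest_of(base), digest_of(app_b)], registry.__getitem__))  # 1
print(store.stored_bytes())  # 13010
```

The second image reuses the 13,000-byte base layer, so only its 5-byte app layer is downloaded and stored.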

The Bad and the Ugly: Room for Improvement

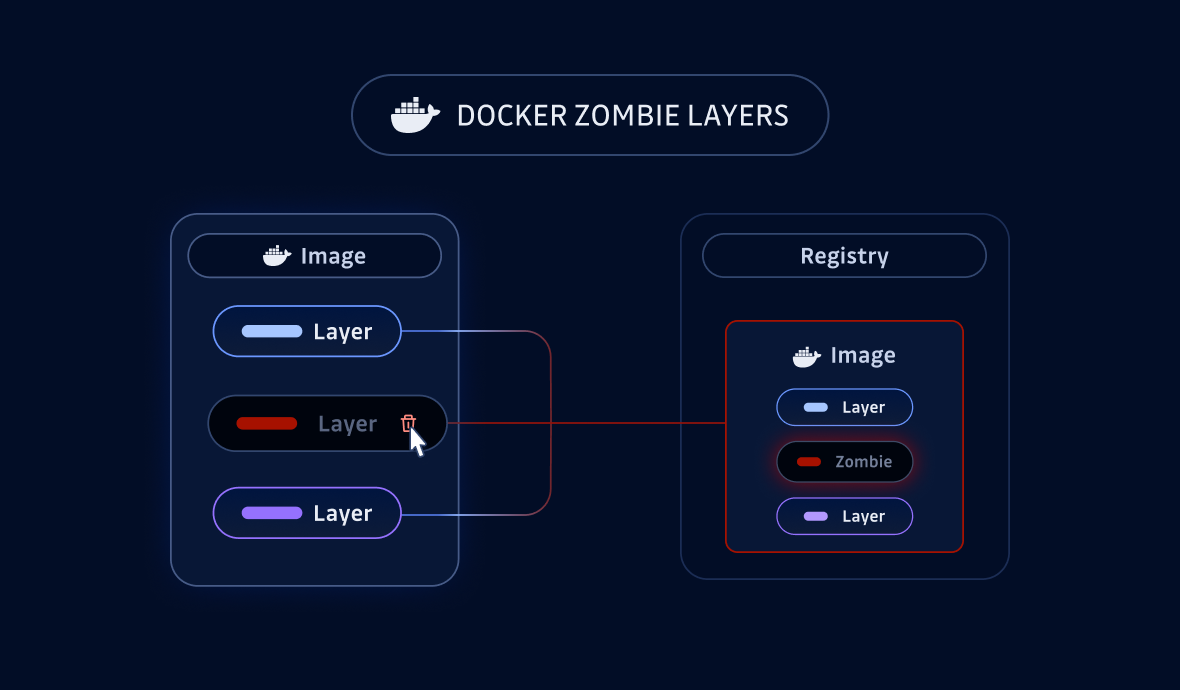

However, Docker's approach isn't without drawbacks, especially when it comes to:

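- Sequential Extraction: Docker downloads only a few layers at once (three by default) and extracts them one at a time in stack order. Each gzip-compressed layer is a single stream that must be decompressed serially, so one large layer can dominate the whole pull and significantly slow deployment.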

- Redundant Data: Files deleted or overwritten by a later layer still exist in the earlier layer's tarball, so they are still downloaded and stored, wasting space.

- Compression Inefficiency: Docker compresses layers with gzip by default, which decompresses more slowly and usually compresses less effectively than newer algorithms like Zstandard.
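To get a feel for the redundant-data problem, here is a small sketch that sums the bytes shipped in a layer but hidden by a later one. It assumes each layer is given as a listing of path to size (similar to what image-inspection tools show); the `wasted_bytes` helper and the sample listing are hypothetical, and whole-directory whiteouts are not handled.

```python
import posixpath


def wasted_bytes(layers: list[dict[str, int]]) -> int:
    """Sum the sizes of files that some later layer overwrites or whites out."""
    wasted = 0
    for i, layer in enumerate(layers):
        later_layers = layers[i + 1:]
        for path, size in layer.items():
            parent, name = posixpath.split(path)
            if name.startswith(".wh."):
                continue  # whiteout markers themselves carry no data
            whiteout = posixpath.join(parent, ".wh." + name)
            if any(path in later or whiteout in later for later in later_layers):
                wasted += size  # still shipped in this layer's tarball, never visible
    return wasted


# Hypothetical layer listings (path -> size in bytes), bottom layer first.
layers = [
    {"usr/lib/libbig.so": 50_000_000, "etc/app.conf": 2_000},
    {"etc/app.conf": 2_100, "tmp/cache.bin": 10_000_000},  # edits config, writes a cache
    {"tmp/.wh.cache.bin": 0},                              # e.g. a later "RUN rm" of the cache
]
print(wasted_bytes(layers))  # 10002000
```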

Optimizing Docker: Repacking for Speed

Given these limitations, how can we optimize Docker images for faster pulls and smaller sizes? Enter Docker Repack.

Key Strategies:

- Reduce Redundant Data: Strip out files that later layers delete or overwrite, and store duplicate content only once.

- Parallel Downloads: Split large layers into smaller chunks to parallelize downloads.

- Better Compression: Use Zstandard for smaller images that decompress faster.
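One simple way to split a big layer into similarly sized chunks is the greedy "largest file first, into the currently smallest layer" heuristic. This is a sketch of that general technique, not necessarily what Docker Repack does; the `split_into_layers` function and file sizes are hypothetical. Keep in mind that dockerd only downloads a limited number of layers concurrently (its max-concurrent-downloads setting, 3 by default), which bounds how much splitting helps.

```python
import heapq


def split_into_layers(files: dict[str, int], k: int) -> list[list[str]]:
    """Assign files, largest first, to whichever of k layers is currently smallest."""
    heap = [(0, i) for i in range(k)]  # (total bytes so far, layer index)
    layers: list[list[str]] = [[] for _ in range(k)]
    for path, size in sorted(files.items(), key=lambda kv: kv[1], reverse=True):
        total, i = heapq.heappop(heap)  # smallest layer so far
        layers[i].append(path)
        heapq.heappush(heap, (total + size, i))
    return layers


# Hypothetical file sizes in MB.
files = {"model.bin": 600, "weights.bin": 500, "lib.so": 300, "data.db": 200, "app.py": 10}
for n, layer in enumerate(split_into_layers(files, 2)):
    print(n, layer, sum(files[p] for p in layer))
# 0 ['model.bin', 'data.db', 'app.py'] 810
# 1 ['weights.bin', 'lib.so'] 800
```

Balanced layers matter because a pull is only as fast as its largest layer: two ~800 MB layers can be fetched and decompressed side by side, whereas one 1,610 MB layer cannot.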

Results: From benchmarking various images, we've observed up to 5x faster pull times. For example, an NVIDIA image that took 30 seconds to pull could be optimized to pull in just 6 seconds.

Real-World Application: Docker Repack

I've developed a tool, Docker Repack, that implements these optimizations. The process involves:

- Repacking large layers into smaller ones.

- Removing redundant files.

- Applying ZStandard compression.

In more detail, the tool works in four stages:

- Layer Analysis: It analyzes the original image's layer structure and content distribution, and removes redundant content (i.e., files in earlier layers that later layers delete or overwrite).

- Layer Reordering: It regroups files into new layers by content to maximize compression opportunities. Similar file types are placed together, which greatly improves compression ratios, and tiny files and directories are placed into the first layer so the container runtime can unpack them quickly.

- Content Deduplication: It identifies duplicate content across all layers and stores it only once.

- Layer Compression: It compresses the new layers with Zstandard (zstd), producing a smaller image than Docker's default gzip compression.
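The deduplication and reordering stages can be sketched in a few lines. This is an illustration under stated assumptions, not Docker Repack's actual code: the `plan_layers` function and the 4 KiB `TINY` cutoff are hypothetical, duplicates are simply mapped to the kept copy (a real tool might emit them as hard links), and "similar file types" is approximated by file extension.

```python
import hashlib
import posixpath
from collections import defaultdict

TINY = 4096  # hypothetical cutoff for "tiny" files, in bytes


def plan_layers(files: dict[str, bytes]) -> tuple[list[list[str]], dict[str, str]]:
    """Return (layers, links): layers hold unique paths; links map duplicates to the kept copy."""
    seen: dict[str, str] = {}   # content digest -> first path with that content
    links: dict[str, str] = {}  # duplicate path -> canonical path
    tiny: list[str] = []
    by_ext: dict[str, list[str]] = defaultdict(list)
    for path in sorted(files):
        data = files[path]
        digest = hashlib.sha256(data).hexdigest()
        if digest in seen:
            links[path] = seen[digest]  # identical bytes: store once, reference elsewhere
            continue
        seen[digest] = path
        if len(data) < TINY:
            tiny.append(path)
        else:
            by_ext[posixpath.splitext(path)[1]].append(path)
    # Tiny files first so they unpack quickly, then one layer per file type.
    layers = ([tiny] if tiny else []) + [by_ext[ext] for ext in sorted(by_ext)]
    return layers, links


files = {
    "app/main.py": b"print('hi')\n",
    "lib/a.so": b"\x00" * 10_000,
    "lib/b.so": b"\x01" * 10_000,
    "lib/copy_of_a.so": b"\x00" * 10_000,
    "data/model.bin": b"\x02" * 20_000,
}
layers, links = plan_layers(files)
print(layers)  # [['app/main.py'], ['data/model.bin'], ['lib/a.so', 'lib/b.so']]
print(links)   # {'lib/copy_of_a.so': 'lib/a.so'}
```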

Conclusion: Repacking Docker for Efficiency

The insights gained here suggest that layer repacking provides substantial benefits, especially in production environments where deployment speed is critical. These optimizations don't require changing your entire CI/CD pipeline; they only add a simple step after the build.

This exploration not only demystifies Docker but also provides practical steps to make your container management more efficient.

Stay tuned for more updates as we continuously refine and enhance these tools for better performance and utility in the Docker ecosystem.
