Before Atlanta was named Atlanta, it was called "Terminus," a name that came from its place at the end of the Western & Atlantic Railroad line. Later, the town was called Thrasherville, then Marthasville, and was eventually renamed Atlanta, a feminine form of the word "Atlantic." That railroad-endpoint history feels oddly fitting for a conference that marked a new departure point for cloud-native security in the age of AI: KubeCon + CloudNativeCon North America 2025.

This year's KubeCon marked the 10th anniversary of the Cloud Native Computing Foundation, the organization behind this Kubernetes-focused event. There is no way to capture everything that happened and every lesson learned in Atlanta this year, but here are a few key highlights and takeaways from KubeCon 2025.

Thank You To The CNCF

Before we continue with the overview, let's give thanks and say "Happy Birthday" to the Cloud Native Computing Foundation (CNCF). This Linux Foundation project plays a central role in stewarding open source projects, and for many of them it maintains the connective tissue of modern digital infrastructure. For individuals and companies alike, the CNCF is more than a neutral home for technologies like Kubernetes, Envoy, and SPIFFE. It is a trust anchor, providing neutral governance, community-driven development, and rigorous graduation criteria to ensure that critical tools evolve transparently, securely, and with long-term reliability in mind.

This stable future matters deeply to everyone building on these tools, from the solo developer releasing projects to Fortune 50 enterprises running critical infrastructure. It transforms fragmented, fragile toolchains into dependable, interoperable ecosystems, making it possible to innovate on top of shared infrastructure without fear that core dependencies will disappear or stagnate.

In a world where operational risk increasingly stems from opaque supply chains and software monocultures, the CNCF provides an essential counterbalance: open, collaborative, and resilient by design.

Nested Trust Domains in Zero Trust Environments

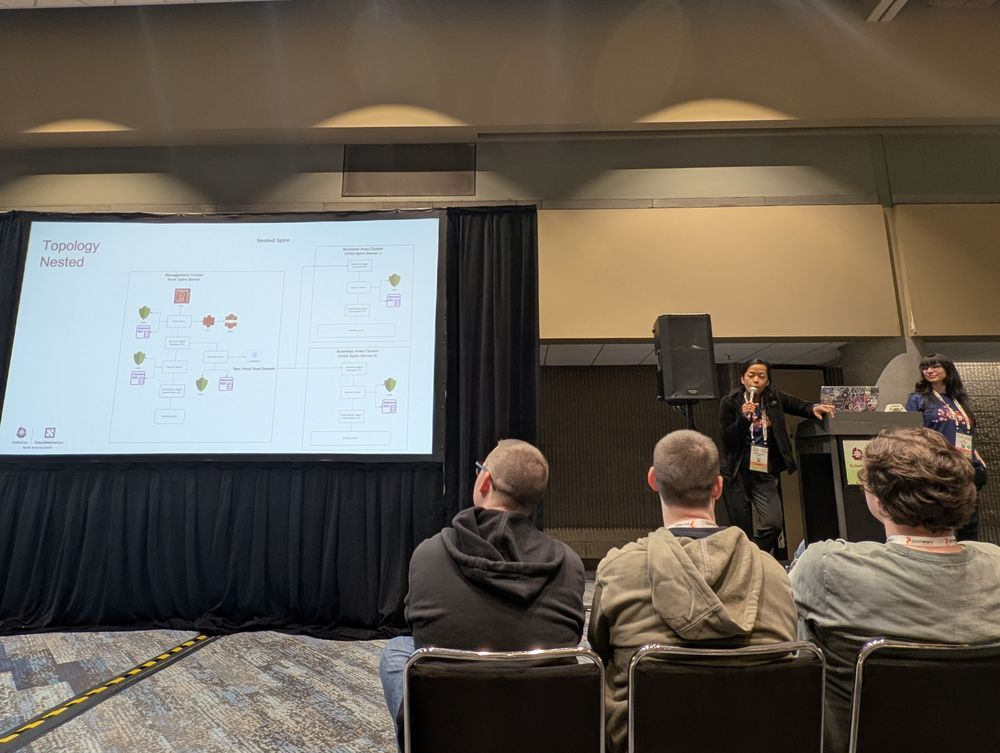

In their joint session titled “From Bespoke to Bulletproof: SPIFFE/SPIRE with ESO for Enterprise Zero Trust,” May Large, Lead Infrastructure Engineer, and Ivy Alkhaz, Lead Infrastructure Engineer, both at State Farm, covered how the enterprise is shifting from ad hoc secrets and identity boundaries toward structured, federated trust domains.

The implementation they described layered a root SPIRE server in a management cluster, with an intermediate CA for each child business-area cluster. This "Mom/daughter" setup, as they called it, used the External Secrets Operator (ESO) to manage cross-account secrets. They showed how they hardened their Helm charts and addressed scale issues, including namespace cache limits, named port constraints, and cross-account IAM policies. They found success with a gradual cutover, letting routine certificate renewals naturally shift workloads across active/passive regions.

They said teams can integrate through language libraries, sidecars, or, when apps cannot be touched, a lightweight SPIFFE helper daemon. Today the effort is focused on Kubernetes, but there is no real reason to stop there. Next steps include stronger exemplars and roadshows, plus pushing into service mesh and federation so more trust domains can share the same predictable backbone. One of their favorite parts: when someone asks for a cookbook or deeper reading, they can simply point to the official SPIFFE book.
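
Whatever the integration path, every consumer ends up handling SPIFFE IDs of the form `spiffe://<trust-domain>/<path>`. Below is a simplified, stdlib-only parser based on the character rules in the SPIFFE ID specification. It is a sketch, not the API of any official SPIFFE library such as go-spiffe, and the trust domain names used with it are hypothetical. Note that checking which trust domain an ID names says nothing about who issued the certificate; that still requires validating the SVID against the trust bundle.

```python
import re
from dataclasses import dataclass

# Simplified character rules from the SPIFFE ID specification.
_TRUST_DOMAIN = re.compile(r"[a-z0-9._-]+")
_PATH_SEGMENT = re.compile(r"[A-Za-z0-9._-]+")


@dataclass(frozen=True)
class SpiffeID:
    trust_domain: str
    path: str  # either "" or a path starting with "/"

    def __str__(self) -> str:
        return f"spiffe://{self.trust_domain}{self.path}"


def parse_spiffe_id(raw: str) -> SpiffeID:
    """Parse a SPIFFE ID, raising ValueError if it is malformed."""
    prefix = "spiffe://"
    if len(raw.encode("utf-8")) > 2048:
        raise ValueError("SPIFFE ID exceeds 2048 bytes")
    if not raw.startswith(prefix):
        raise ValueError("SPIFFE ID must start with spiffe://")
    trust_domain, slash, rest = raw[len(prefix):].partition("/")
    # The charset excludes ":", "@", "?" and "#", so ports, userinfo,
    # queries, and fragments are all rejected here.
    if not _TRUST_DOMAIN.fullmatch(trust_domain):
        raise ValueError(f"invalid trust domain: {trust_domain!r}")
    path = slash + rest
    if path:
        for segment in rest.split("/"):
            # Segments must be non-empty, not "." or "..", and use a restricted charset.
            if segment in ("", ".", "..") or not _PATH_SEGMENT.fullmatch(segment):
                raise ValueError(f"invalid path segment: {segment!r}")
    return SpiffeID(trust_domain, path)


def in_trust_domain(raw: str, trust_domain: str) -> bool:
    """True if raw is a well-formed SPIFFE ID that names trust_domain.

    This does NOT prove issuance; verify the SVID against the trust bundle for that.
    """
    try:
        return parse_spiffe_id(raw).trust_domain == trust_domain
    except ValueError:
        return False
```

With nested SPIRE servers, downstream servers typically issue identities in the same trust domain as the root, so a relying party compares against one name; federation instead links distinct trust domains, each with its own bundle.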

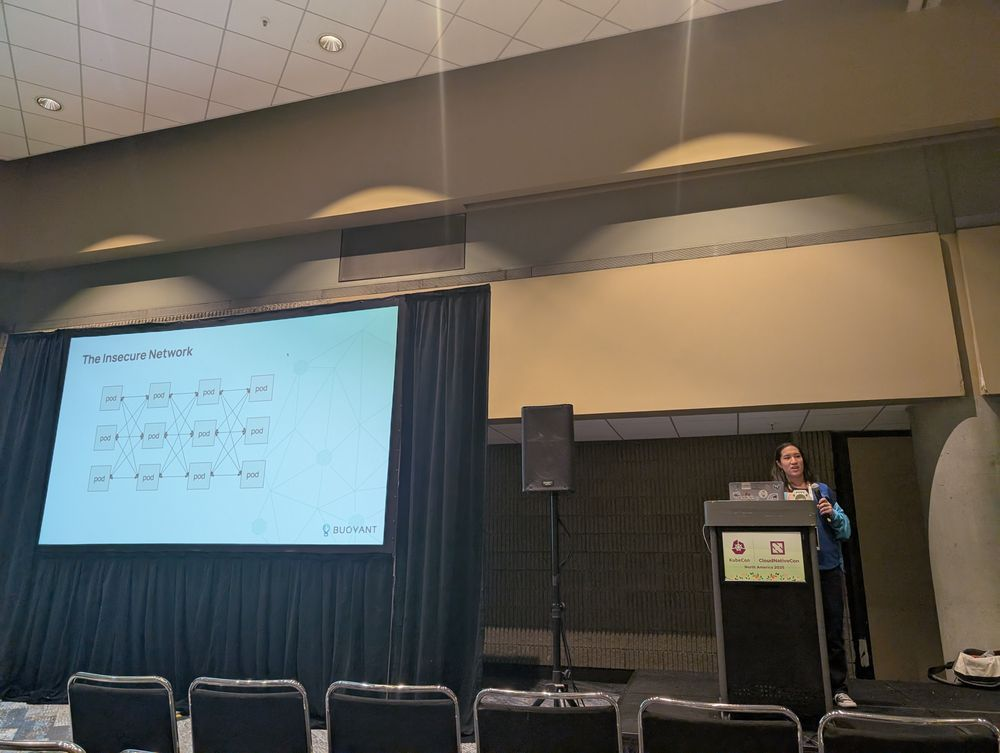

When the Network Itself Can’t Be Trusted

In “It’s 2025; Why Are You OK With an Insecure Network? 🤯” Alex Leong, Software Engineer at Buoyant, explained that even in a Kubernetes-driven world, the network remains a low-trust environment. We cannot rely on IP addresses or network isolation alone. She covered confidentiality, integrity, authentication, and authorization as key properties of a networked workload architecture. At the heart of it, TLS and mTLS need to anchor identity at the workload level rather than at the IP address or namespace.

Kubernetes gives you pieces like NetworkPolicy, IPsec, WireGuard, and CNI plugins, and service meshes like Linkerd or Istio can push mutual TLS into the runtime so identity travels with each connection. She explained that IP-based authentication via Kubernetes NetworkPolicy can suffer from eventual consistency, stale caches, and IP reuse, so it cannot guarantee that the peer behind an allowed IP is not an adversary.
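
To see why eventual consistency matters, here is a toy model of an IP allowlist that only learns about pod IP changes when it resyncs. It is not how any particular CNI plugin implements NetworkPolicy, and the pod names and IP address are hypothetical; it only illustrates the window in which a reused IP inherits another workload's access.

```python
from dataclasses import dataclass, field
from typing import Dict, Set, Tuple


@dataclass
class IpAllowlist:
    """Toy IP-based policy: it only sees pod IP changes when sync() runs."""
    allowed_ips: Set[str] = field(default_factory=set)

    def sync(self, pod_ips: Dict[str, str], allowed_pods: Set[str]) -> None:
        # Rebuild the allowlist from the current pod -> IP assignments.
        self.allowed_ips = {ip for pod, ip in pod_ips.items() if pod in allowed_pods}

    def permits(self, src_ip: str) -> bool:
        return src_ip in self.allowed_ips


def ip_reuse_demo() -> Tuple[bool, bool]:
    """Return (decision before resync, decision after resync) for a reused IP."""
    pod_ips = {"frontend-1": "10.0.0.5"}  # hypothetical pod name and IP
    policy = IpAllowlist()
    policy.sync(pod_ips, allowed_pods={"frontend-1"})

    # frontend-1 is deleted and its IP is reassigned to an unrelated pod.
    del pod_ips["frontend-1"]
    pod_ips["untrusted-job-1"] = "10.0.0.5"

    stale = policy.permits("10.0.0.5")  # the policy has not caught up yet
    policy.sync(pod_ips, allowed_pods={"frontend-1"})
    fresh = policy.permits("10.0.0.5")
    return stale, fresh
```

`ip_reuse_demo()` returns `(True, False)`: between the reassignment and the resync, the untrusted pod is admitted purely because of the address it was handed.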

The alternative Alex pushed toward is workload identity as the core primitive. Instead of trusting an IP, you bind each pod to a Kubernetes service account and surface that identity in mTLS certificates managed by a service mesh sidecar or ambient proxy. Both sides present certs, both sides verify, and authorization policies can say “this service account can talk to that one,” which is much clearer than juggling CIDR blocks. You can check out the Istio project for further research into service meshes.
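
The "this service account can talk to that one" rule can be sketched as a lookup keyed on identities rather than addresses. The sketch assumes the proxy has already completed the mTLS handshake and verified the peer's certificate chain, so all that remains is reading the SPIFFE ID from the certificate's URI SAN. The identity format follows Istio's `spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>` convention; the namespaces, service accounts, and policy table are hypothetical, and real meshes express this in their own policy resources (such as Istio's AuthorizationPolicy) rather than in application code.

```python
import re
from typing import Dict, Optional, Set, Tuple

# Istio-style workload identity: spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>
_ISTIO_ID = re.compile(r"spiffe://(?P<td>[a-z0-9._-]+)/ns/(?P<ns>[^/]+)/sa/(?P<sa>[^/]+)")

TRUST_DOMAIN = "cluster.local"  # Istio's default trust domain

# Hypothetical policy: destination (namespace, SA) -> allowed source (namespace, SA) pairs.
POLICY: Dict[Tuple[str, str], Set[Tuple[str, str]]] = {
    ("shop", "checkout"): {("shop", "frontend")},
    ("shop", "payments"): {("shop", "checkout")},
}


def peer_service_account(uri_san: str) -> Optional[Tuple[str, str]]:
    """Extract (namespace, service account) from a verified peer's URI SAN."""
    m = _ISTIO_ID.fullmatch(uri_san)
    if m is None or m.group("td") != TRUST_DOMAIN:
        return None
    return m.group("ns"), m.group("sa")


def authorize(peer_uri_san: str, destination: Tuple[str, str]) -> bool:
    """Allow the call only if the peer identity is listed for the destination."""
    source = peer_service_account(peer_uri_san)
    return source is not None and source in POLICY.get(destination, set())
```

Nothing in the decision depends on an IP address, so the reuse problem from the previous paragraph simply does not arise: a pod that inherits an address does not inherit a certificate.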

Centralized IAM Meets Cloud-Native Database Security

In the session “Modern PostgreSQL Authorization with Keycloak: Cloud Native Identity Meets Database Security,” Yoshiyuki Tabata, Senior OSS Consultant, and Gabriele Bartolini, VP and Chief Architect of Kubernetes at EDB, showed how they integrated the open-source IAM solution Keycloak with CloudNativePG, a Kubernetes-native PostgreSQL operator. In this design, database authentication and authorization are delegated to OAuth2 flows and tokens rather than relying on static database passwords or LDAP alone.

The big news for many attendees was that PostgreSQL 18 natively supports OAuth. From an identity and access management perspective, Keycloak can become the policy decision point. In this model, the client first obtains an OAuth token from Keycloak, then connects to PostgreSQL using OAuth as the authentication method. A validator checks the token, decides which role applies, and either maps the external identity directly or delegates the authorization decision to an external service.
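
PostgreSQL 18 performs token validation through loadable validator modules written in C, so the following is not that interface. It is a sketch of the decisions such a validator makes in the direct-mapping case: check the issuer, expiry, and scope of a token whose signature has already been verified, then map the external identity to a database role. The issuer URL, scope name, use of the `email` claim, and role table are all hypothetical.

```python
import time
from dataclasses import dataclass
from typing import Mapping, Optional

# Hypothetical deployment values; none of these come from the talk.
EXPECTED_ISSUER = "https://keycloak.example.com/realms/databases"
REQUIRED_SCOPE = "db:connect"
ROLE_MAP = {"alice@example.com": "app_readonly", "bob@example.com": "app_admin"}


@dataclass(frozen=True)
class ValidationResult:
    authorized: bool
    authn_id: Optional[str] = None  # external identity, recorded for auditing


def validate_token_claims(
    claims: Mapping[str, object], requested_role: str, now: Optional[float] = None
) -> ValidationResult:
    """Decide whether a signature-checked token may log in as requested_role."""
    now = time.time() if now is None else now
    if claims.get("iss") != EXPECTED_ISSUER:
        return ValidationResult(False)
    exp = claims.get("exp")
    if not isinstance(exp, (int, float)) or exp <= now:
        return ValidationResult(False)  # missing or expired
    if REQUIRED_SCOPE not in str(claims.get("scope", "")).split():
        return ValidationResult(False)
    identity = claims.get("email")
    if not isinstance(identity, str):
        return ValidationResult(False)
    # Map the external identity to exactly one database role; anything else is denied.
    return ValidationResult(ROLE_MAP.get(identity) == requested_role, identity)
```

Keeping the role mapping in one table makes the split the speakers described concrete: the identity provider vouches for who the user is, and the mapping decides which database role that identity may assume.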

Their demo walked through CloudNativePG creating and managing the PostgreSQL cluster, then a client using the device flow to obtain an access token before connecting. The end state is a cleaner split: Keycloak owns who you are and what you can do, while PostgreSQL focuses on data. CloudNativePG wires it together in a way that fits how people already run databases on Kubernetes.
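
The device flow is the OAuth 2.0 Device Authorization Grant (RFC 8628): the client shows the user a code to approve in a browser, then polls the token endpoint until approval. Below is a minimal sketch of that polling loop. The HTTP call is abstracted as a hypothetical `request_token` callable, and the sketch is not how libpq or any Keycloak client library implements it.

```python
import time
from typing import Callable, Mapping


class DeviceFlowError(Exception):
    """Raised when the authorization server ends the device flow with an error."""


def poll_for_token(
    request_token: Callable[[], Mapping[str, object]],
    interval: float = 5.0,
    expires_in: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Mapping[str, object]:
    """Poll the token endpoint until the user approves (RFC 8628, sections 3.4-3.5).

    request_token performs one token request with grant_type
    urn:ietf:params:oauth:grant-type:device_code and returns the parsed JSON body.
    """
    elapsed = 0.0
    while elapsed < expires_in:
        sleep(interval)  # wait at least `interval` seconds between polls
        elapsed += interval
        response = request_token()
        if "access_token" in response:
            return response
        error = response.get("error")
        if error == "authorization_pending":
            continue  # the user has not finished approving yet
        if error == "slow_down":
            interval += 5  # RFC 8628: add 5 seconds to this and all later intervals
            continue
        raise DeviceFlowError(str(error))  # e.g. access_denied or expired_token
    raise DeviceFlowError("expired_token")
```

Injecting `request_token` and `sleep` keeps the loop testable without a live Keycloak realm; in a real client, `request_token` would POST the `device_code` and `client_id` to the token endpoint.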

Scale, Governance, And Rollout In Service‑Mesh AuthN/AuthZ

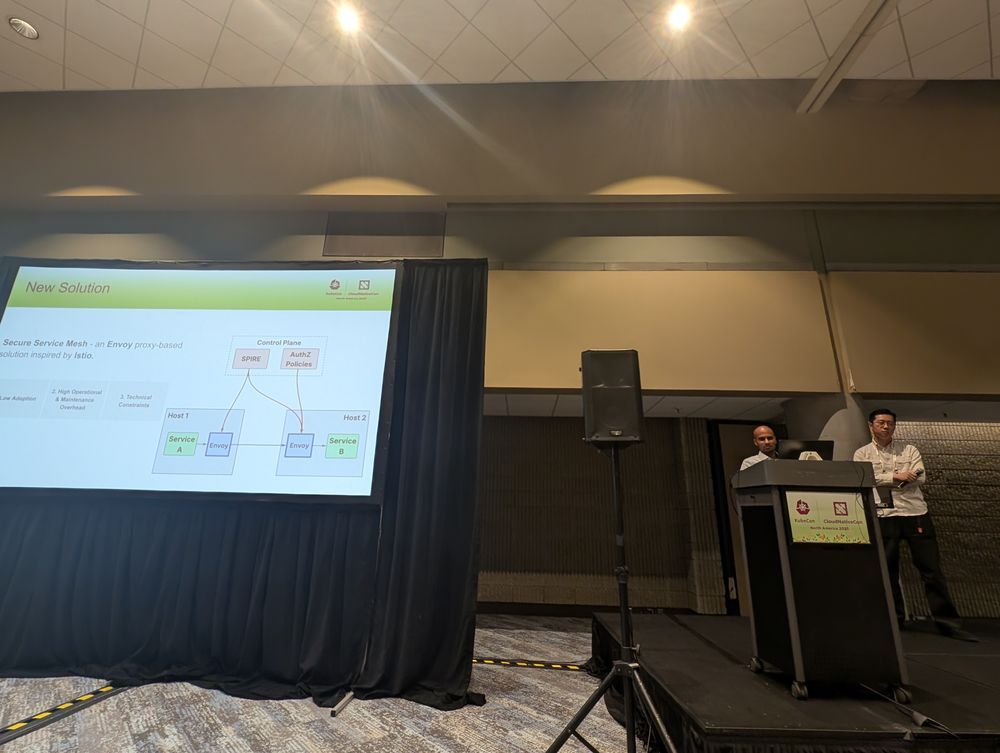

In the session “Authenticating and Authorizing Every Connection at Uber,” Yangmin Zhu, Staff Engineer, and Matt Mathew, Sr. Staff Engineer, both at Uber, described how they retrofitted a massive microservices estate with thousands of services to use a unified identity via a sidecar proxy model.

Engineering teams had previously tried a library-based approach, but watched that stall at about 10% adoption because it required thousands of engineers to update code across many languages. Operationally, upgrades and fixes were slow and brittle, and keeping behavior consistent across stacks was difficult. That combination pushed them to rethink the strategy around a service mesh model using Envoy, inspired by Istio, where applications stay mostly unaware and the proxy takes on identity and policy enforcement.

The duo explained that outbound TCP connections from each service container now carry a unique integer “class_ID” mark. Envoy on the node reads that mark, and an Envoy Manager component fetches X.509 certificates from a SPIRE agent through a delegated identity API, so connections carry SPIFFE-based identities. On the inbound side, the container scheduler assigns a unique port to each service, and the destination port carried in the Proxy Protocol header identifies the target service via a port lookup.
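
Both halves of this design reduce to table lookups. The sketch below parses a version 1 (text) PROXY protocol header to resolve the inbound target from its destination port, and maps an outbound class_ID to the identity its proxy should present. The talk did not say which PROXY protocol version Uber uses, and the port and class_ID tables, service names, trust domain, and SPIFFE ID layout are hypothetical. How the class_ID mark is read off a connection is out of scope here.

```python
from typing import Dict, NamedTuple


class ProxyHeader(NamedTuple):
    src_addr: str
    dst_addr: str
    src_port: int
    dst_port: int


def parse_proxy_v1(line: bytes) -> ProxyHeader:
    """Parse a text-format (v1) PROXY protocol header line, CRLF included."""
    if len(line) > 107 or not line.endswith(b"\r\n"):  # v1 lines are at most 107 bytes
        raise ValueError("not a PROXY protocol v1 header")
    fields = line[:-2].decode("ascii").split(" ")
    if len(fields) != 6 or fields[0] != "PROXY" or fields[1] not in ("TCP4", "TCP6"):
        raise ValueError("unsupported or malformed PROXY header")
    _, _, src, dst, src_port, dst_port = fields
    return ProxyHeader(src, dst, int(src_port), int(dst_port))


# Hypothetical tables a scheduler might publish to each host.
PORT_TO_SERVICE: Dict[int, str] = {31001: "rides-api", 31002: "payments"}
CLASS_ID_TO_SERVICE: Dict[int, str] = {7: "rides-api", 8: "payments"}
TRUST_DOMAIN = "example.internal"  # hypothetical


def inbound_service(header_line: bytes) -> str:
    """Identify the target service from the destination port in the PROXY header."""
    return PORT_TO_SERVICE[parse_proxy_v1(header_line).dst_port]


def outbound_spiffe_id(class_id: int) -> str:
    """Map an outbound connection's class_ID to the identity its proxy presents."""
    return f"spiffe://{TRUST_DOMAIN}/{CLASS_ID_TO_SERVICE[class_id]}"
```

Because the application never touches these tables, services can stay unaware of identity while the node-level proxy does all the work, which is the property that let this approach succeed where the library rollout stalled.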

The technical plumbing only solved half the problem, though. To drive adoption, the team invested heavily in end-to-end observability, a better developer experience, and a gradual rollout strategy. They leaned on Envoy’s RBAC shadow mode to watch real traffic and auto-generate candidate policies, then let teams review and promote them to enforcement, with stricter checks for critical services and more automation for noncritical ones. A “big red button” gave operators the ability to disable the system quickly if it degraded service.
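
The shadow-then-enforce workflow can be modeled in a few lines. Here, observed (source, destination) pairs from shadow-mode logs become candidate allowlists, only destinations promoted after review are enforced, and a kill switch reverts everything to allow-and-log. This mirrors the idea behind Envoy's RBAC shadow rules but is not Envoy configuration; the `min_count` threshold, service names, and class names are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List, Set, Tuple

Edge = Tuple[str, str]  # (source service, destination service)


def candidate_policies(observed: Iterable[Edge], min_count: int = 1) -> Dict[str, Set[str]]:
    """Turn shadow-mode traffic logs into per-destination allowlists for human review."""
    counts = Counter(observed)
    policies: Dict[str, Set[str]] = {}
    for (src, dst), n in counts.items():
        if n >= min_count:  # ignore edges seen too rarely to trust
            policies.setdefault(dst, set()).add(src)
    return policies


class Enforcer:
    def __init__(self, policies: Dict[str, Set[str]], enforced: Set[str]) -> None:
        self.policies = policies
        self.enforced = set(enforced)  # destinations whose policy was reviewed and promoted
        self.kill_switch = False  # the "big red button": allow-and-log everywhere
        self.shadow_denials: List[Edge] = []

    def allow(self, src: str, dst: str) -> bool:
        permitted = src in self.policies.get(dst, set())
        if self.kill_switch or dst not in self.enforced:
            if not permitted:
                self.shadow_denials.append((src, dst))  # record what enforcement would block
            return True
        return permitted
```

Promoting destinations one at a time is what makes the rollout reversible: a bad policy affects only the services already moved to enforcement, and the kill switch restores the shadow behavior without redeploying anything.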

Kubernetes As The AI Substrate

Underneath all the logos and project names, this KubeCon made something clear: Kubernetes is no longer “just” an app platform. It is quietly becoming the default substrate for AI systems, too. Dynamic resource allocation for GPUs, AI conformance programs, and model-serving stacks sitting alongside traditional workloads all point in the same direction. Agents, and the training and inference workloads behind them, need the same clusters, schedulers, and observability stacks as any modern application.

The AI attack surface of pipelines, data stores, and agents now rides the same control planes as web apps and batch jobs. We should think about identity, segmentation, and the supply chain for AI artifacts with the same seriousness as we do for our APIs.

Identity All The Way Down

The talks about SPIFFE/SPIRE, service meshes, and fine-grained data access made it clear that from this community's point of view, trust is moving off the network and deeper into identity. IP addresses and static passwords are too blunt for a world of short-lived pods, cross-cloud agents, and GPUs shared across tenants. Workloads, users, and models must start with strong identities. Policies need to focus on who is talking and what they are allowed to touch, not which subnet they came from.

From Security Features to Security Habits

Another encouraging trend across the talks is that security is becoming a larger part of how teams design the overall tech stack. As teams drown in CVEs and alerts, they are recognizing the need for safer defaults and workflows that make “the secure option” the path of least resistance.

There was a lot of focus on gradual rollouts, shadow modes, pre-flight checks, and big red buttons. Retrofitting identity and auth into thousands of services, or tightening access to petabytes of data, only works if you can do it incrementally, observe the impact, and back out safely. The challenge is going to be getting adoption on legacy systems, where change is the scariest for teams.

Building For Agents And Quantum

AI agents are pushing identity and authorization into new territory as they chain tools across clouds and act on behalf of humans with real privileges. Thankfully, teams have been working on this for quite a while, and projects like KServe have emerged to help teams deal with the operational realities that LLMs bring.

At the same time, post-quantum cryptography is forcing us to rethink encryption. Updates are already appearing in TLS stacks well before “Q-day,” when a quantum computer will be able to break legacy RSA-based encryption. The reality that post-quantum algorithms come with much larger keys and signatures, and therefore more network overhead, is something we are just beginning to discuss seriously.
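
A back-of-the-envelope calculation shows where that overhead comes from. The sketch compares a classical TLS 1.3 handshake (X25519 key exchange, Ed25519 certificates) with a post-quantum one (the hybrid X25519 plus ML-KEM-768 key share used by the X25519MLKEM768 group, and ML-DSA-44 certificates), counting only key shares, public keys, and signatures. The two-certificate chain and the choice of parameter sets are assumptions; real deployments vary.

```python
from typing import Tuple

# Sizes in bytes. X25519: RFC 7748. Ed25519: RFC 8032.
# ML-KEM-768: FIPS 203. ML-DSA-44: FIPS 204.
CLASSICAL_KEX = (32, 32)              # X25519 client share, server share
HYBRID_KEX = (32 + 1184, 32 + 1088)   # + ML-KEM-768 encapsulation key / ciphertext
ED25519 = (32, 64)                    # public key, signature
ML_DSA_44 = (1312, 2420)              # public key, signature


def handshake_crypto_bytes(kex: Tuple[int, int], sig: Tuple[int, int]) -> int:
    """Rough count of key-exchange and signature bytes in one TLS 1.3 handshake.

    Assumes a two-certificate chain (leaf + intermediate): two public keys,
    two certificate signatures, and one CertificateVerify signature.
    Ignores all other X.509 and TLS framing overhead.
    """
    client_share, server_share = kex
    public_key, signature = sig
    return client_share + server_share + 2 * public_key + 3 * signature


classical = handshake_crypto_bytes(CLASSICAL_KEX, ED25519)
post_quantum = handshake_crypto_bytes(HYBRID_KEX, ML_DSA_44)
print(classical, post_quantum)  # 320 12220
```

Under these assumptions the cryptographic payload grows from 320 bytes to 12,220 bytes, roughly 38 times larger, which is enough to push handshake messages across noticeably more packets.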

Fortunately, for these and many more challenges, the CNCF is going to be here to guide us through.

Looking Ahead To The Next 10 Years

On the keynote stage at KubeCon 2025, someone made the point that containers were the on-ramp, not the destination. This community has quietly grown into critical infrastructure for how the world now runs software. In the span of a decade, Kubernetes went from a "weird new scheduler" to how we deploy nearly everything. KubeCon went from a niche gathering to a place where people compare notes on how to keep hospitals, banks, and AI systems safe and boringly reliable.

If you were lucky enough to be in Atlanta this year, you probably did not come home with a neat, three-point plan. If you are like your author, you came home with a hastily written list of projects to try, talks to rewatch, and people to follow up with. For a few days, we got to see how other teams are wrestling with the same problems and sharing their answers through open software. We got to see each other as people united in common goals. In a field that moves this fast, that might be the most valuable trust anchor of all.