In the race to innovate, software has repeatedly reinvented how we define identity, trust, and access. In the 1990s, the web made every server a perimeter. In the 2010s, the cloud made every identity a workload. Here in 2026, agentic AI makes every action autonomous.

Throughout the second annual NHIcon, a virtual conference presented by Aembit, the audience heard the recurring theme that traditional identity and access management, originally built for humans and deterministic workflows, is fundamentally inadequate for the era of agentic AI and non‑human identities (NHIs).

The assumptions of static roles, long‑lived credentials, and session‑based trust, which have been the default way to approach the problem, are now actively magnifying risks in a world where agents autonomously navigate systems. The speakers throughout the day made it clear that we must evolve our security models to match the autonomy and complexity of these agents. The alternative is to escalate our security problems exponentially as we build faster than ever.

NHIcon is available to watch online, and we encourage you to do so. In the meantime, here are just a few key highlights from this year's event.

Identity at the Speed of Autonomy

In the keynote “Rethinking Identity for Agents That Don’t Fit the Model,” David Goldschlag, CEO & Co-founder at Aembit, told us that agents are not people, yet they must access the same sensitive systems that humans do. They act, they escalate, they connect, but they don’t follow the linear workflows traditional, human-centric identity and access management tooling was designed for.

Agents don’t perform predetermined tasks in a predictable sequence of steps. They bridge systems, kicking off a chain of actions that traverse data stores, APIs, and internal tools. All too often, there is no human constantly in the loop. This autonomy introduces new risks and amplifies, at unprecedented scale, the underlying risks we have been addressing for years.

David’s framework for securing agentic systems centered on the three pillars of identity, invocation context, and secretless execution. This means building controls that understand who the agent is, why it’s acting, and what it is allowed to do the moment the action needs to occur.

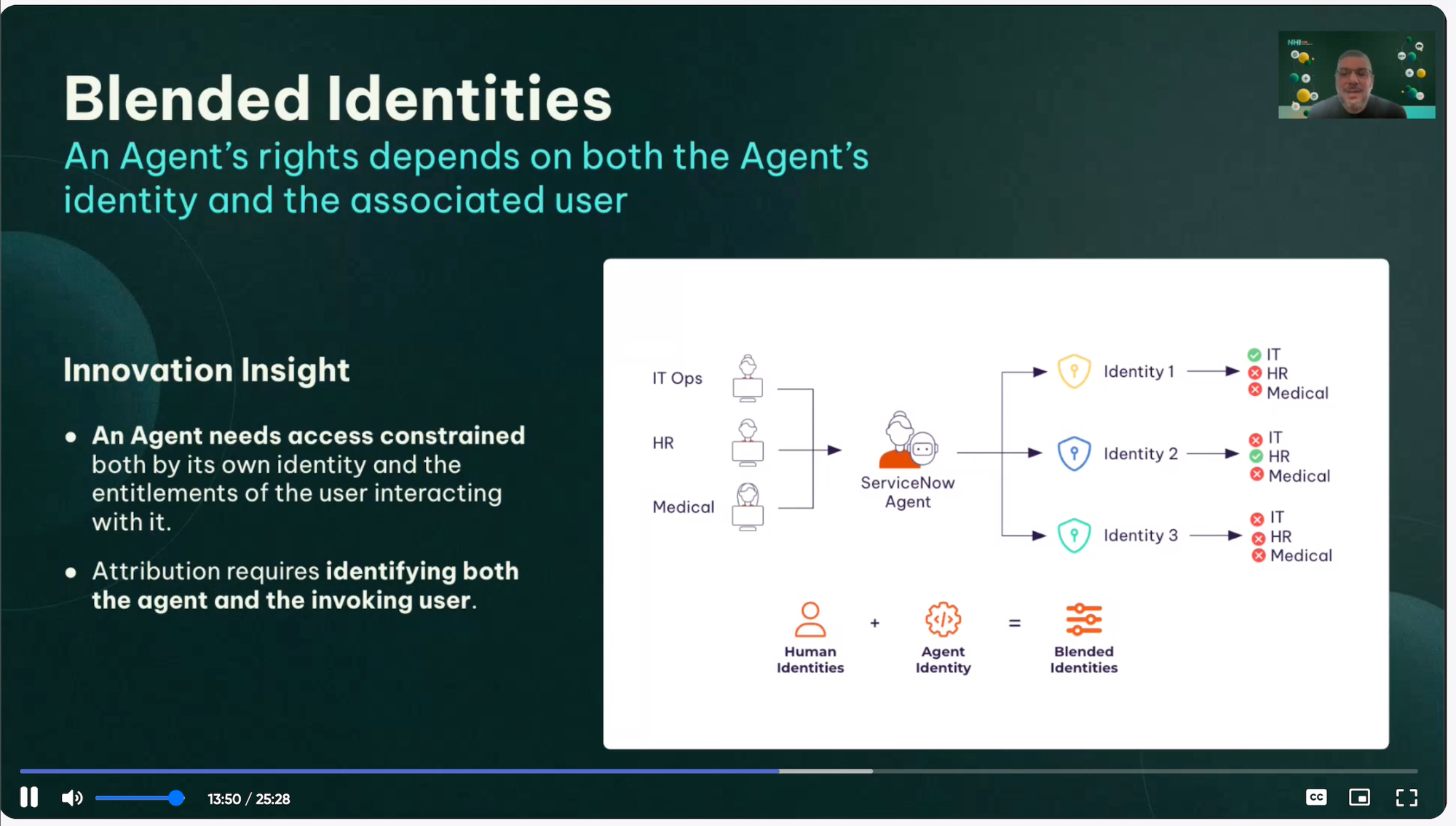

David told us that Zero Trust for humans, while not uniformly implemented, is at least well understood, requiring adherence to the principles of least privilege, multifactor authentication, device trust, and conditional access. But Zero Trust for agents must go further. It must blend the agent’s identity with the human’s context, enforce ephemeral credentials, and make every action auditable and attributable. This requires a reimagining of trust for systems that can plan, pivot, and act without human oversight. This is the exact driving force behind the solutions Aembit is building.
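To make the idea concrete, here is a minimal sketch of an access decision made at the moment of invocation. It is our own illustration, not Aembit's implementation: the `InvocationContext` fields, the `POLICY` table, and the `authorize` function are all hypothetical names, and a real system would verify the agent's identity cryptographically rather than trust a string.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InvocationContext:
    agent_id: str       # who the agent is
    on_behalf_of: str   # the human whose request triggered the action
    purpose: str        # why the agent is acting
    action: str         # what it wants to do, e.g. "read:tickets"


# Hypothetical policy table: (agent, purpose) -> actions allowed for that purpose.
POLICY = {
    ("support-agent", "summarize-ticket"): {"read:tickets"},
}

AUDIT_LOG = []  # one record per decision, allowed or denied


def authorize(ctx: InvocationContext, ttl_seconds: int = 60) -> Optional[dict]:
    """Decide at invocation time; return a short-lived credential or None."""
    allowed = ctx.action in POLICY.get((ctx.agent_id, ctx.purpose), set())
    # Record every decision so each action is attributable to agent and human.
    AUDIT_LOG.append({
        "agent": ctx.agent_id,
        "human": ctx.on_behalf_of,
        "purpose": ctx.purpose,
        "action": ctx.action,
        "allowed": allowed,
        "at": time.time(),
    })
    if not allowed:
        return None
    # The agent never stores a long-lived secret; it receives a token scoped
    # to this one action that expires shortly after issuance.
    return {
        "token": secrets.token_urlsafe(16),
        "scope": ctx.action,
        "expires_at": time.time() + ttl_seconds,
    }
```

Calling `authorize(InvocationContext("support-agent", "alice", "summarize-ticket", "read:tickets"))` returns a scoped, expiring credential, while the same agent asking for `"delete:tickets"` gets `None`. Both attempts land in the audit log with the human and the purpose attached.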

IAM Meets Machine Identity

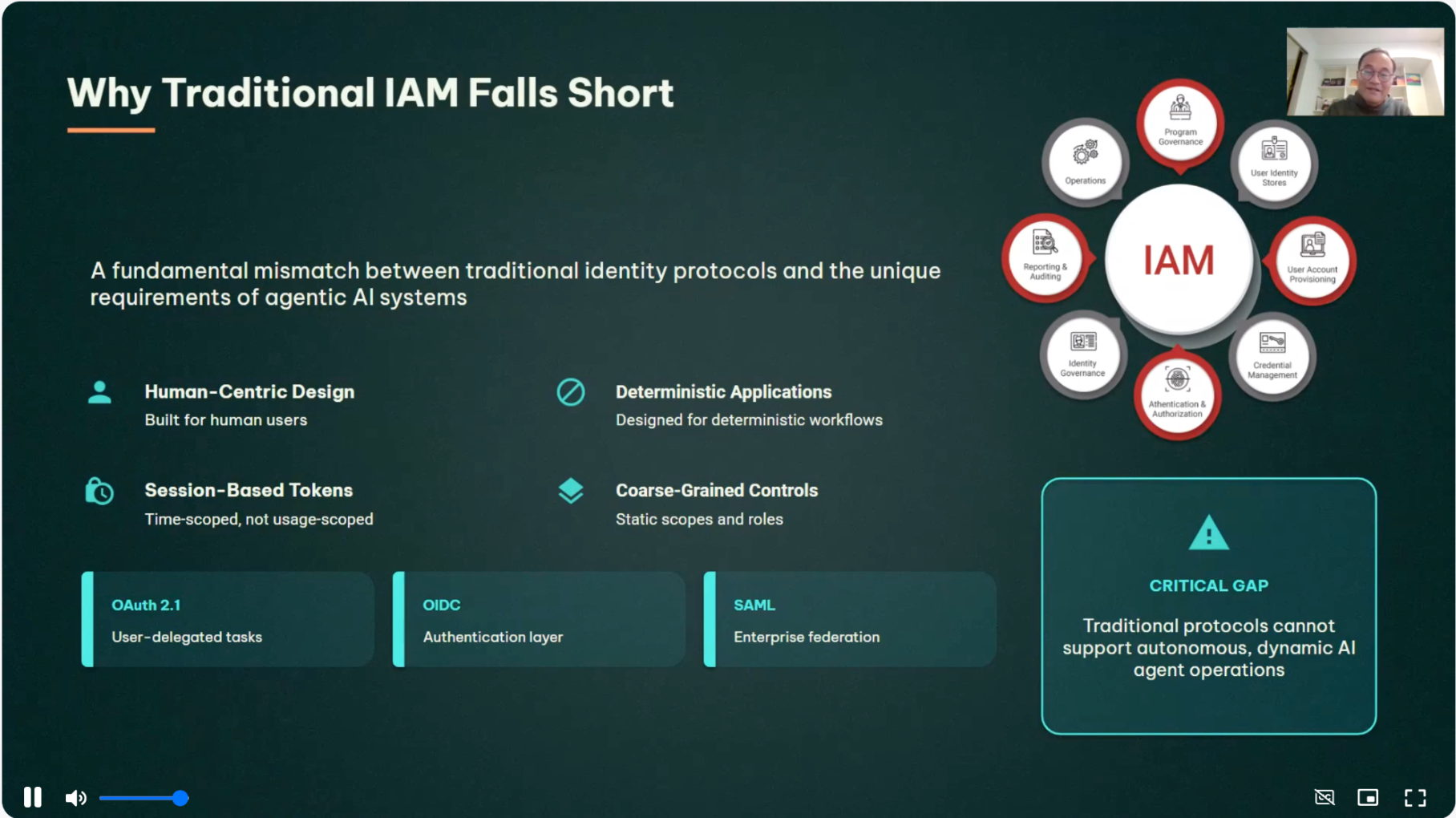

In his session, “Reimagining IAM for Agentic AI: Building Secure, Accountable Non-Human Identity Ecosystems,” Ken Huang, AI Security Researcher, Author, and Adjunct Professor at the University of San Francisco, explained that traditional IAM fails agents because it assumes determinism and human intent. Classic IAM systems assign roles and session tokens and expect predictable behavior, static rights, and long‑lived sessions.

But agents are ephemeral by nature and are task‑scoped as they operate across boundaries. Critically, they are non-deterministic: the same input does not always produce the same result.

Ken argues for a fundamentally different identity model built on dynamic, cryptographically anchored agent identities. These identities should be rich, verifiable, and context‑aware, encompassing attributes such as creator, capabilities, training provenance, and expected behavior.

Two classes of agent identity emerged. The first is "persistent agents," which are long-lived and able to execute complex workflows over time. These agents must maintain state across sessions and restarts.

The second class is what he called "ephemeral agents." These are short-lived and task‑scoped. Each execution is accomplished with a distinct identity instance.

Crucially, he said, every identity, whether persistent or ephemeral, should be backed by verifiable credentials and anchored in decentralized identifiers (DIDs). While this might sound somewhat academic, in reality, it creates an audit trail with provable links between who created the agent, what it is authorized to do, and how it behaves over time.
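As a rough sketch of what such identity records could look like, the snippet below models the two classes of agent with a link back to the creator. Everything here is an assumption for illustration: the `AgentIdentity` fields, the helper names, and the choice to derive an ephemeral identity from a persistent parent are ours, not Ken's. The `did:example:` strings are placeholders rather than resolvable DIDs, and the signing step that would make these credentials verifiable is omitted.

```python
import uuid
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AgentIdentity:
    did: str                          # placeholder identifier, not a resolvable DID
    creator: str                      # who created the agent
    capabilities: frozenset           # what it is authorized to do
    kind: str                         # "persistent" or "ephemeral"
    parent_did: Optional[str] = None  # identity that spawned this one, if any


def new_did() -> str:
    # "did:example:" is a placeholder method for illustration only;
    # a real deployment would use a registered DID method.
    return f"did:example:{uuid.uuid4()}"


def create_persistent(creator: str, capabilities) -> AgentIdentity:
    return AgentIdentity(new_did(), creator, frozenset(capabilities), "persistent")


def spawn_ephemeral(parent: AgentIdentity, task_capabilities) -> AgentIdentity:
    """Mint a distinct identity instance for one task execution."""
    requested = frozenset(task_capabilities)
    # Assumption: an ephemeral instance may only narrow its parent's capabilities.
    if not requested <= parent.capabilities:
        extra = sorted(requested - parent.capabilities)
        raise PermissionError(f"not granted to parent: {extra}")
    return AgentIdentity(new_did(), parent.creator, requested, "ephemeral", parent.did)
```

Two executions of the same task get two different identifiers, yet both trace back to the same parent and creator, which is the kind of provable link the audit trail depends on.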

We cannot simply attach cryptographic keys to agents. We must change our approach, making identity dynamic, context‑aware, and continually validated. This is a marked departure from long‑lived tokens, which have no semantic meaning beyond “this session is valid,” and bring a whole host of issues.

The Sprawl Problem: Agents Accelerate Non‑Human Identity Growth

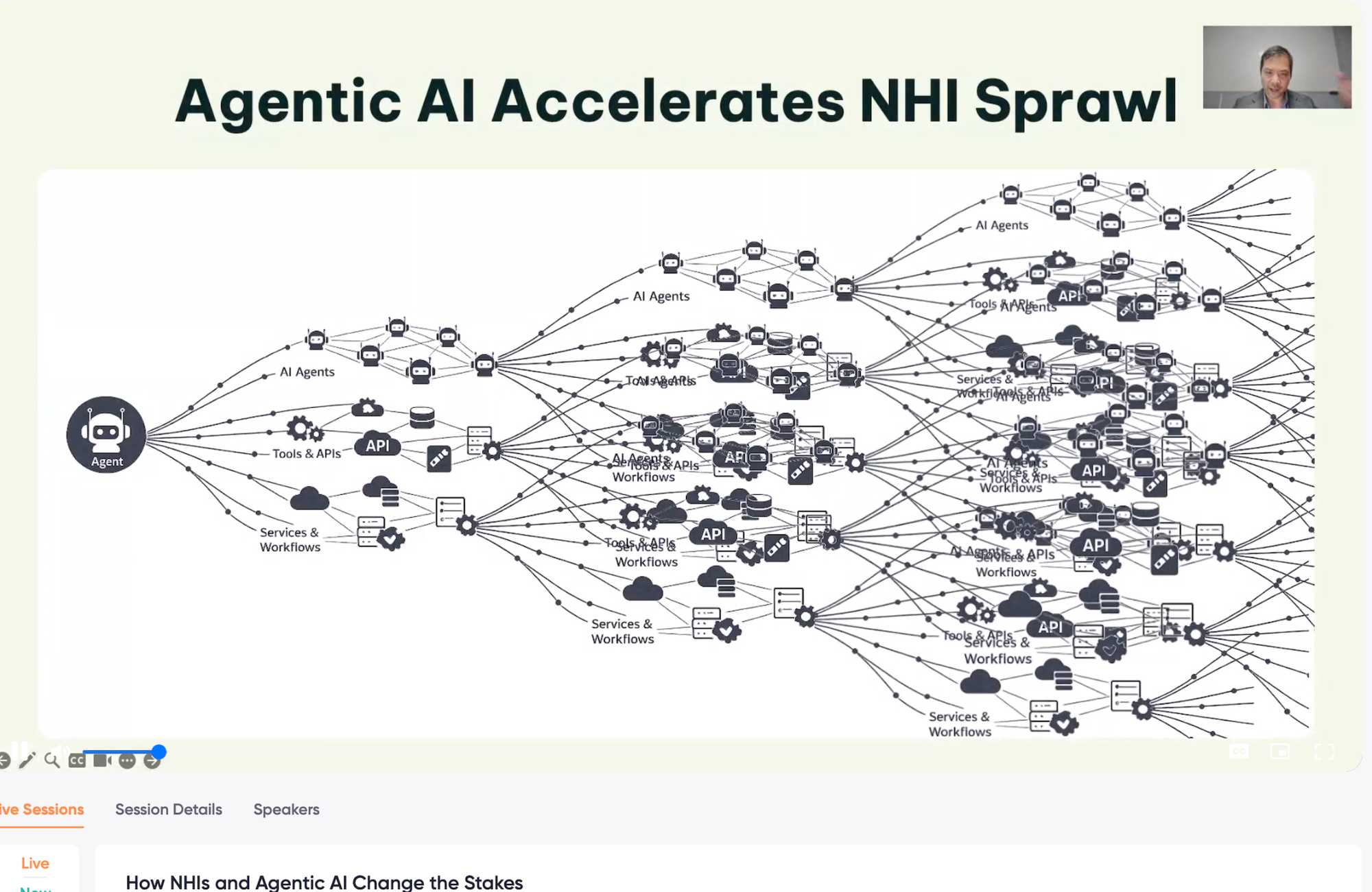

In the talk from John Yeoh, Chief Scientific Officer at the Cloud Security Alliance, “How NHIs and Agentic AI Change the Stakes,” the problem was framed at a strategic scale. Every major technological shift, from web to cloud to mobile, has created a proliferation of identities and control points. Agentic AI expands that proliferation not linearly, but exponentially.

John walked us through how, every time technology creates a new control plane, attackers exploit old weaknesses in new ways. For example, the goals of SQL injection align with database abuse via service accounts, and cross‑site scripting (XSS) essentially shifts to session token abuse. With agents, prompt injection becomes a vector for NHIs to seize credentials and APIs.

The consequence is more identities and new unintended access paths. Agents call other agents and instantiate sub‑agents. They access and transfer data, sometimes a lot of it. Instead of creating a new perimeter to defend, this creates a dynamic web of identities, sessions, tokens, and behaviors that defy the static policies that drive so many legacy security tools.

To govern this sprawl, John emphasized that we must shift from point‑in‑time checks to continuous validation. This means focusing on an identity's behavior, intent, and outcomes. These must be monitored and assessed over time. We must continuously confirm that any entity's behavior aligns with its stated purpose. We cannot rely on retrospective audits of an agent’s actions; if we do, we will only fall further behind.

Beyond Scripts: Why Identity Drift Matters

In his session, “Governing the Ghost in the Machine,” Gaurav Singodia, Senior Manager, Cloud DevOps & SRE at Snowflake, spoke from a practical operations perspective, drawing on his experience implementing real solutions to the identity crisis they faced. He explained that modern agents differ from scripts in one critical way: they pursue goals rather than following predetermined instructions. A script executes a known sequence. An agent identifies subgoals, reallocates resources, and adapts.

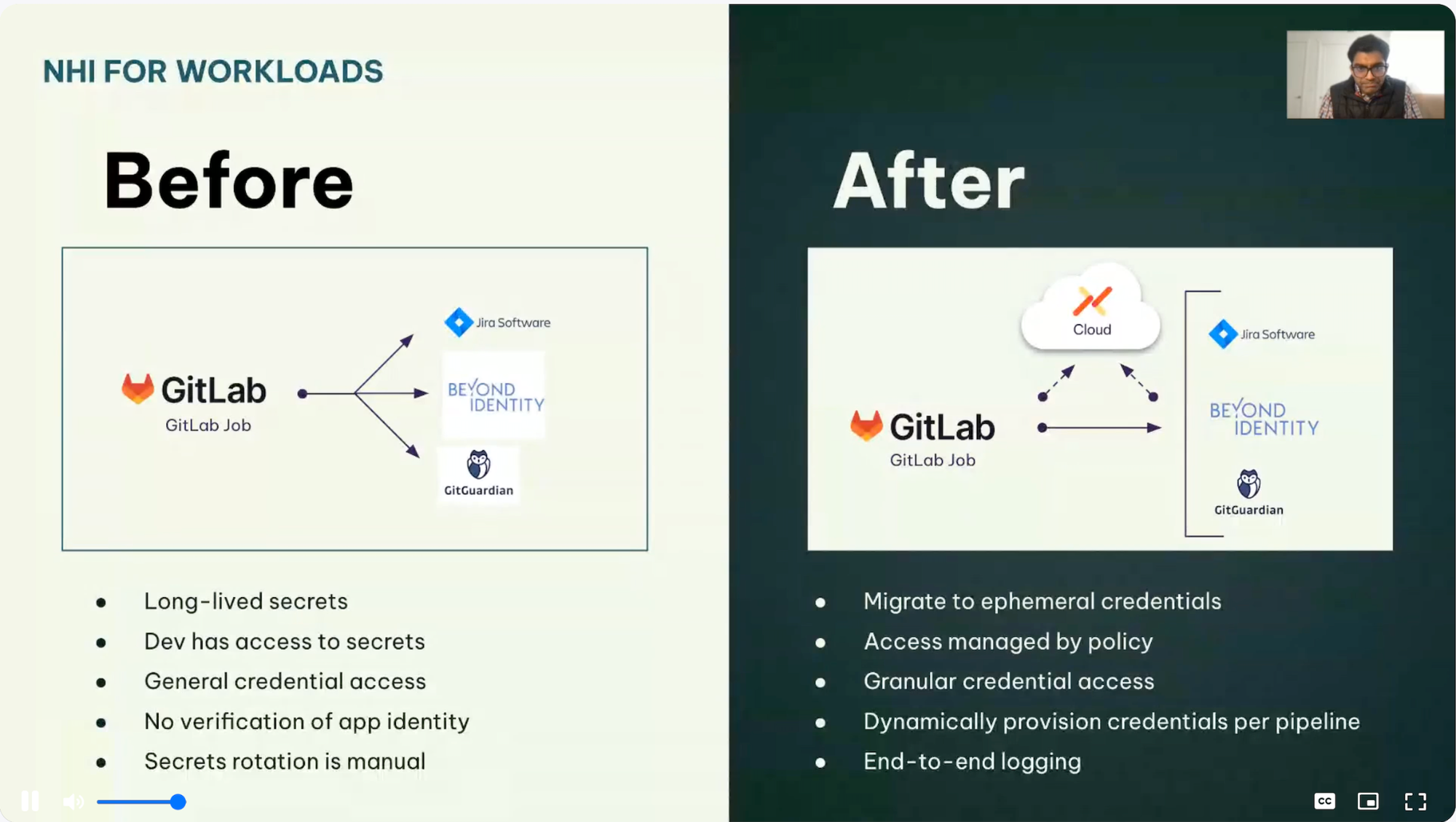

This autonomy introduces an issue of "identity drift." Permissions that made sense at creation may no longer align with an agent’s behavior as it evolves. Static IAM assumptions of stable roles, predictable behaviors, and long‑lived credentials simply cannot accommodate the needs of agentic systems in the modern enterprise.
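One simple way to picture identity drift is as the gap between what an agent has been granted and what it has actually been observed doing. The sketch below is our own illustration, not something shown in the session; the `detect_drift` function and the permission strings are hypothetical, and a real system would pull both sets from policy and telemetry.

```python
def detect_drift(granted: set, observed: set) -> dict:
    """Compare what an agent may do with what it has actually done."""
    return {
        # Granted but never exercised: candidates for removal.
        "unused": sorted(granted - observed),
        # Attempted without a grant: behavior has moved beyond the original role.
        "out_of_scope": sorted(observed - granted),
    }
```

For an agent granted `{"read:orders", "write:orders", "read:customers"}` that has only been seen using `read:orders` and attempting `read:billing`, the two extra grants show up under `unused` and the billing attempt under `out_of_scope`. Either list being non-empty is a signal that permissions and behavior no longer match.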

Gaurav offered some real insights from his work in SRE operations. He has helped Snowflake shift away from long‑lived service accounts and embrace ephemeral credentials, where the agent never actually holds secrets. Instead, dynamic metadata exchange and conditional policies determine access at the point of action.

This goes far beyond security hygiene and posture. The shift means governance is now built in by design. When agents don’t hold secrets, and access decisions are made at invocation time based on policy, the risk surface shrinks dramatically. But this requires a rethink of runtime environments, identity federation, and tooling that can enforce these principles in real time.

You can read more about the shift happening at Snowflake in our thorough recap of their session at SecDays 2025.

Trust, Identity, and Continuous Validation

NHIcon 2026 showed that the real conversation about securing agentic AI cannot just be about model size or prompt injection. We must now focus our efforts on identity and trust. Legacy approaches, mostly based on human access, are insufficient and are liabilities in agentic systems. In a world where agents act autonomously, plan dynamically, and operate across boundaries, identity must evolve from a static label to a contextual, continuously validated signal.

Static Models Meet Dynamic Actors

Legacy IAM assumes identity is stable: a user, a role, a set of permissions. But agents are not users. They blend contexts, pursue goals, and adapt in real time. Governing them requires blending agent and human context, and shifting from role-based to task-scoped permissions that reflect purpose, not just entitlement.

Session-based trust also breaks down. Agents don’t operate within bounded sessions. They re-enter systems long after initial authentication, and call tools and APIs through proxies. In this landscape, trust must be reevaluated at each action, not merely granted once.

Permission Becomes Risk

Throughout the event, almost every speaker reiterated something we have been talking about at GitGuardian for years, namely that long-lived permissions, once convenient, now represent latent risk.

As agents evolve or drift from their original task, their access can outlive their intent. This is identity drift, and it’s not theoretical. Unused or forgotten permissions become attack vectors, especially when agents act across multiple systems with little oversight.

Attribution Requires Intent

Audit logs that record actions aren’t enough. In agentic workflows, intent is everything. Security teams need to know not just what an agent did, but why. Analysts need to immediately know who initiated the action, under what conditions, and with what constraints. Without semantic context, attribution fails, giving us the opposite of good governance.

Towards Real-Time Identity Governance

To survive this era, defenders must adopt continuous validation as a control strategy. Not quarterly reviews. Not token expiration. Real-time monitoring of behavior against expected patterns, with the ability to revoke access immediately if divergence occurs.

That requires architectures that ingest identity context and behavioral metadata, and policies that grant authorization based on goals, not roles. It also requires rethinking credentials so that they automatically become invalid once used for the specific task that required them. And it demands telemetry that captures meaning, not just motion.
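A credential that invalidates itself after one use can be sketched in a few lines. This is a toy, in-memory illustration under our own assumptions: `SingleUseCredentialStore` and its methods are hypothetical names, and a production system would need durable storage, signed tokens, and protection against concurrent redemption.

```python
import secrets
import time


class SingleUseCredentialStore:
    """Issues credentials that are valid for one task and one use."""

    def __init__(self):
        self._active = {}  # token -> (task, expiry on the monotonic clock)

    def issue(self, task: str, ttl_seconds: float = 30.0) -> str:
        token = secrets.token_urlsafe(16)
        self._active[token] = (task, time.monotonic() + ttl_seconds)
        return token

    def redeem(self, token: str, task: str) -> bool:
        # pop() removes the token on the first attempt, so it cannot be
        # replayed, even if that attempt fails the task or expiry check.
        entry = self._active.pop(token, None)
        if entry is None:
            return False
        issued_for, expires_at = entry
        return issued_for == task and time.monotonic() < expires_at
```

A token issued for `"export-report"` redeems successfully once for that task. A second attempt with the same token fails, as does any attempt to use it for a different task or after it has expired.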

These aren’t aspirational goals awaiting future technological advancements. Today, verifiable credentials, decentralized identifiers, JIT access platforms, and anomaly-aware policy engines already exist. What’s missing is the will to replace brittle legacy systems with models that embrace the complexity of autonomy, while still enforcing control.

Fortunately, there are companies like GitGuardian that can help you build your roadmap toward a future that does not rely on long-lived credentials.