What do the terms identity, AI, workload, access, SPIFFE, and secrets all have in common? These were the most common words used at CyberArk's Workload Identity Day Zero in Atlanta ahead of KubeCon 2025.

Across an evening full of talks and hallway conversations, the discussion kept coming back to the fact that we have built our infrastructure, tools, and standards around humans, then quietly handed the keys to a fast-multiplying universe of non-human identities (NHIs). However, the evening didn't dwell on what we have gotten wrong; it focused on what we are getting right as we look toward a brighter future for workload identity.

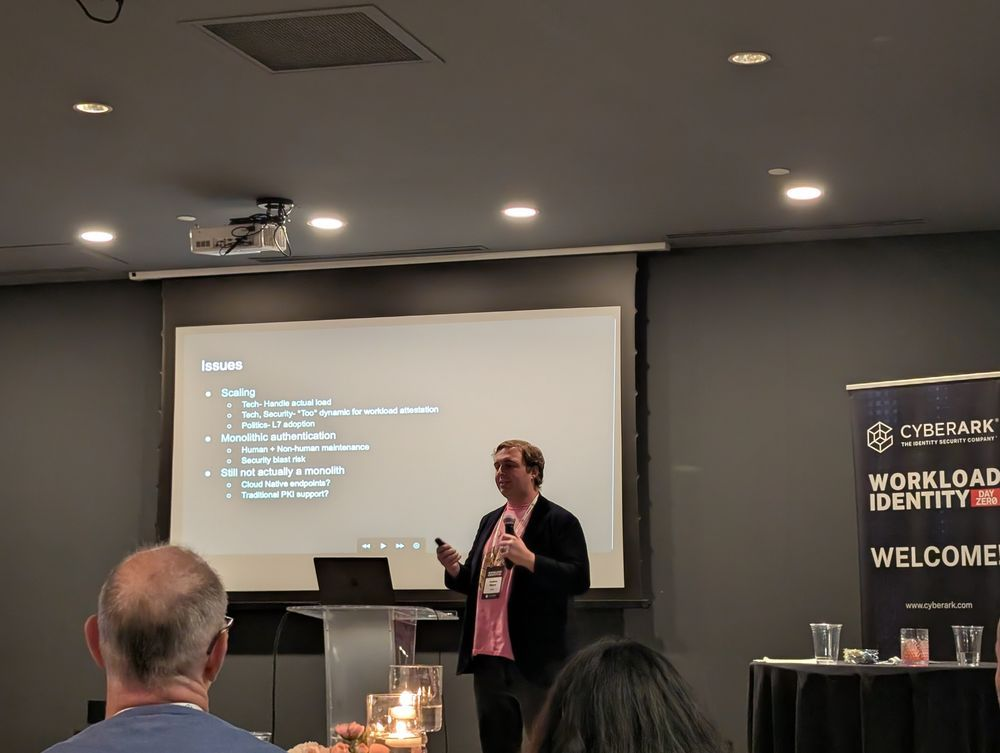

State of Workload Authentication

Every speaker discussed what has happened so far and how we have reached the state in which so many companies find themselves. These workload identities, in the form of services, agents, CI jobs, Lambdas, and more, are mostly authenticated today with long-lived API keys that are, more likely than not, overprivileged. We have granted standing access too often. And while some teams have embraced PKI setups at scale, they are trapped in a complexity that only a handful of experts truly understand.

The result is explosive complexity as teams face multi-cloud and hybrid environments, multiple languages, and increasingly complex org charts. It is no wonder that every siloed team has come up with its own ad hoc solutions over the years. But that means it is unlikely that any governance model will be a good fit, preventing us from leveraging a single management system. There is also the fact that if an attacker gets hold of a single NHI credential, they gain a huge, often invisible foothold with a massive blast radius.

At the same time, scale and AI are turning this from an “annoying” threat into an “existential” one. Workloads now spin up, talk to each other, cross trust domains, and die off in seconds, while organizations want billions of attestations per day without hiring an army just to rotate secrets. Now, agentic AI shows up and starts acting on our behalf. It calls APIs, touches sensitive data, and hops across providers. We often can no longer tell whether a user or an autonomous agent triggered an action.

We are beginning to recognize the need for clean attribution, access governance, and logging. Everyone on stage was essentially describing the same issue: we can’t keep handing out magic tokens to non-human actors and hope that spreadsheets, YAML, and “best effort” PKI will save us.

Weaving A Shared Workload Identity Story

The opening keynote from Andrew Moore, Staff Software Engineer at Uber, "From Bet to Backbone, Securing Uber with SPIRE," set the tone for the evening. For Uber, all of this work comes down to external customer trust. Their SPIRE journey really began when they admitted the impossibility of governing “thousands of solutions at scale.”

Andrew's team moved toward a single, SPIFFE-based workload identity fabric that can handle hundreds of thousands to billions of attestations per day. They treat SPIRE as the “bottom turtle.” This means trusted boot, agent validation, centralized signing, and tight, well-designed SPIFFE IDs that don’t accumulate junk.
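To make "tight, well-designed SPIFFE IDs" a little more concrete, here is a minimal sketch of a strict SPIFFE ID parser. It is not Uber's implementation. The character rules reflect our reading of the SPIFFE ID specification (a `spiffe://` scheme, a lowercase trust domain, and non-empty path segments with no `.` or `..`), and the function name `parse_spiffe_id` and the example ID are hypothetical.

```python
import re

# Character sets as we understand them from the SPIFFE ID specification.
TRUST_DOMAIN_RE = re.compile(r"[a-z0-9._-]+")
PATH_SEGMENT_RE = re.compile(r"[A-Za-z0-9._-]+")
MAX_ID_BYTES = 2048  # upper bound on the full ID length


def parse_spiffe_id(value: str) -> tuple[str, list[str]]:
    """Return (trust_domain, path_segments) or raise ValueError.

    Hypothetical helper: a deliberately strict check that rejects
    anything that could let "junk" creep into identity names.
    """
    prefix = "spiffe://"
    if not value.startswith(prefix):
        raise ValueError("SPIFFE ID must start with spiffe://")
    if len(value.encode("utf-8")) > MAX_ID_BYTES:
        raise ValueError("SPIFFE ID is too long")

    trust_domain, slash, path = value[len(prefix):].partition("/")
    if not TRUST_DOMAIN_RE.fullmatch(trust_domain):
        raise ValueError("invalid trust domain")
    if not slash:
        return trust_domain, []  # an ID with no path names the trust domain

    segments = path.split("/")
    for segment in segments:
        # Empty segments (double or trailing slashes) fail the regex check.
        if segment in (".", "..") or not PATH_SEGMENT_RE.fullmatch(segment):
            raise ValueError(f"invalid path segment: {segment!r}")
    return trust_domain, segments
```

A team could layer its own naming convention on top of this, for example by requiring a fixed number of path segments, so that every ID stays predictable enough to write policy against.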

Tying AI To Workloads

Brett Caley, Senior Software Security Engineer at Block, followed a story arc similar to Uber's in his talk "WIMSE, OAUTH and SPIFFE: A Standards-Based Blueprint for Securing Workloads at Scale." The core question they needed to answer was how to prove that a workload "is who they say they are." Their team went from plaintext keys in Git and bespoke OIDC hacks to “x509 everywhere” and SPIRE-driven attestations. They have rolled out systems that can issue credentials where the workload is, and at the speed developers demand. He explained that they deployed SPIRE “where it should exist.”

Brett also tied all of this to agentic AI and our tendency to anthropomorphize, for example by giving AI agents names in Slack. He said this might evoke Black Mirror storylines, but we need to remember that these 'human-like agents' are all still workloads that need narrowly scoped permissions, explicit authorization of actions, and confirmation of intent.

When something goes wrong, he argued, the right question isn’t “What did the AI do to us?” but “How did our system fail in governing the AI’s workload identity and permissions?”
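As a rough illustration of those three requirements (narrow scope, explicit authorization, and confirmation of intent), the sketch below treats an agent as just another workload identity with a small policy attached. Everything here is hypothetical and not drawn from Block's systems: the `AgentPolicy` type, the `authorize` function, the SPIFFE ID, and the action names are made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentPolicy:
    """Hypothetical per-workload policy: what it may do, and what needs a human."""
    allowed_actions: frozenset[str]
    needs_confirmation: frozenset[str] = frozenset()


# Hypothetical policy table keyed by the agent's workload identity.
POLICIES = {
    "spiffe://example.org/agents/support-bot": AgentPolicy(
        allowed_actions=frozenset({"tickets:read", "tickets:comment", "refunds:issue"}),
        needs_confirmation=frozenset({"refunds:issue"}),
    ),
}


def authorize(workload_id: str, action: str, user_confirmed: bool = False) -> str:
    """Return a decision string that doubles as an audit-log reason."""
    policy = POLICIES.get(workload_id)
    if policy is None:
        return "deny: unknown workload"
    if action not in policy.allowed_actions:
        return "deny: action not in scope"
    if action in policy.needs_confirmation and not user_confirmed:
        return "confirm: explicit user confirmation required"
    return "allow"
```

Because every decision comes back with a reason, a failure can be traced to a missing or overly broad policy entry rather than to "the AI."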

AI Agents Are Workloads That Need Identities

In their talk "AI agent communication across cloud providers with SPIFFE universal identities," Dan Choi, Senior Product Manager, AWS Cryptography, and Brendan Paul, Sr. Security Solutions Architect from AWS, highlighted that agentic AI systems are composed of workloads and need to communicate across clouds without ever touching long-lived secrets.

They said that if you can establish two-way trust between your authorization servers and your SPIFFE roots of trust, via SPIRE, you can treat SPIFFE Verifiable Identity Documents (SVIDs) as universal, short-lived identities for AI agents.

From there, they walked through some concrete use cases, including an AI agent acting on behalf of a user. In a demo framed as an “AI-enabled coffee shop,” they showed how you start with an authenticated web app, then propagate both user identity and workload identity so the agent can check inventory, update systems, and call tools with clear attribution and least privilege.

They stressed that you don’t need MCP or any particular orchestration framework for this; the pattern is always “get the agent an SVID, then exchange it for scoped cloud credentials,” whether you are interfacing with an S3 bucket or anything else. They closed by telling us that AI agents naturally span trust domains, and SPIFFE gives them a common identity fabric. Future work will refine how we do token proof-of-possession and delegation at scale for all of these non-human workloads.
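One way to picture the "get an SVID, then exchange it" pattern is as an OAuth 2.0 Token Exchange (RFC 8693) request. The sketch below only builds the request parameters and does not call any server. It is not what the AWS speakers demonstrated: the function names, audience, and scope value are hypothetical, and whether a given authorization server accepts a JWT-SVID as a `subject_token` or `actor_token` depends on the trust you have configured. Treating the user's token as an access token is also an assumption.

```python
from typing import Optional
from urllib.parse import urlencode

# Identifiers defined by RFC 8693.
TOKEN_EXCHANGE_GRANT = "urn:ietf:params:oauth:grant-type:token-exchange"
JWT_TOKEN_TYPE = "urn:ietf:params:oauth:token-type:jwt"
ACCESS_TOKEN_TYPE = "urn:ietf:params:oauth:token-type:access_token"


def build_token_exchange_params(
    agent_jwt_svid: str,
    audience: str,
    scope: str,
    user_token: Optional[str] = None,
) -> dict[str, str]:
    """Build RFC 8693 parameters for a workload, optionally acting for a user."""
    params = {
        "grant_type": TOKEN_EXCHANGE_GRANT,
        "requested_token_type": ACCESS_TOKEN_TYPE,
        "audience": audience,
        "scope": scope,  # keep this as narrow as the task allows
    }
    if user_token is None:
        # The workload acts for itself: its SVID is the subject.
        params["subject_token"] = agent_jwt_svid
        params["subject_token_type"] = JWT_TOKEN_TYPE
    else:
        # Delegation: the user is the subject, the agent is the actor.
        params["subject_token"] = user_token
        params["subject_token_type"] = ACCESS_TOKEN_TYPE  # assumption
        params["actor_token"] = agent_jwt_svid
        params["actor_token_type"] = JWT_TOKEN_TYPE
    return params


def encode_form(params: dict[str, str]) -> str:
    """Encode parameters as an application/x-www-form-urlencoded body."""
    return urlencode(params)
```

In the delegation case, the resulting token can carry both identities, which is what makes the "who triggered this, a user or an agent?" question answerable in logs.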

Where We Go From Here

If these talks are any indication, the next few years are about moving workload identity from heroic projects to boring infrastructure. SPIFFE/SPIRE, WIMSE, OAuth token-exchange patterns, and transaction tokens will quietly become the plumbing. This will define how we deploy CI/CD, microservices, and AI agents securely at scale for the next generation of applications and platforms.

Enterprises are realizing that “API keys in Git” and “service account sprawl” are no longer acceptable risks. Now is the time to adopt internal identity fabrics that attest workloads, issue short-lived credentials, centralize policy, and log everything, enriching those logs with as much context as possible. The UX lesson from all of these talks is that identity is currently painful, and people route around it. If we can do the work now to make workload identity automatic and invisible, teams will lean into it.

At the same time, agentic AI will force us to sharpen our thinking about representation and blame. We need to ask “What is this workload allowed to do, on whose behalf, and with what guardrails?” The future is building golden paths where every non-human identity, no matter what shape or function, comes pre-wired with a strong, attestable identity and tightly scoped access. The creative tension will be keeping that world flexible enough for experimentation, and safe enough that “exploding rockets” stay in test environments, not production.

No matter where you are on your path toward better NHI governance, everyone at Workload Identity Day Zero agreed that having insight into your current inventory of workloads and machine identities is mandatory. We at GitGuardian firmly believe that the future can mean fewer leaked secrets, better secrets management, and scalable NHI governance, but we can't get there without first understanding the scope of the issue in the org today. We would love to talk to you and your team about that.