David Spark - “Secrets such as passwords and credentials are out in the open just sitting in code repositories. They are not easily found by the developer appropriating the code, but malicious crooks intent on doing you harm will find them and use them to bypass your security controls. Why do these secrets even exist in public? What’s their danger? And how can they be found and removed?”

This article is a curated version of a discussion held during a Defense in Depth episode. A panel of cybersecurity experts, Allan Alford (CISO at NTT Data Services), David Spark (producer of CISO Series) and Jeremy Thomas (CEO of GitGuardian), discussed community comments on a LinkedIn post about leaked credentials in git.

This discussion focuses on the threat of secret sprawl inside git at two levels: first, corporate secrets leaked inside public repositories (both personal and professional public git repos), and second, secrets inside private repositories and the threat they create.

Why do secrets and credentials leak inside git?

David Dos Neves - Munich Re “Human error is nothing you can avoid and prevent, especially if it is not an error but just laziness, or even provoked, implement a risk based approach and simply add many layers to prevent it in your whole lifecycle”.

This quote from David captures much of what shaped the discussion around secrets inside git repositories. It is not just about technology; there is also a human component. Automating preventative and protective measures is key, but so is training.

While the panel agreed that calling it “laziness” or “provoked” is not necessarily helpful, the quote certainly highlights the human aspect of the topic.

Allan Alford - The vast majority of leaked credentials are mistakes and do not come from malicious intent. The reality, of course, is that there are a million and one reasons credentials get leaked. Hardcoding credentials can be a temporary solution that ends up becoming permanent. Sometimes developers don’t realize the repo is public, sometimes the developers are new, and sometimes it’s a test that was forgotten about. The list continues.
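One common way to avoid the "temporary hardcoded credential that becomes permanent" is to read secrets from the environment and fail loudly when they are missing. The following is a minimal sketch of that idea, not something prescribed by the panel; the function name `load_secret`, the exception `MissingSecretError`, and the variable name `PAYMENTS_API_KEY` are hypothetical.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not present in the environment."""


def load_secret(name: str) -> str:
    """Return the secret stored in the environment variable `name`.

    There is deliberately no hardcoded fallback value: a missing secret
    raises an error instead of tempting anyone to commit a default.
    """
    value = os.environ.get(name)
    if not value:
        raise MissingSecretError(f"environment variable {name} is not set")
    return value


if __name__ == "__main__":
    # Hypothetical variable name, used for illustration only.
    try:
        api_key = load_secret("PAYMENTS_API_KEY")
        print("secret loaded, length:", len(api_key))
    except MissingSecretError as err:
        print("configuration error:", err)
```

The secret itself then lives in the deployment environment or a secrets manager, and the repository only ever contains its name.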

Roland Gharfine - Security Consultant “your process needs to protect your employees from their own mistakes by design”

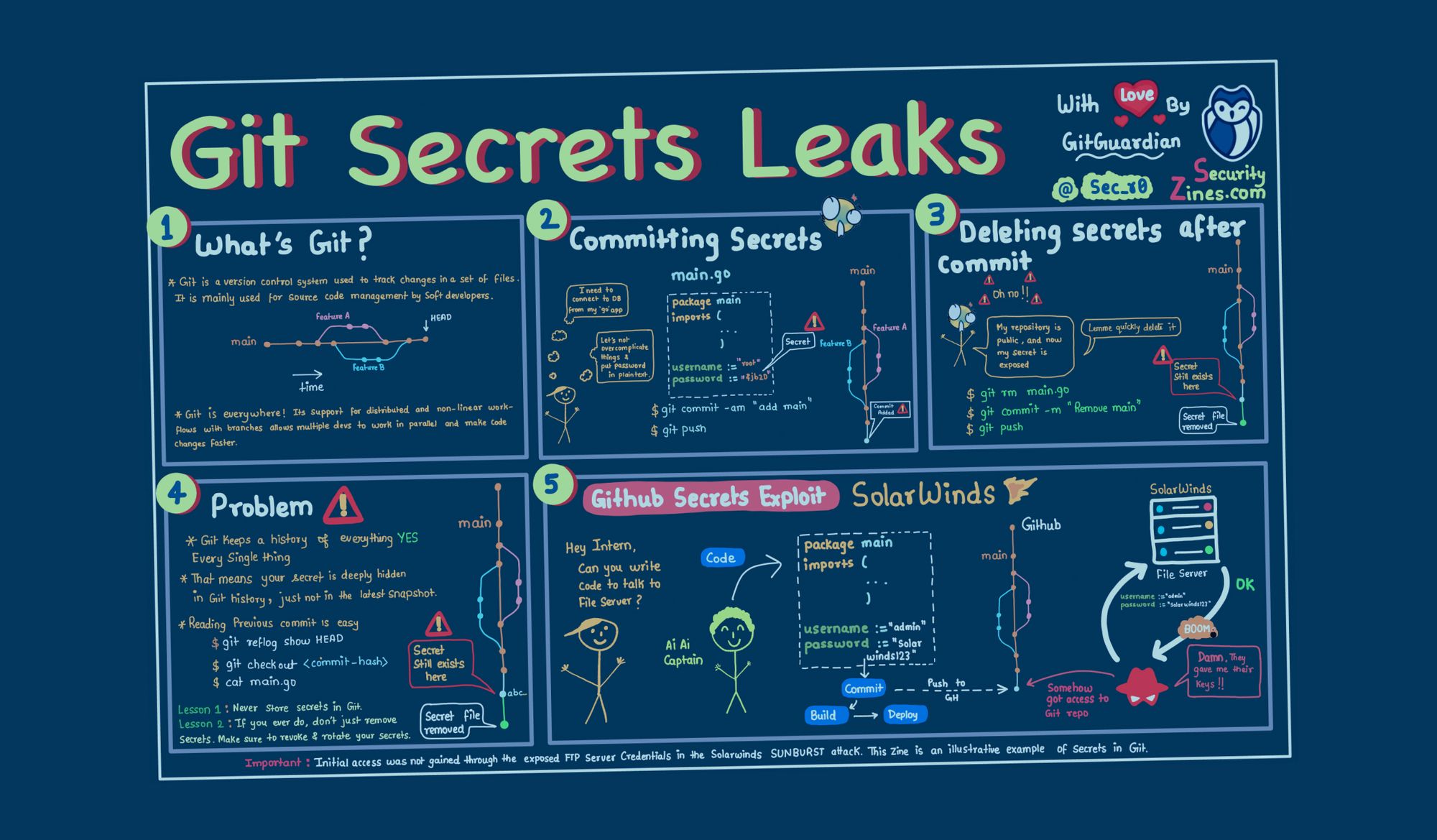

2023 Update: check out the amazing SecurityZine about secrets in git repositories to get a better grasp of why it's such a problem:

Jeremy Thomas - At GitGuardian, we observe many counterintuitive facts about leaked secrets. First and foremost, most leaks of corporate credentials actually happen on personal GitHub accounts, not on the accounts of the corporation. Personal public GitHub accounts are a place where companies have no authority to enforce any kind of preventive security measures. So we must assume that mistakes will happen; the question then becomes how we deal with them. Training is part of the answer and technology is part of the answer. Technology must be used to automate what can be automated.
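To make "automate what can be automated" concrete, here is a minimal sketch of pattern-based secrets detection. It is an illustration under stated assumptions, not a description of how GitGuardian works: the two patterns (the AWS access key ID format and PEM private key headers) are only examples, and the names `PATTERNS`, `Finding`, and `scan_text` are hypothetical. Real detectors cover far more secret types and validate their findings.

```python
import re
from typing import List, NamedTuple

# Illustrative patterns only (an assumption of this sketch).
PATTERNS = {
    # AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # PEM headers such as "-----BEGIN RSA PRIVATE KEY-----".
    "private_key_header": re.compile(r"-----BEGIN (?:[A-Z]+ )?PRIVATE KEY-----"),
}


class Finding(NamedTuple):
    line_number: int
    kind: str


def scan_text(text: str) -> List[Finding]:
    """Return one finding per (line, pattern) match, in line order."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for kind, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append(Finding(line_number, kind))
    return findings


if __name__ == "__main__":
    # AKIAIOSFODNN7EXAMPLE is the placeholder key used in AWS documentation.
    sample = 'region = "eu-west-1"\naws_key = "AKIAIOSFODNN7EXAMPLE"\n'
    for finding in scan_text(sample):
        print(f"line {finding.line_number}: possible {finding.kind}")
```

A check like this can run before code leaves a developer's machine, but it cannot reach personal accounts the company does not control, which is why training remains the other half of the answer.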

Edward Ziots - Aquanima “There is no one solution to solve this problem”

If (or when) secrets are leaked in GitHub, what protection layers can be implemented?

Allan Alford - Generally speaking, in a lot of environments, if your API key or credential is exposed, that's it: an attacker has access to that system. Once they are authenticated, it can be extremely difficult to distinguish between a valid user and a malicious user. Now, of course there are other protection layers we can place on top of a system, but once a secret has been exposed, it is forever compromised and needs to be revoked and rotated. Version control systems (VCS) like git give great insight into the history of a project and who is contributing. They do not, however, provide an adequate audit log to show who has accessed them. There is no way of knowing if someone has already discovered the secret or secrets, so simply removing it from the history or deleting the repository doesn’t remove the threat.
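The point that deleting a secret in a later commit does not remove the threat can be illustrated with a small sketch that reads the text produced by `git log -p` and reports every commit that added a line containing a secret, even if a newer commit removed it. This is a simplified illustration: the single pattern (the AWS access key ID format) and the function name `commits_introducing_secrets` are assumptions of the sketch.

```python
import re
from typing import List, Optional, Tuple

# Assumption: one illustrative pattern (the AWS access key ID format).
SECRET = re.compile(r"\bAKIA[0-9A-Z]{16}\b")
# `git log` starts each entry with "commit <hash>" at column zero.
COMMIT = re.compile(r"^commit ([0-9a-f]{7,40})")


def commits_introducing_secrets(log_patch: str) -> List[Tuple[Optional[str], str]]:
    """Return (commit hash, matched secret) pairs for added lines.

    `log_patch` is expected to be the text output of `git log -p`.
    """
    hits = []
    current: Optional[str] = None
    for line in log_patch.splitlines():
        match = COMMIT.match(line)
        if match:
            current = match.group(1)
            continue
        # Added lines start with "+"; "+++" is a file header, not content.
        if line.startswith("+") and not line.startswith("+++"):
            for secret in SECRET.findall(line):
                hits.append((current, secret))
    return hits


if __name__ == "__main__":
    import subprocess

    # Run inside a git repository; prints nothing if no pattern matches.
    output = subprocess.run(
        ["git", "log", "-p"], capture_output=True, text=True, check=True
    ).stdout
    for commit, secret in commits_introducing_secrets(output):
        print(f"{commit}: added a line containing {secret[:8]}...")
```

Any hit means the credential was available to everyone who cloned the repository at that point, so the remedy is still to revoke and rotate it, not just to clean the history.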

Allan Alford “if you leave your keys to your house in the lock and you notice they are gone then you change the locks”.

Update 2023: check out the best practices for the day you are faced with this situation (it happens to virtually everyone at some point!)

Who is responsible for scanning and alerting on leaked credentials and secrets?

Christopher Grange - Adapt Forward “The cloud provider should deliver notifications to the customer of the state of known bad connections practices so they have a choice”

There is some debate about who exactly should be responsible for alerting developers and account owners when a credential is exposed publicly. Some providers, including AWS, state that they go to “great lengths to protect your credentials”, as outlined in their shared responsibility document. But this opens the debate as to whether cloud providers do enough to protect your credentials from leaking or whether the responsibility falls back on the developer.

Allan Alford - If we look at the headlines, we can point to a now infamous breach involving Uber, where an employee committed secrets to his personal public GitHub account. In this case it gave access to an Amazon S3 bucket belonging to the company. Surprisingly, in addition to Uber, Amazon themselves were called to the mat by the media for being responsible for the leak, and there was even governmental posturing directed at Amazon. But now imagine if, instead of cloud storage, we change the topic to physical storage in the form of a hard drive. Is it the hard drive manufacturer's responsibility to stop you storing passwords or credentials on it? I would hope the answer would overwhelmingly be no.

Jeremy Thomas - There is no way that providers can be held responsible for credentials leaking in the public space or exposed in internal repositories. Certain providers have the ability to detect certain malevolent patterns. But this is not enough to prevent the abuse of credentials, and it does not mean it is OK to hardcode credentials in repos. We can use the analogy of banks. Of course it’s great that banks have anti-fraud systems in place to protect users from credit card fraud. But just because they have these systems doesn’t mean it is okay to store credit card numbers in plain text within databases. The same is true when it comes to managing credentials for service providers.

Now this is not to say that service providers shouldn’t do anything to help with the problem, but what they offer is not a complete and comprehensive solution for an entire environment.

Jeremy Thomas - Providing anomaly detection to detect fraudulent activity is complementary to GitGuardian because there are many ways to compromise credentials; they are not necessarily compromised because they were hardcoded in some insecure place. So the cloud provider might be able to detect some things that GitGuardian would not be able to detect. But on the other side, detecting credentials hardcoded in source code is important as well, because once an attacker has the credentials to act like a valid user, their activity can be extremely difficult to detect. So GitGuardian will detect things that won’t be detected using anomaly detection.

Jeremy Thomas - We also need to take a step back and look beyond just cloud providers. When we talk about secrets, the first environments that always come to mind are AWS, GCP or Azure. But secrets aren’t only used to manage cloud infrastructure; in fact it’s much broader than that. GitGuardian customers manage 150 different services on average. These include not just external microservices but also internal services, things like SaaS platforms, cloud infrastructure, databases and so on. All these services require secrets to be interconnected. If we lay the responsibility on cloud providers, we would also need to lay the responsibility on every service that uses a secret, which simply is not feasible.

Update 2023: the shared responsibility model is at the heart of DevSecOps; download the (free) white paper:

How big is the problem, actually?

Jason Keirstead, IBM - “For git and GitHub this is nearly a solved problem if only people would follow the best standard practices.”

Allan Alford - The “if only people followed best practices” argument outlined by Jason is heard frequently in security discussions. It could arguably be applied to absolutely every aspect of security. Even though we know intellectually that it is not realistic, it is still a valid point. To effectively promote best practices, security needs to be more than an afterthought; it needs to be embedded into the culture and cemented into the developers' concern matrix. Developers have deadlines, time pressures, a QA team on their heels. There are a bunch of variables and factors driving and pushing them, but only a few cultures have security as one of those driving forces. So the “if only” argument is actually a fair statement, and “if only” can be tackled to a large degree. But there are always going to be mistakes, so the “if only” is a really, really big “if only”.

Jeremy Thomas, GitGuardian “I would challenge anyone saying that this is a solved problem for any VCS provider.”

Jeremy Thomas - To get an idea of how big the problem remains, we can take an indication from the VCS providers themselves. Generally, an enterprise VCS is worth $20 per developer per month. Both GitHub and GitLab are now also packaging certain security features into a plan with a base price of $100 per developer per month. That's $80 per developer per month on top of just the VCS. What this shows us is that securing a VCS is worth four times as much as the VCS itself.

Fortunately, today, companies don’t need to fall back on the VCS itself to solve the problem. They now have the choice between an all-in-one platform with basic security capabilities, like those GitHub and GitLab are offering, or multiple niche vendors that are the best at what they do, for example Snyk for detecting vulnerabilities in dependencies and GitGuardian for detecting secrets in repositories.

Update 2023: To get an idea of the size of the problem, have a look at the State of Secrets Sprawl

What about Zero trust environments?

Abhishek Singh - Araali Networks “Eliminate the problem, authenticate your users and apps and allow privilege on a need to know basis. AKA zero trust. not because of possession of password.”

Steve Martinez - Driven Automation for the Cloud “If keys are being rotated in this fashion the issue of keys being left open or exposed on a developers desktop quickly becomes non issues.”

Jason Keirstead, IBM “The value of any secret is highly correlated to it’s half life.”

Allan Alford - In an ideal world, using zero trust and not relying on passwords and secrets alone is a fantastic practice to put in place. The same can be said for regularly rotating keys, and we should strive for this. But the reality is that this cannot be achieved with all secrets and is sadly not the current state of the world. When you factor in the hundreds of different environments being used, each with individual keys, some of these will allow for additional security layers to be added on top to achieve a zero-trust framework. But many will not have this capability.

Jeremy Thomas - It would, in fact, be great if API keys and passwords no longer existed, but the trends we are seeing show quite the opposite: developers are handling increasing numbers of secrets on a daily basis because the way software is developed has radically changed. Applications are no longer standalone monoliths; they rely on hundreds of internal and external services. Zero trust cannot be implemented on such a vast and ever-evolving perimeter. It is the current state of the world that needs to be secured, not the ideal state of the world.

Not all credentials can be made short-lived. In fact, the vast majority can’t, and behind a short-lived credential there’s always a long-lived one. It’s an infinite regress problem.
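The claim that a long-lived credential always sits behind a short-lived one can be seen in a minimal sketch of a token issuer. This is a toy scheme for illustration only, not a production token format and not something described by the panel; the names `issue_token` and `verify_token` and the `subject|expiry|signature` layout are assumptions. The token expires quickly, but the key that signs it has to live somewhere for much longer.

```python
import hashlib
import hmac
import time
from typing import Optional


def issue_token(long_lived_key: bytes, subject: str, ttl_seconds: int,
                now: Optional[float] = None) -> str:
    """Issue a token of the form "subject|expiry|signature"."""
    issued = time.time() if now is None else now
    expires_at = int(issued) + ttl_seconds
    payload = f"{subject}|{expires_at}"
    signature = hmac.new(long_lived_key, payload.encode(), hashlib.sha256).hexdigest()
    return f"{payload}|{signature}"


def verify_token(long_lived_key: bytes, token: str,
                 now: Optional[float] = None) -> bool:
    """Accept the token only if the signature matches and it has not expired."""
    try:
        subject, expires_at, signature = token.rsplit("|", 2)
        expiry = int(expires_at)
    except ValueError:
        return False
    payload = f"{subject}|{expires_at}"
    expected = hmac.new(long_lived_key, payload.encode(), hashlib.sha256).hexdigest()
    current = time.time() if now is None else now
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(signature.encode(), expected.encode()) and current < expiry


if __name__ == "__main__":
    # The signing key is itself a long-lived secret that must be protected.
    key = b"demo-signing-key-do-not-use"
    token = issue_token(key, "ci-job", ttl_seconds=60)
    print("valid now:", verify_token(key, token))
    print("valid in two minutes:", verify_token(key, token, now=time.time() + 120))
```

Leaking the short-lived token is a bounded problem; leaking the signing key is not, which is why rotation and short lifetimes reduce the risk without eliminating the need to detect exposed secrets.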

The issue of secrets shared in messaging systems

Jeremy Thomas - A prominent pentester recently shared with me some of his strategies and playbooks. It was extremely interesting to find that his favourite playbook was to gain initial access to the internal messaging system, Slack for example, by using clever phishing techniques. From there he was able to move laterally everywhere because of the secrets he could harvest in the messaging system. This shows the extent of the problem with secrets being exposed. It is not just that they are hardcoded in source code; there are hundreds of secrets in our messaging systems today.

People always need to pass a secret key or password, and we are looking for secure ways to do so. But often they get sent through email or through Slack. Unfortunately, this is the current state of the world that needs to be secured. In the past and still today, secrets have been shared everywhere: stored in plain text in a VCS, sent via messaging platforms like Slack, and fed into scripts that show up in the SIEM. If you hold onto history and revisions, these will exist long after they are deleted.
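Secrets pasted into chat messages rarely follow a single known format, so one generic heuristic is to flag long tokens whose characters look random. The sketch below uses Shannon entropy for that. It is an illustrative technique chosen for this example, not something the panel described; the function names and the thresholds (`min_length=20`, `threshold=3.5` bits per character) are assumptions that would need tuning against real data.

```python
import math
import re
from collections import Counter
from typing import List

# Characters commonly found in keys and base64-like strings.
TOKEN = re.compile(r"[A-Za-z0-9+/=_-]+")


def shannon_entropy(text: str) -> float:
    """Return the Shannon entropy of `text` in bits per character."""
    if not text:
        return 0.0
    counts = Counter(text)
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def find_high_entropy_tokens(message: str, min_length: int = 20,
                             threshold: float = 3.5) -> List[str]:
    """Return tokens that are long and random-looking enough to be secrets."""
    return [
        token
        for token in TOKEN.findall(message)
        if len(token) >= min_length and shannon_entropy(token) >= threshold
    ]


if __name__ == "__main__":
    # Made-up message and token, for illustration only.
    chat_message = "here is the key: q7Zr2LxK9vTb4WmN8sYc1HdF thanks"
    for token in find_high_entropy_tokens(chat_message):
        print("possible secret:", token[:6] + "...")
```

Entropy checks produce false positives (hashes, identifiers) and miss low-entropy passwords, so they complement pattern-based detection rather than replace it.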

Closing Notes

Jeremy Thomas says it best: “Source code is an asset that is leaky in nature, it’s made to be cloned in multiple places and you never know where it’s going to end up.”

You need to have good practices and security embedded into the culture and the developers' concern matrix. But despite this, the problem of secrets leaking is, at its core, a human issue, which makes it very hard to eliminate. Ultimately the responsibility falls on developers and organizations to implement safeguards in a way that protects developers from themselves. Secrets don’t just end up in a VCS like git. They are also passed anywhere code is passed: on messaging platforms, in Jira tickets, in wikis, on servers, and on personal or professional workstations. You must make sure secrets aren’t stored in places that aren’t specifically designed to hold them.

About the speakers

Allan Alford

High-Energy Executive Fusing Enterprise Security with Product & Services Security. 20+ Years of Leadership Experience in the following industries: Telecommunications, Data Services, Tech, Consultancies, Security, Startups, Legal, Education.

Leverages startup and intrapreneurial leadership experiences to streamline costs and grow revenue.

David Spark

Owner of CISO Series, a media brand specializing in cybersecurity. We currently have four shows on our network (three podcasts and a weekly live video chat). Also owner of Spark Media Solutions, a B2B content marketing agency for the tech industry.

Jeremy Thomas

Co-founder and CEO of GitGuardian, a company that helps developers and security teams secure their modern software development process with automated secrets detection.