The idea of credential theft is not new: it has long been a well-known adversary technique described in the MITRE ATT&CK framework. In recent years, data breaches caused by secrets (credentials such as API keys, passwords, and private keys) leaked inside git repositories, both private and public, have become a real threat. Thanks to high-profile cases such as Uber’s data breaches in 2014 and 2016, we are now all aware of the risk of exposing secrets in git repositories. We should all agree that automated scanning of code for secrets is now essential in modern software development.

Now that the problem is widely recognized, there are tools available to help implement automated secrets detection in the DevOps pipeline. These include commercial tools like GitGuardian and open-source solutions like gitleaks or truffleHog, but there is also a third option: building a custom internal solution. This article won’t offer an opinion on which way is best; instead, we will look at the case study of SAP and explore the decisions and challenges they faced when building their own internal credential scanning solution.

The challenge of scanning git repos for secrets

Large organizations can be segmented into hundreds of development teams, each with hundreds of git repositories containing thousands of commits, which adds up to a huge amount of data. You also need an effective way to communicate with relevant stakeholders, open threat cases, and track their progress until each threat is mitigated. Let’s look at each component needed for an effective in-house solution that scans repos for secrets.

- Detection method - What method of scanning will you implement (entropy or regex)?

- Data extraction and scanning method - How will you break apart and scan data efficiently?

- Post validation - How will you verify the results?

- Reporting - To whom, and how, will you report potential leaks?

- Remediation - What information and remediation support will you provide?

- Tracking - How will you track the progress of the threat?

SAP security automation as a case study: how to scan GitHub repos for secrets

As mentioned above, this article looks at SAP and reviews the decisions and challenges they faced in implementing their in-house secrets detection solution.

SAP is one of the largest enterprise software vendors in the world, with 30,000 developers. As you can imagine, that many developers produce a huge number of git repositories: to date, SAP has 250,000 repositories and roughly 5 TB of compressed source code.

SAP has been pushing for more inner-source collaboration, meaning they want to open internal repositories to all employees. To support this, they decided to implement automated scanning to detect any secrets inside these repositories and mitigate the resulting threats.

As Tobias Gabriel from SAP stated:

“It happens, and probably has already happened to everyone [sic] of you, myself included, that you by accident committed some file, pushed it up and never come [sic] back to clean it so you have some credentials leaked there. While in private repositories this is not critical, since we are opening up the repositories we wanted to reduce the risk there as much as possible”.

Detection method: how to find credentials in git at the scale of SAP, where open source scanners like gitleaks fail

Nikolas Krätzschmar of SAP, who led the development of the scanner, said the team evaluated several open-source solutions, such as gitleaks and truffleHog.

“Scanning for credentials inside git is not a new problem so there exists a handful of tools already out there.”

One of the tools evaluated was gitleaks, which, as Nikolas acknowledged, uses a more sophisticated detection method that combines entropy measurements with regular expressions. However, from a computing performance perspective, Nikolas said the tools “were simply not performative enough at our scale”. So the team decided to build an in-house solution “that took inspiration from existing tools but had a more performance approach”.

Nikolas did not say if any enterprise tools were evaluated in the process.

To increase the precision of the scanner, Nikolas explains, they “opted to limit our search to just static patterns that could be easily identified by regular expressions as this appears to be sufficient for most types of our authentication tokens”. For example, AWS keys and Stripe keys each begin with a fixed leading character sequence, and Google certificates contain constant fields that can be matched against.

In total, the SAP scanner could identify five credential types:

- AWS keys

- GCP Certificates

- Private keys

- Slack tokens and webhook URLs

- Bitcoin keys

(For comparison, the GitGuardian scanner detects 200 different types of API secrets.)
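To make the “static patterns” idea concrete, here is a minimal Python sketch of prefix- and constant-field-based matching. The pattern names, the regular expressions, and the `scan_blob` helper are illustrative assumptions, not SAP’s actual rules; real rules would need tuning against each provider’s documented token formats.

```python
import re
from typing import Dict, List, Tuple

# Illustrative, line-oriented patterns for some of the credential types listed
# above. These are assumptions for demonstration, not SAP's production rules.
PATTERNS: Dict[str, re.Pattern] = {
    # AWS access key IDs start with a fixed prefix such as AKIA or ASIA.
    "aws_access_key_id": re.compile(rb"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    # Slack incoming webhook URLs: team ID, service ID, then the secret part.
    "slack_webhook_url": re.compile(
        rb"https://hooks\.slack\.com/services/T[A-Za-z0-9]+/B[A-Za-z0-9]+/[A-Za-z0-9]+"
    ),
    # Slack API tokens share an "xox?-" prefix.
    "slack_token": re.compile(rb"\bxox[abprs]-[0-9A-Za-z-]{10,}"),
    # GCP service-account key files contain this constant JSON field.
    "gcp_service_account": re.compile(rb'"type"\s*:\s*"service_account"'),
    # PEM private key headers.
    "private_key": re.compile(rb"-----BEGIN (?:RSA |EC |DSA |OPENSSH )?PRIVATE KEY-----"),
}


def scan_blob(data: bytes) -> List[Tuple[str, bytes]]:
    """Return (pattern name, matched bytes) for every match in one blob."""
    findings: List[Tuple[str, bytes]] = []
    for name, pattern in PATTERNS.items():
        for match in pattern.finditer(data):
            findings.append((name, match.group(0)))
    return findings
```

Because every pattern anchors on a fixed prefix or constant field, a match is very likely to be a real credential of that type, which is the precision-over-coverage trade-off described next.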

The advantage of this method is a high percentage of true positives. The obvious drawback is the very limited scope of secrets that can be detected. Adequate detection needs to cover all, or at the very least the majority, of the secrets used in the organization. While this varies from project to project, it is normal for large projects to use hundreds of different services, resulting in hundreds of different secret types.

Data extraction and scanning method: how to scan GitHub repositories for secrets, when there are tens of thousands of them

One of the first major problems they faced was how to extract all the data from the repositories. Git offers two main ways of doing this: extracting the patches from the history, or extracting entire blobs.

| Method | Command | What it extracts |
| --- | --- | --- |
| Patches | `git log --full-history --all -p` | The full history, with the patch (diff) between each commit |
| Blobs | `git rev-list --all --objects --in-commit-order \|....\| git cat-file --batch` | The entire file for each change, rather than the patch |

Extracting only patches has the clear advantage of a drastically reduced output size, but it comes at a cost in time, because Git has to compute the difference between file versions for every commit.
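The blob approach can be sketched in Python as below. This is a minimal illustration under stated assumptions: it bridges the two commands by keeping only the object ID from each `rev-list` line (the intermediate step of the original pipeline is elided, so this is one possible choice, not necessarily SAP’s), it buffers everything in memory rather than streaming, and the helper names `parse_cat_file_batch` and `iter_blobs` are hypothetical.

```python
import subprocess
from typing import Iterator, Tuple


def parse_cat_file_batch(stream: bytes) -> Iterator[Tuple[str, str, bytes]]:
    """Parse `git cat-file --batch` output.

    Each object is emitted as "<oid> <type> <size>\\n<content>\\n"; objects
    that cannot be found are reported as "<name> missing\\n" and skipped.
    """
    pos = 0
    while pos < len(stream):
        newline = stream.index(b"\n", pos)
        parts = stream[pos:newline].decode().split()
        if len(parts) == 2 and parts[1] == "missing":
            pos = newline + 1
            continue
        oid, obj_type, size = parts[0], parts[1], int(parts[2])
        start = newline + 1
        yield oid, obj_type, stream[start:start + size]
        pos = start + size + 1  # skip the newline that follows the content


def iter_blobs(repo_path: str) -> Iterator[Tuple[str, bytes]]:
    """Yield (oid, content) for every blob reachable from any ref."""
    rev_list = subprocess.run(
        ["git", "-C", repo_path, "rev-list", "--all", "--objects", "--in-commit-order"],
        capture_output=True, check=True,
    )
    # rev-list prints "<oid> <path>" for blobs and trees; cat-file needs only the oid.
    oids = b"".join(
        line.split(b" ", 1)[0] + b"\n" for line in rev_list.stdout.splitlines() if line
    )
    cat_file = subprocess.run(
        ["git", "-C", repo_path, "cat-file", "--batch"],
        input=oids, capture_output=True, check=True,
    )
    for oid, obj_type, content in parse_cat_file_batch(cat_file.stdout):
        if obj_type == "blob":  # commits and trees are not scanned
            yield oid, content
```

Note that no diffs are computed anywhere in this path: Git only has to look up and decompress objects, which is why blob extraction can be faster even though it produces more data.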

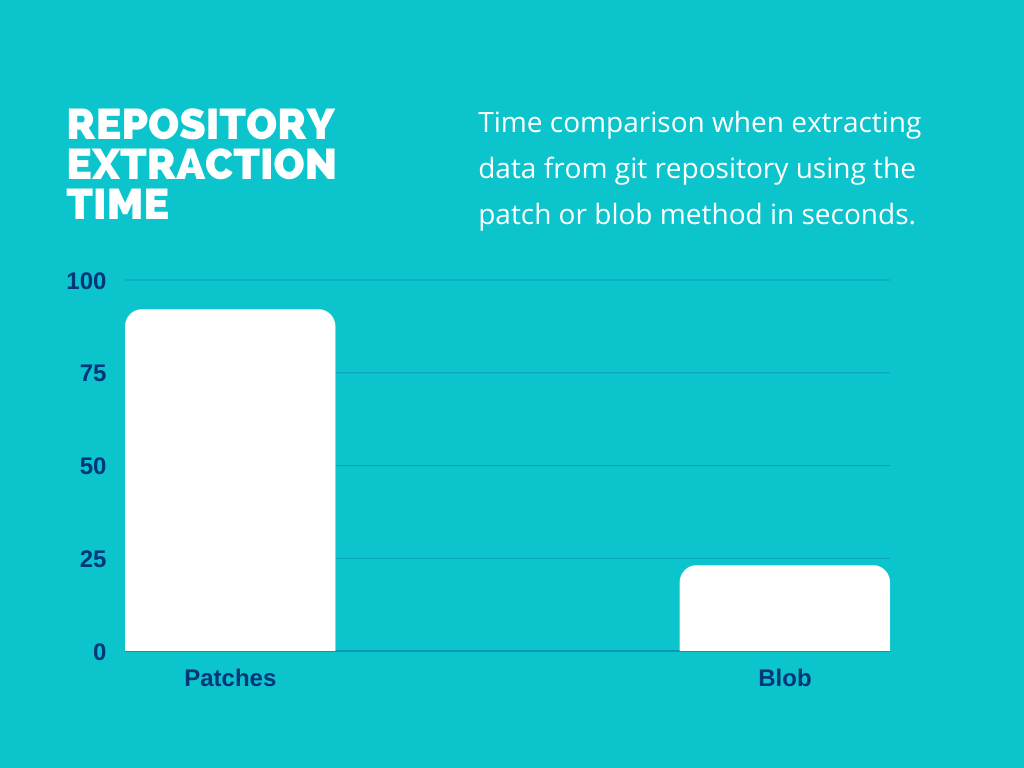

To decide which scanning method to use, SAP used a random sample of 100 repositories to evaluate the performance of each method.

In the test data set, extraction took 92 seconds with the patch method compared to just 23 seconds with the blob method. However, because the blob method generates a much larger output, the choice between them depends largely on how much data the scanner can process per second.

Pattern matching

The next major challenge that needed to be overcome was deciding what tools to use for the regular expression pattern matching.

“During some initial research we found out that standard grep was simply not up to the task primarily due to its lack of multiline pattern support” Nikolas Krätzschmar

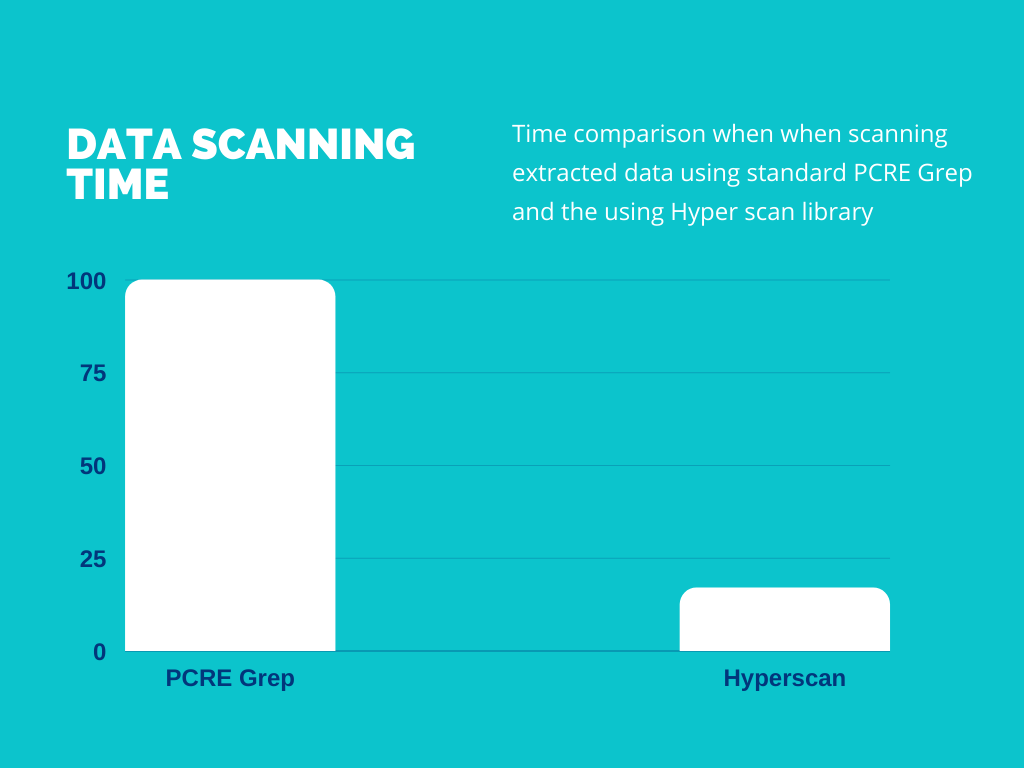

In the end, the team chose PCRE (Perl Compatible Regular Expressions) grep because it supports multiline patterns as well as some more complex patterns. With it, they were able to scan all the extracted blobs from the 100 test repositories in just over 100 seconds.
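The multiline problem is easiest to see with a private key, whose header, body, and footer sit on separate lines. The sketch below uses Python’s `re` module as a stand-in for PCRE (both support the lazy quantifier and backreference used here); the pattern and helper names are illustrative assumptions. Matching against the whole blob finds the block, while matching each line in isolation, as a line-oriented grep would, finds nothing.

```python
import re
from typing import List

# A private key block spans several lines, so the pattern must match across
# newlines: re.DOTALL lets "." match "\n".
PRIVATE_KEY_BLOCK = re.compile(
    rb"-----BEGIN ((?:RSA |EC |DSA |OPENSSH )?PRIVATE KEY)-----"
    rb".+?"                  # key body, matched lazily
    rb"-----END \1-----",    # the END label must repeat the BEGIN label
    re.DOTALL,
)


def find_key_blocks(blob: bytes) -> List[bytes]:
    """Match against the whole blob, so multi-line blocks are found."""
    return [m.group(0) for m in PRIVATE_KEY_BLOCK.finditer(blob)]


def find_key_blocks_line_by_line(blob: bytes) -> List[bytes]:
    """What a line-oriented tool sees: each line in isolation."""
    return [
        m.group(0)
        for line in blob.splitlines()
        for m in PRIVATE_KEY_BLOCK.finditer(line)
    ]
```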

With some support from the GitHub professional services team, they also introduced a second tool: Intel’s Hyperscan library.

“This is a high performance regex library that works by pre-compiling patterns and tuning them to a specific CPUs microarchitecture by using vector instructions and some other magic optimizations” Nikolas Krätzschmar

This library delivered a huge performance boost, bringing the time to scan the 100 repositories down from about 100 seconds to just 17 seconds.

These results show that scanner throughput is not the limiting factor, so the better of the two extraction options is the cat (blob) method.

“Therefore the option of just putting all objects using the cat method should be preferable because the slightly longer scanning time needed to perform pattern matching on the additional data is more than made up for by the time saved not computing difference between files.” Nikolas Krätzschmar

Putting both together, it takes 40 seconds (23 seconds of extraction plus 17 seconds of scanning) to extract and scan all the contents of the 100 test repositories.

Reducing scan load

Another observation made by the SAP team was that a large amount of time was spent on a select few files. These were all binary blobs or other auto-generated files that probably shouldn’t have been checked into git in the first place. After evaluating them, the team found these files had a low likelihood of containing secrets, so they decided to ignore them. This was implemented as a filter that skips any file larger than 1 MB, which reduced the run time from 40 seconds to just 22.
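One way to apply such a size filter before any file content is read is to ask Git for object sizes first. This sketch assumes the default `git cat-file --batch-check` output format (`<oid> <type> <size>`); the exact byte value behind “1 MB” is not stated, so `2**20` is a guess, and `select_blobs_to_scan` is a hypothetical helper name.

```python
from typing import List

# Assumed threshold: the article says files over 1 MB were skipped; whether
# that means 10**6 or 2**20 bytes is not stated, so 2**20 is a guess here.
MAX_BLOB_SIZE = 1024 * 1024


def select_blobs_to_scan(batch_check_output: str, max_size: int = MAX_BLOB_SIZE) -> List[str]:
    """Pick blob IDs worth scanning from `git cat-file --batch-check` output.

    Default --batch-check lines look like "<oid> <type> <size>"; unknown
    objects appear as "<name> missing" and are skipped.
    """
    selected: List[str] = []
    for line in batch_check_output.splitlines():
        parts = line.split()
        if len(parts) != 3:
            continue  # "missing" or otherwise unexpected line
        oid, obj_type, size = parts
        if obj_type == "blob" and int(size) <= max_size:
            selected.append(oid)
    return selected
```

The returned IDs can then be fed to `git cat-file --batch`, so oversized blobs are never loaded at all.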

Centralizing the data

Now we arrive at the third challenge of scanning for secrets: how do you centralize all the data from 250,000 repositories?

“First we considered cloning them to a separate machine and scanning them there like we did for the test repositories. But as you can imagine that quickly ran into a couple of issues. Primarily how to get access to all the repositories, including the private ones, but more importantly this approach would be equivalent to ddosing our own github instance.” Nikolas Krätzschmar

Not only would this have to be repeated for every scan, it would also take much longer than the scanning itself. Luckily, SAP already had a centralized location holding all of the data: the backup server.

“Running on the backup we have direct access to all repositories, while at the same time, not taking away resources on the GitHub production instance.” Nikolas Krätzschmar

The actual scanning was run in parallel on a per-repository basis. The main thread retrieved the list of repositories to scan and assigned them to the worker subprocesses in round-robin fashion. This achieved good enough load balancing that the team saw no need to implement more complicated scheduling techniques.
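The round-robin scheme is simple enough to sketch with Python’s `multiprocessing` module. The function names are hypothetical and `scan_repository` is a placeholder for the extraction and matching steps; the article does not say how SAP implemented its workers, so treat this only as an illustration of static round-robin assignment.

```python
from multiprocessing import Pool
from typing import List, Sequence


def round_robin(items: Sequence[str], n_workers: int) -> List[List[str]]:
    """Deal items out like cards: worker i gets items i, i+n, i+2n, ..."""
    return [list(items[i::n_workers]) for i in range(n_workers)]


def scan_repository(repo_path: str) -> List[str]:
    """Hypothetical placeholder: extract the repository's blobs and scan them."""
    return []


def scan_batch(repo_paths: List[str]) -> List[str]:
    """Runs inside one worker process: scan its assigned repositories in turn."""
    findings: List[str] = []
    for repo_path in repo_paths:
        findings.extend(scan_repository(repo_path))
    return findings


def scan_all(repo_paths: Sequence[str], n_workers: int) -> List[str]:
    """Main process: assign repositories round-robin, then collect all findings."""
    batches = round_robin(repo_paths, n_workers)
    with Pool(processes=n_workers) as pool:
        per_worker = pool.map(scan_batch, batches)
    return [finding for batch in per_worker for finding in batch]
```

A static split like this works well when there are many repositories of mixed sizes, because each worker ends up with a similar total load on average; if a few very large repositories dominated, handing out repositories one at a time from a shared queue would balance better.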

With the setup running 128 worker threads, a full scan of the entire 5 TB of compressed repository data took four hours, leaving the team with a list of findings, each one potentially a leaked secret.

Post processing: how to check the validity of credentials before sending alerts

With the scanner in place and running on a daily basis, the team was able to get a list of secrets that matched their regex patterns.

“Probably to no one's surprise that we found quite a few secrets, much more than one. In fact so many that we did not want to implement a follow up process.” Tobias Gabriel

So many secrets were discovered that an additional step was needed to determine whether each one was actually valid. The team decided that, wherever possible, they would test each key.

Here is an actual example from SAP.

Below are two Slack tokens discovered using the SAP scanner. At the time one was valid and one was not. But without testing the keys there is no way of distinguishing between them.

https://hooks.slack.com/services/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 (Valid example)

https://hooks.slack.com/services/T027ZUPDT/B06fc8xln/ERIOXW7KQH7NHAQHGOVSDQB4 (Invalid example)

Slack webhook URLs contain the secret value at the end, so the URL alone is enough to post a message to a channel; no additional information is needed.

“What you can do is just try and send a message to them and see if it's valid, if you get an error message then you know that it is invalid.”

“In our case the majority of the web hooks that were matched were in fact valid and active.” Tobias Gabriel
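A minimal Python sketch of this check follows, using only the standard library. It assumes that Slack answers a working incoming webhook with HTTP 200 and the body `ok`, and treats any other client-side HTTP response as a rejected webhook; network failures and server errors are reported as inconclusive. Note that on a live webhook this really posts a message to the channel. The function names are hypothetical.

```python
import json
import urllib.error
import urllib.request
from typing import Optional


def is_live_webhook_response(status: int, body: str) -> bool:
    """Assumes Slack answers a working incoming webhook with HTTP 200 and body "ok"."""
    return status == 200 and body.strip() == "ok"


def check_slack_webhook(url: str, timeout: float = 10.0) -> Optional[bool]:
    """Return True if the webhook accepted the message, False if it was
    rejected, or None if the result is inconclusive.

    Caution: on a live webhook this posts a real message to the channel.
    """
    payload = json.dumps({"text": "Automated secret-scanning validity check"}).encode()
    request = urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return is_live_webhook_response(response.status, response.read().decode())
    except urllib.error.HTTPError as err:
        # HTTPError must be caught before OSError, of which it is a subclass.
        if err.code >= 500:
            return None  # server-side problem: says nothing about the webhook
        return is_live_webhook_response(err.code, err.read().decode(errors="replace"))
    except OSError:
        return None  # URLError, DNS failure, timeout, and similar network errors
```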

The same verification process can also be applied to other service credentials, such as AWS cloud tokens and GCP certificates.

Mitigation: how to route the alerts to the right people

At this point, we have a scanner that runs on the backup server and returns a list of credential candidates, which are then verified. The result is a list of leaked credentials.

The next challenge SAP faced was that, with so many results and so many development teams, reporting leaks manually would create a large workload. Instead, the team implemented a full service, the Audit Service, which takes the findings and automatically notifies the responsible service owners. If the owner of the account (for instance, the AWS cloud account owner) could be identified, they were notified directly; for more generic keys such as private keys, the team opted to notify the repository owner.
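The routing rule described above (notify the account owner when the account can be identified, otherwise fall back to the repository owner) can be sketched as follows. The `Finding` fields and the two owner lookup tables are hypothetical stand-ins for whatever inventory data an organization actually has; the article does not describe the internals of the Audit Service.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Finding:
    kind: str                          # e.g. "aws_access_key_id", "private_key"
    repo: str                          # repository the secret was found in
    account_id: Optional[str] = None   # owning cloud account, if it could be resolved


def route_finding(
    finding: Finding,
    account_owners: Dict[str, str],
    repo_owners: Dict[str, str],
) -> Optional[str]:
    """Return who should be notified, or None if nobody can be identified."""
    # Prefer the owner of the affected account, who can rotate the credential.
    if finding.account_id is not None and finding.account_id in account_owners:
        return account_owners[finding.account_id]
    # Generic secrets (e.g. private keys) fall back to the repository owner.
    return repo_owners.get(finding.repo)
```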

Revalidation: how to implement controls to check that leaked credentials were properly remediated

The very last step is crucial to the maintenance and effectiveness of the tool: every day, all credentials and potential pattern matches are automatically revalidated.

The results are checked again, this time to confirm whether the credentials have indeed been remediated.

“This takes the burden from the development colleagues to manually mark credentials as mitigated or not and don’t bug teams about credentials that are no longer valid. We can also make sure that if credentials do not get mitigated in time we can take a look at this ourselves.” Tobias Gabriel
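A daily revalidation pass might look like the sketch below. It assumes a `still_valid` callback (for instance, one of the validity checks from the post-processing step) and a hypothetical 14-day grace period before a finding is escalated to the security team; the article does not state SAP’s actual deadline or data model.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, List


@dataclass
class OpenFinding:
    finding_id: str
    opened_on: date
    status: str = "open"


def revalidate(
    findings: List[OpenFinding],
    still_valid: Callable[[OpenFinding], bool],
    today: date,
    grace_days: int = 14,  # hypothetical deadline, not from the article
) -> List[str]:
    """Close findings whose credential no longer works; return overdue IDs."""
    overdue: List[str] = []
    for finding in findings:
        if finding.status != "open":
            continue
        if not still_valid(finding):
            # Credential is dead: mark it remediated without bothering the team.
            finding.status = "remediated"
        elif (today - finding.opened_on).days > grace_days:
            # Still live after the deadline: hand over for manual follow-up.
            overdue.append(finding.finding_id)
    return overdue
```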

Feedback about automating secrets detection and remediation at the scale of SAP

One of the most interesting insights in this case study is the feedback from colleagues: what worked well and what needed to be improved.

“The most important thing we noticed is that you need to try and have as few false positives as possible.” Tobias Gabriel

Most of us have probably dealt with a security scanner that sends out 300 messages, only 2 of which turn out to be valid. The result is that development teams end up ignoring the messages, so it was of the highest importance to SAP to make the results as accurate as possible.

The key takeaways from the SAP team for increasing efficiency were:

- Verify credentials as soon as you have them, including checking them against a central service where one exists for the service in question.

- Ignore results from dependency folders. Many dependencies, such as those in the vendor folder for Go or the node_modules folder for Node.js, contained flagged secrets, and the team decided to ignore them. The reasoning was that these leaked credentials were probably imported from other sources like github.com; if they are valid credentials, they should already have been mitigated at the source.
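A dependency-folder filter can be applied to the path recorded for each blob before scanning. This sketch assumes the conventional folder names `vendor` (Go) and `node_modules` (Node.js); the set and the helper name `is_dependency_path` are illustrative, and only directory components are checked so that a file that merely happens to be called `vendor` is still scanned.

```python
from pathlib import PurePosixPath

# Assumed dependency folder names; extend per ecosystem as needed.
DEPENDENCY_DIRS = frozenset({"vendor", "node_modules"})


def is_dependency_path(path: str) -> bool:
    """True if any *directory* in the git path is a dependency folder."""
    directories = PurePosixPath(path).parts[:-1]  # drop the file name itself
    return any(part in DEPENDENCY_DIRS for part in directories)
```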

The second really interesting takeaway was the response to the notifications.

“The first question we receive is yeah…. And what should we do now?” Tobias Gabriel

It was important to include relevant guides in the notification, such as how to check the cloud account logs and how to rotate the relevant cloud credentials.

The team also found it very important to give developers someone to contact if they have questions or notice abuse of the account, so that the issue can be escalated and handled properly instead of ending up in a void.

Conclusion from GitGuardian

Using SAP as a case study provides great insight into the complexity, challenges, and considerations involved in deciding to build an in-house secrets detection solution. It is a complex undertaking that needs to be constantly maintained and monitored, and it requires a dedicated team to help with mitigation and keep the tool maintained. The steps must include an effective detection process, a centralized and extracted data set, an efficient scanning method, post-processing and validation of the results, dedicated automated notification, and revalidation of the credentials to keep track of progress.

Before undertaking such a project yourself, you should always evaluate the available solutions, including open-source options such as truffleHog or gitleaks as well as commercial applications such as GitGuardian.
