In our latest State of Secrets Sprawl, we shed light on some interesting findings about secrets accidentally published to public repositories over the past year.

While the sheer number of secrets we discovered on GitHub was certainly noteworthy, what really caught our attention this year was what happened after we identified the exposed secrets. In this blog, we are going to explore the concept of zombie leaks and why they deserve more attention.

What's a Zombie Leak?

We coined this term after a startling (yet somewhat unsurprising) observation: repository owners often react to a sensitive leak by either deleting the repository or making it private, with the idea of cutting off public access to the problematic information.

The problem is that in doing so, they can open a major security gap for themselves or the organization they work for: they create a “zombie leak.”

A zombie leak occurs when a secret is exposed but not revoked, remaining a potential attack vector. The commit author may believe that deleting the commit or repository is sufficient, overlooking the crucial revocation step.

Let's be clear from the outset: the only way to neutralize the risk of a leaked secret is to remove every permission associated with it. That's exactly what revoking means in this context. Once revoked, the secret is as harmless as any other character string.

Deleting the leak, on the other hand, does nothing for your security posture, since you have no control over how far the secret has already "sprawled" (yes, even if it was leaked within a "private" perimeter). Worse, it can make the work of security specialists looking to 'patch' this kind of vulnerability harder, if not impossible, because the leak is now invisible.

Imagine this scenario: a software engineer accidentally commits a .env file containing their company's production secrets to their personal GitHub repository. Quick to realize their mistake, they make the repository private. Feeling guilty and ashamed, they are reluctant to disclose the incident to their security team and reckon that they reacted fast enough to rule out any compromise. Who would be able to find this needle in the haystack that is GitHub anyway?

Months later, the company's security team is conducting an audit to evaluate its exposure on GitHub. To that end, they send a security questionnaire to all the software engineers, requiring them to declare whether they have a GitHub account. Based on that, the security team then proceeds to scan all public repositories for leaked credentials. Here is the thing: they are going to miss the leaked .env since that file has now disappeared from the public part of the platform. This vulnerability has now escaped their radar unless they are using a tool able to query the global GitHub history.

On the other hand, a malicious actor could have detected the .env file and the credentials hard-coded in it before it disappeared (our stats show there's a more than 50% chance that a file with this extension contains valid secrets). They now have plenty of options to exploit those credentials to achieve their objectives.

This is a textbook example of a zombie leak: the secret is out there, but nobody with good intentions is actively monitoring it or taking steps to revoke it.
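To make the scenario concrete, here is a minimal sketch of how a scanner might flag secret-looking entries in a leaked .env file. The key-name hints and the `find_suspect_entries` helper are illustrative assumptions of ours, not GitGuardian's detection logic; real detectors rely on far richer patterns and on validity checks.

```python
import re

# Hypothetical hints: key names that often hold credentials (assumption for illustration).
SUSPECT_HINTS = ("SECRET", "TOKEN", "PASSWORD", "API_KEY", "PRIVATE_KEY")

# Matches simple KEY=VALUE lines, with an optional leading "export ".
ENV_LINE = re.compile(r"^\s*(?:export\s+)?([A-Za-z_][A-Za-z0-9_]*)\s*=\s*(.*?)\s*$")


def find_suspect_entries(env_text: str) -> list[str]:
    """Return the names of keys that look like they hold a non-empty secret."""
    suspects = []
    for line in env_text.splitlines():
        if line.lstrip().startswith("#"):
            continue  # skip comment lines
        match = ENV_LINE.match(line)
        if not match:
            continue  # skip blank or malformed lines
        key = match.group(1)
        value = match.group(2).strip("'\"")  # drop surrounding quotes
        if value and any(hint in key.upper() for hint in SUSPECT_HINTS):
            suspects.append(key)
    return suspects


# Made-up sample content; none of these values are real credentials.
sample = """
# production settings
DEBUG=false
DATABASE_PASSWORD="not-a-real-password"
PAYMENT_API_KEY=not-a-real-key
EMPTY_TOKEN=
"""
print(find_suspect_entries(sample))  # ['DATABASE_PASSWORD', 'PAYMENT_API_KEY']
```

Anyone who cloned or indexed the repository before it went private can run a check like this at their leisure, which is why hiding the file changes nothing.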

Zombie Leaks are Probably Very Common

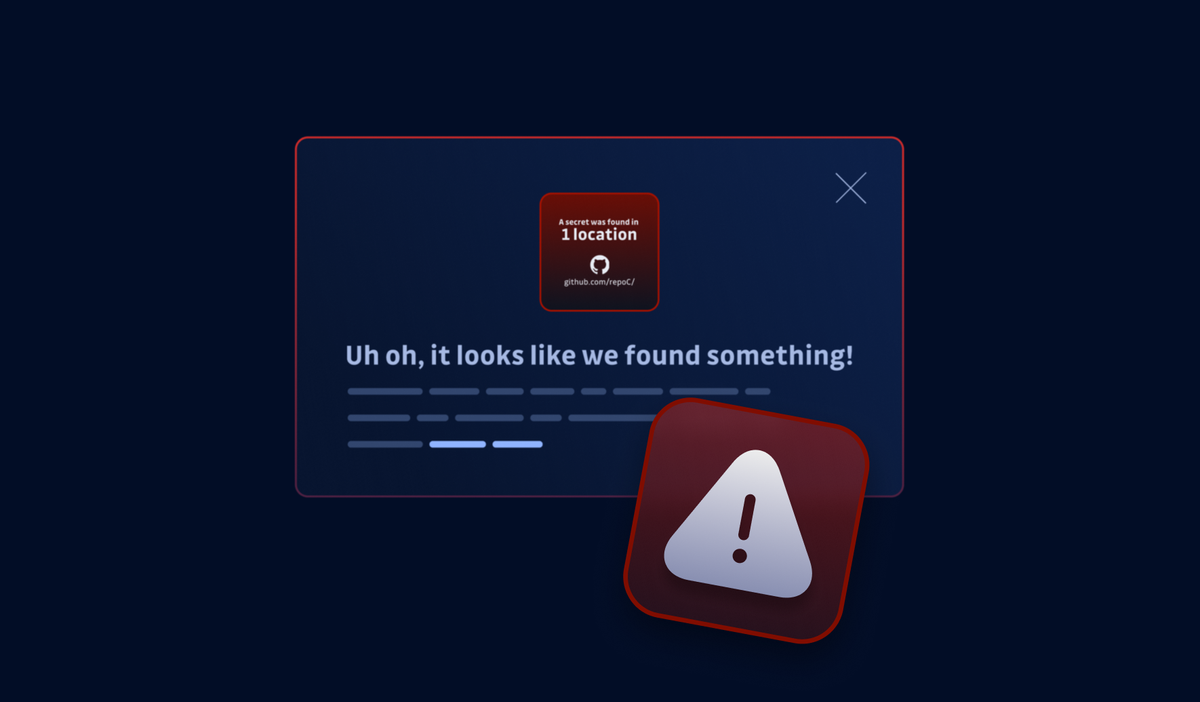

GitGuardian's pro-bono service alerts repository owners when we discover a leaked secret in their public Git commits. Over the following five days, we perform repeated checks to determine whether those secrets remain valid.

The State of Secrets Sprawl highlighted two concerning trends. First, we found that over 91% of leaks remained valid after 5 days. Second, many repository owners deleted or privatized their repositories after notification but did not revoke the leaked secret. In a random sample of 5,000 repositories where the secret was gone within 5 days, 28.2% of the repositories were still public while 71.8% had been deleted or made private.
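In absolute terms, that sample breaks down roughly as follows. This is simple arithmetic on the percentages quoted above; the variable names are ours, and the counts are approximate because the published percentages are rounded.

```python
SAMPLE_SIZE = 5_000          # repositories in the random sample
SHARE_STILL_PUBLIC = 0.282   # 28.2% were still public
SHARE_HIDDEN = 0.718         # 71.8% were deleted or made private

still_public = round(SAMPLE_SIZE * SHARE_STILL_PUBLIC)
hidden = round(SAMPLE_SIZE * SHARE_HIDDEN)

print(f"still public: ~{still_public}")          # still public: ~1410
print(f"deleted or made private: ~{hidden}")     # deleted or made private: ~3590
```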

While we can only speculate about the prevalence of zombie leaks, there is certainly evidence suggesting they're fairly widespread.

The Bottom Line: Leaked Secrets are High-Risk Vulnerabilities

When discussing secrets, it's crucial to remember that any active hard-coded secret represents a high-risk vulnerability until revoked. While this may seem obvious, it's important to prioritize this type of vulnerability when weighing it against other application risks, such as those in the well-known OWASP Top 10 or the recently introduced OWASP Top 10 Risks for Open Source Software.

What this means is that a leaked secret doesn't stop being a threat when:

- the application where it is embedded stops running,

- the code where it is hard-coded gets overwritten or made private,

- the message where it was shared is deleted,

- the disk where it is stored finally gets encrypted.

Why a high-risk vulnerability? Let's examine the industry standard for ranking the characteristics and severity of software vulnerabilities, the Common Vulnerability Scoring System (CVSS). The highest risk level is defined as follows:

Critical (9.0 to 10.0): These vulnerabilities are usually easy for an attacker to exploit and may result in unauthorized access, data breaches, and system compromise or disruption. As with high-risk vulnerabilities, immediate attention and mitigation are advised. The major difference between critical and high-risk vulnerabilities is that exploitation of a critical-risk vulnerability usually results in root-level compromise of servers or infrastructure devices.

Leaked credentials that remain active fit this definition precisely and should be treated as critical vulnerabilities.
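For reference, here is a small sketch of how a CVSS v3.x base score maps to its qualitative severity rating. The function name `cvss_rating` is our own; the score bands are those of the CVSS v3.x qualitative severity rating scale. The scores in the usage lines are arbitrary examples, not ratings of any particular leak.

```python
def cvss_rating(score: float) -> str:
    """Map a CVSS v3.x base score (0.0 to 10.0) to its qualitative rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS base scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"       # 0.1 to 3.9
    if score < 7.0:
        return "Medium"    # 4.0 to 6.9
    if score < 9.0:
        return "High"      # 7.0 to 8.9
    return "Critical"      # 9.0 to 10.0


print(cvss_rating(9.1))  # Critical
print(cvss_rating(7.5))  # High
```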

How to Stop Secrets from Becoming Zombies

Revoke them. It's easy to say, but not so easy to do. But it's got to be done. Here's a high-level overview of the process in four steps.

- Get your secrets management sorted. This could be as simple as using a .env file and removing it from version control, or referring to an external secrets manager like CyberArk, AWS Secrets Manager, or HashiCorp Vault.

- Generate new secrets to replace the leaked ones. Test your refactored code thoroughly to make sure everything works properly with the new secrets and secrets management tools.

- Push the new code to production, taking the old secret(s) out of use.

- Revoke the leaked secret and remove all its associated permissions.
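The ordering of these steps matters: the replacement must be in use before the old secret is revoked, and the revocation must actually happen. Here is a minimal sketch of that sequence, assuming a toy in-memory store. `ToySecretStore` and `rotate` are hypothetical names for illustration and do not correspond to the API of any real secrets manager.

```python
import secrets


class ToySecretStore:
    """Hypothetical in-memory stand-in for a real secrets manager."""

    def __init__(self) -> None:
        self._active: set[str] = set()

    def issue(self) -> str:
        """Create a new random secret and mark it as active."""
        value = secrets.token_urlsafe(32)
        self._active.add(value)
        return value

    def revoke(self, value: str) -> None:
        """Remove all validity from a secret; revoking twice is harmless."""
        self._active.discard(value)

    def is_valid(self, value: str) -> bool:
        return value in self._active


def rotate(store: ToySecretStore, config: dict[str, str], name: str) -> str:
    """Swap config[name] for a fresh secret, then revoke the old one."""
    leaked = config[name]
    replacement = store.issue()      # generate a replacement secret
    config[name] = replacement       # switch the application over to it
    if not store.is_valid(config[name]):
        raise RuntimeError("replacement secret is not usable yet")
    store.revoke(leaked)             # only now revoke the leaked secret
    return replacement


store = ToySecretStore()
config = {"API_TOKEN": store.issue()}
leaked = config["API_TOKEN"]

rotate(store, config, "API_TOKEN")
print(store.is_valid(leaked))               # False: the leaked copy is now harmless
print(store.is_valid(config["API_TOKEN"]))  # True: the application keeps working
```

Note that nothing in this sequence depends on deleting the repository or rewriting history: once `revoke` has run, every copy of the leaked value is worthless wherever it ended up.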

For more detailed guidelines on the remediation procedure, take a look at this handy cheat sheet:

If you need further information on remediating some of the most commonly leaked secrets, GitGuardian offers guides for a dozen of the most common secrets, ranging from AWS keys to SMTP credentials. And if there's one thing we cannot stress enough, it's to implement practices and procedures to discover and remediate leaked secrets before they become a lurking danger to your company and your customers.

Now that you know your zombies, better get hunting before they come back to haunt you!