- Covers best practices for managing secrets, pod auto-restart, and version propagation in Kubernetes.

- Explains how to eliminate manual CI scripts, reduce risk of secrets exposure, and ensure compliance by making Git the single source of truth.

- Essential reading for security, DevOps, and platform teams seeking robust, scalable deployment pipelines.

As Kubernetes (K8s) becomes a mainstream choice for containerized workloads, handling deployments in K8s becomes increasingly important. There are many ways to deploy applications in K8s, such as defining Deployments and other resources in YAML files, using Kustomize for template-free customization, or leveraging Helm charts as a package manager for Kubernetes.

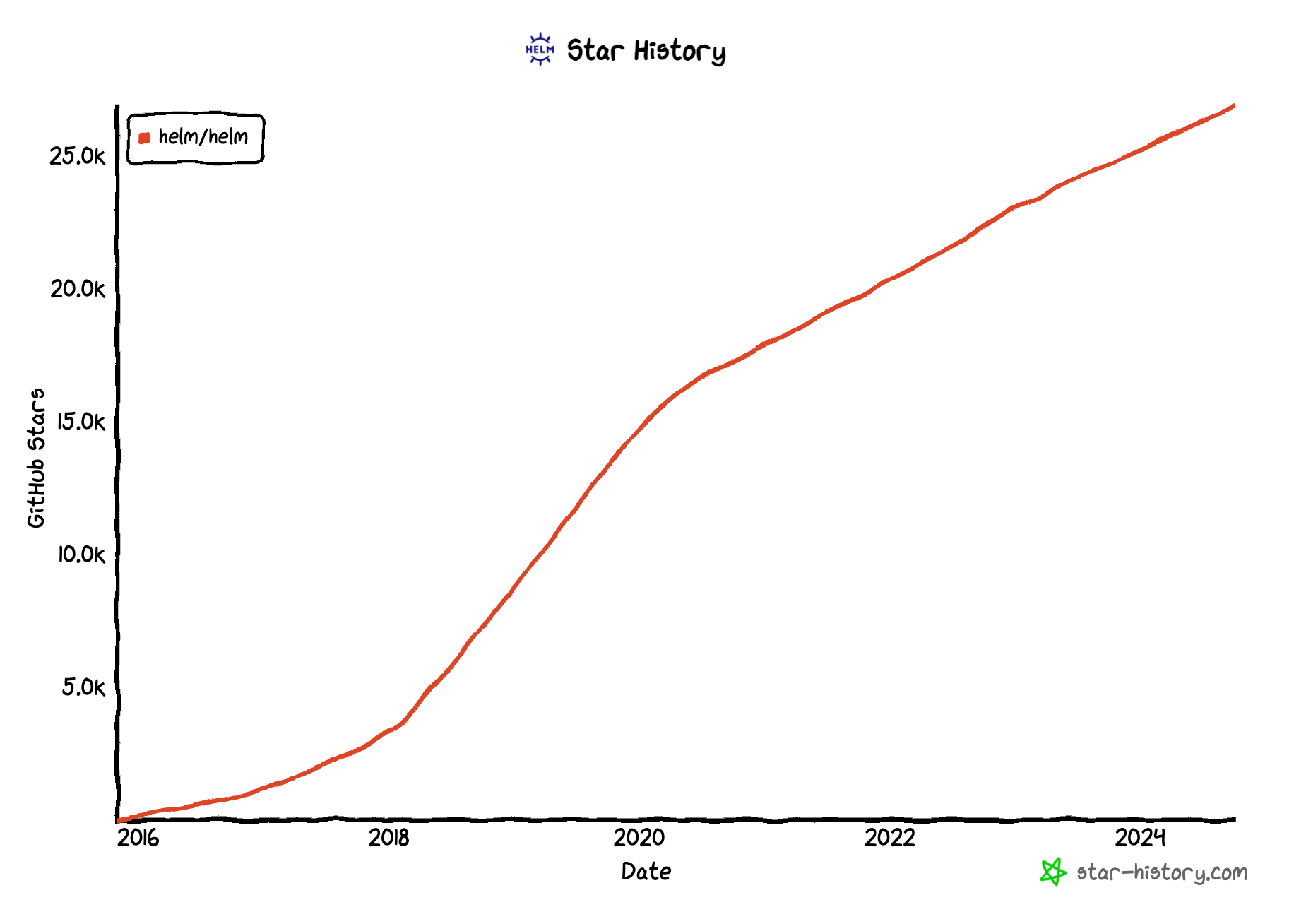

Helm is one of the most popular choices due to its flexible templating, simple package format, and user-friendly CLI. However, in a modern, automated software development lifecycle (SDLC) that embraces DevOps and DevSecOps principles, manual Helm CLI commands are insufficient. Automation is essential.

This article examines a secure, production-ready integration between Helm and continuous deployment (CD) tools, focusing on automation, secrets management, pod auto-restart, and version propagation in Kubernetes environments.

1 - Using Helm with Continuous Integration (CI): A Real-World Example

In 2022/2023, I worked in an SRE team that managed dozens of microservices, using GitHub Actions for CI/CD and Helm for deployments. Although the tooling was robust, the deployment process was less so. Key challenges included:

- The CI workflow needed access to the K8s clusters where the deployments would happen. To solve this, custom runners pre-configured with access to K8s were used specifically for deployment-related jobs.

- The CI workflow was essentially a script running Helm commands, except it wasn’t a script but a workflow defined in YAML.

- This means the deploy logic was baked into the CI workflows, which triggered Helm commands with command-line parameters whose values (like secrets and versions) were fetched by other steps.

- To fetch secrets securely, a custom script was required to read from a secret manager before deployment and set the values on the fly.

- To avoid copy-pasting the workflow into multiple microservices, a central workflow was defined with parameters so that each microservice’s workflow could dispatch it (if you are familiar with GitHub Actions, you probably know what I am talking about, and yes, you are right: workflow_dispatch).

You might not be using GitHub Actions in your team, but the above setup probably looks familiar to almost everybody. No matter which CI tool you use (be it Jenkins, GitHub Actions, or whatever takes your fancy), the problems that need to be solved are essentially the same: handle access to clusters, run custom Helm commands with values to deploy multiple microservices, and handle secrets and versions securely and effectively. All of this must be done in a human-readable, do-not-repeat-yourself, maintainable way.

Next, let’s look at an alternative way that is much more maintainable.

2 - A Very Short Introduction to GitOps

GitHub Actions, like many other CI tools, excels at interacting with code repositories and running tests (most likely in an ephemeral environment). However, even great CI tools aren’t designed with Helm deployments in mind. To deploy apps with Helm, the workflow needs to access production K8s clusters, fetch secrets, configurations, and versions, and run customized deployment commands, none of which is a CI tool’s strong suit.

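To make deployments with Helm great again, we need the right tool for the job, and GitOps comes to the rescue. In GitOps, the desired state of the system is declared in a Git repository, and an agent running in the cluster continuously reconciles the actual state against it.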

3 - Argo CD: GitOps for K8s

One of the most popular GitOps tools is Argo CD, a declarative, GitOps continuous delivery tool for Kubernetes. With Argo CD, you define an application in YAML, specifying the code repository and Helm chart. Argo CD then takes over, handling deployment and lifecycle management automatically.

3.1 Prepare a K8s Cluster

To demonstrate Argo CD, create a test environment. For local development, minikube is a convenient choice. Alternatively, use eksctl to create an AWS EKS cluster.

3.2 Install Argo CD

Install Argo CD in your cluster:

kubectl create namespace argocd

kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

Install the Argo CD CLI for your OS. Retrieve the admin’s initial password and port-forward the Argo CD server to localhost:8080 for access.

argocd login localhost:8080

3.3 The Sample App

For demonstration, use a simple hello world app (source code here, Docker images here, Helm chart here). The Chart.yaml sets appVersion to the image tag to deploy. To update versions, commit changes to this field—Git remains the single source of truth.
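As a rough illustration of the appVersion convention described above, a Chart.yaml might look like the sketch below. The chart name, description, and version numbers are hypothetical placeholders, not values taken from the sample repository.

```yaml
# Hypothetical Chart.yaml; name, description, and versions are placeholders.
apiVersion: v2
name: hello-world
description: A simple hello world app
type: application
# Version of the chart itself.
version: 0.1.0
# Version of the application; here it doubles as the image tag to deploy.
appVersion: "1.0.0"
```

Bumping appVersion in a commit is then the only action needed to roll out a new image tag.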

3.4 Deploy the Sample App

Create an Argo CD application by defining it in YAML and applying it with kubectl. Argo CD will synchronize with the Git repository and deploy the application to the specified cluster and namespace.
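A minimal sketch of such an Application manifest is shown below. The repository URL, chart path, names, and namespaces are assumptions made for illustration; substitute the values of your own repository and cluster.

```yaml
# Hypothetical Argo CD Application; repoURL, path, and names are placeholders.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: hello-world
  namespace: argocd            # namespace where Argo CD is installed
spec:
  project: default
  source:
    repoURL: https://github.com/example-org/hello-world.git
    targetRevision: main       # branch, tag, or commit to track
    path: helm/hello-world     # directory containing Chart.yaml
    helm:
      releaseName: hello-world
  destination:
    server: https://kubernetes.default.svc   # the cluster Argo CD runs in
    namespace: default
  syncPolicy:
    automated:
      prune: true              # delete resources that were removed from Git
      selfHeal: true           # revert manual changes made in the cluster
    syncOptions:
      - CreateNamespace=true
```

Saved as, say, application.yaml, it can be applied with kubectl apply -f application.yaml. The automated sync policy is optional; without it, syncs are triggered manually from the UI or CLI.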

3.5 Sanity Check

Verify deployment progress in the Argo CD UI or port-forward the deployed app and access it locally. Under the hood, Argo CD uses the Git repository as the single source of truth, finds the Helm charts, and synchronizes them to the cluster. This is the essence of GitOps.

4 - Handle Helm Secrets Securely with GitOps/CD

With GitOps, deploying an app requires only a declarative YAML file—no CI workflow. But how do you handle secrets securely?

In the CI-based approach, secrets management is complex:

- Secrets cannot be part of the Helm chart.

- The CI workflow needs access to a secret manager.

- Secrets must be injected into Helm charts via command-line flags, which is error-prone and hard to maintain.

With GitOps, these issues are resolved:

- The GitOps tool runs in the cluster and can securely access a secret manager using the platform’s identity.

- External Secrets can be referenced in the Helm chart, pointing to secret paths without exposing sensitive data. The external secret is deployed automatically.

- No scripts or pipeline code are needed—Git remains the single source of truth.
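The following sketch shows what such a declarative reference could look like with the External Secrets Operator and AWS Secrets Manager. It assumes the operator is installed and serves the v1beta1 API (newer releases may use a different API version), and that a service account with an IAM role already exists. All names, the region, and the secret path are hypothetical.

```yaml
# Assumption: External Secrets Operator is installed and serves the v1beta1 API.
# Store: tells the operator how to reach the secret manager.
apiVersion: external-secrets.io/v1beta1
kind: SecretStore
metadata:
  name: aws-secrets-manager
  namespace: default
spec:
  provider:
    aws:
      service: SecretsManager
      region: eu-central-1              # placeholder region
      auth:
        jwt:
          serviceAccountRef:
            name: external-secrets-sa   # hypothetical service account bound to an IAM role
---
# Reference: points at a secret path; no sensitive value is stored in Git.
apiVersion: external-secrets.io/v1beta1
kind: ExternalSecret
metadata:
  name: hello-world
  namespace: default
spec:
  refreshInterval: 1h
  secretStoreRef:
    name: aws-secrets-manager
    kind: SecretStore
  target:
    name: hello-world-secrets           # Kubernetes Secret the operator creates
  data:
    - secretKey: DB_PASSWORD            # key inside the Kubernetes Secret
      remoteRef:
        key: prod/hello-world/db        # hypothetical path in the secret manager
        property: password
```

Both resources can live in the chart's templates directory, so they are deployed together with the app.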

In summary:

- The External Secrets Operator retrieves secrets from secret managers like AWS Secrets Manager, HashiCorp Vault, Google Cloud Secret Manager, and Azure Key Vault, and injects them into Kubernetes as Secrets.

- External secrets are defined declaratively in YAML as part of the Helm chart.

- The apps/Deployments use K8s Secrets as environment variables, following the 12-factor app methodology.

- GitOps automates the rest.
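To illustrate the third point, here is a sketch of a Deployment that reads one key of a Kubernetes Secret as an environment variable. It assumes a Secret named hello-world-secrets with a DB_PASSWORD key already exists (for example, created by an ExternalSecret); the image and labels are placeholders.

```yaml
# Hypothetical Deployment; image, labels, and Secret name are placeholders.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-world
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: hello-world
  template:
    metadata:
      labels:
        app.kubernetes.io/name: hello-world
    spec:
      containers:
        - name: hello-world
          image: ghcr.io/example-org/hello-world:1.0.0
          env:
            # The app reads its configuration from the environment (12-factor style).
            - name: DB_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: hello-world-secrets
                  key: DB_PASSWORD
```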

For a deep dive, see How to Handle Secrets in Helm and the section on External Secrets Operator. For production integrations, see Read Secrets from a Secret Manager.

5 - Handle Pod Auto-Restart with GitOps/CD

In production, automatically restarting pods when secrets or configs change is essential. While adding an annotation with a timestamp or checksum is a common hack, it’s not ideal.

A better solution is Reloader, a Kubernetes controller that watches ConfigMaps and Secrets and triggers rolling upgrades on pods. Add the reloader.stakater.com/auto: "true" annotation to your Deployment’s metadata in the Helm chart template. GitOps will handle the rest.
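A sketch of where the annotation goes is shown below, assuming Reloader is already installed in the cluster. The resource names and image are hypothetical, and in a real chart they would typically be templated.

```yaml
# Hypothetical Deployment; assumes the Reloader controller is running in the cluster.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-world
  annotations:
    # Lets Reloader watch every ConfigMap and Secret this Deployment references.
    reloader.stakater.com/auto: "true"
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: hello-world
  template:
    metadata:
      labels:
        app.kubernetes.io/name: hello-world
    spec:
      containers:
        - name: hello-world
          image: ghcr.io/example-org/hello-world:1.0.0
          envFrom:
            - secretRef:
                name: hello-world-secrets   # a change here triggers a rolling restart
```

Note that the annotation belongs on the Deployment's own metadata, not on the pod template.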

For more, see How to Automatically Restart Pods When Secrets Are Updated and the official Reloader documentation.

6 - Handle Version Propagation with GitOps/CD

Real-world setups typically involve multiple environments (test, staging, production), each potentially running a different application version. Using a single appVersion in Chart.yaml means all environments deploy the same version, which is not ideal for staged rollouts.

One workaround is to use different branches for each environment, but this is operationally complex. A better approach is to use multiple Argo CD applications, each with version constraints for its environment.

6.1 A Very Short Introduction to Argo CD Image Updater

Argo CD Image Updater integrates with Argo CD to automate container image updates. It monitors image tags, applies user-defined constraints, and commits approved changes back to the repository, keeping Git as the single source of truth. Argo CD then synchronizes the application with the new version.

Committing changes back to Git requires push access to the repository. For production, grant Argo CD write access to the repository using a GitHub App or SSH key.

6.2 Install Argo CD Image Updater

Install Argo CD Image Updater in the same namespace as Argo CD for seamless integration:

kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj-labs/argocd-image-updater/stable/manifests/install.yaml

6.3 Version Constraints and Version Propagation

Define separate Argo CD applications for each environment, each with its own image-list annotation specifying version constraints. When a new image version matching the constraint is available, Argo CD Image Updater commits the change to Git, and Argo CD deploys the update. This enables controlled, auditable version propagation across environments.
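The sketch below shows two such applications using the annotation-based configuration of Argo CD Image Updater. It rests on several assumptions: the image name, repository URL, chart path, and namespaces are placeholders, and the chart is assumed to expose image.repository and image.tag values. Staging follows every 1.x release, while production is limited to 1.2.x patch releases.

```yaml
# Hypothetical staging application: accepts any 1.x release.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: hello-world-staging
  namespace: argocd
  annotations:
    argocd-image-updater.argoproj.io/image-list: "hello=ghcr.io/example-org/hello-world:1.x"
    argocd-image-updater.argoproj.io/hello.update-strategy: semver
    # Assumed Helm values that carry the image name and tag in the chart.
    argocd-image-updater.argoproj.io/hello.helm.image-name: image.repository
    argocd-image-updater.argoproj.io/hello.helm.image-tag: image.tag
    # Commit the new version to Git instead of patching the live Application.
    argocd-image-updater.argoproj.io/write-back-method: git
spec:
  project: default
  source:
    repoURL: https://github.com/example-org/hello-world.git
    targetRevision: main
    path: helm/hello-world
  destination:
    server: https://kubernetes.default.svc
    namespace: staging
---
# Hypothetical production application: only patch releases of 1.2.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: hello-world-production
  namespace: argocd
  annotations:
    argocd-image-updater.argoproj.io/image-list: "hello=ghcr.io/example-org/hello-world:~1.2"
    argocd-image-updater.argoproj.io/hello.update-strategy: semver
    argocd-image-updater.argoproj.io/hello.helm.image-name: image.repository
    argocd-image-updater.argoproj.io/hello.helm.image-tag: image.tag
    argocd-image-updater.argoproj.io/write-back-method: git
spec:
  project: default
  source:
    repoURL: https://github.com/example-org/hello-world.git
    targetRevision: main
    path: helm/hello-world
  destination:
    server: https://kubernetes.default.svc
    namespace: production
```

Promoting a release line to production then amounts to widening the production constraint in a reviewed commit.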

Helm Deployment Security Best Practices

When implementing Helm deployment workflows, securing the deployment pipeline is critical to prevent supply chain attacks and unauthorized access to production environments. The deployment process involves multiple touchpoints where secrets, credentials, and sensitive configuration data flow through the system, creating potential attack vectors that must be addressed.

Build platform hardening is essential for Helm deployment security. Recent supply chain attacks have demonstrated that compromised build systems can inject malicious code into deployment artifacts. For Helm deployments, this means implementing endpoint protection on systems that build and package charts, restricting administrator access to chart repositories, and maintaining strict file system permissions on deployment infrastructure.

Securing deployment systems also requires zero-trust networking principles, where human verification through biometrics or hardware keys is required to access production Kubernetes clusters. This approach ensures that even if a developer's workstation is compromised, the Helm deployment process remains protected through isolated, verified access channels.

Troubleshooting Common Helm Deployment Issues

Helm deployment failures often stem from configuration mismatches, resource constraints, or networking issues that can disrupt continuous delivery workflows. Understanding how to diagnose and resolve these problems is essential for maintaining reliable deployment pipelines.

The most frequent issues include failed pod startup due to image pull errors, insufficient cluster resources, or misconfigured service accounts. When troubleshooting Helm deployment problems, start by examining the deployment status using helm status <release-name> and checking pod logs with kubectl logs. Resource conflicts often manifest as pending pods, which can be diagnosed using kubectl describe pod to identify scheduling constraints or resource limitations.

Network-related deployment failures typically involve service discovery issues or ingress misconfigurations. Use kubectl get events --sort-by=.metadata.creationTimestamp to view cluster events chronologically, helping identify the root cause of deployment failures. For GitOps workflows with Argo CD, monitor the application sync status and review the controller logs to understand why automated deployments might be failing or stuck in pending states.

Advanced Helm Deployment Strategies

Beyond basic Helm deployment patterns, production environments require sophisticated deployment strategies to minimize downtime and reduce risk during application updates. Blue-green deployment with Helm enables zero-downtime updates by maintaining two identical production environments and switching traffic between them.

Implementing blue-green deployments with Helm on Kubernetes involves creating separate Helm releases for the blue and green environments and using Service selectors to control traffic routing. This approach allows for complete validation of a new deployment before switching production traffic, and it provides an immediate rollback mechanism if issues arise.
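The selector-based switch can be sketched as a single Service that sits in front of both releases. The label key slot and all names and ports are hypothetical; the pods of each release are assumed to carry the matching label.

```yaml
# Hypothetical Service fronting two Helm releases labelled slot=blue and slot=green.
apiVersion: v1
kind: Service
metadata:
  name: hello-world
spec:
  selector:
    app.kubernetes.io/name: hello-world
    slot: blue          # change to "green" to switch all traffic to the new release
  ports:
    - port: 80
      targetPort: 8080  # placeholder container port
```

Switching back to blue is the rollback; in a GitOps setup both changes are ordinary commits.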

Canary deployments represent another advanced strategy where new versions are gradually rolled out to a subset of users. With Helm, this can be achieved by deploying multiple releases with different replica counts and using ingress controllers or service mesh technologies to split traffic. Tools like Flagger can automate canary analysis and promotion, integrating seamlessly with Helm charts to provide progressive delivery capabilities that reduce deployment risk while maintaining continuous delivery velocity.
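As an illustration of automated canary analysis, a Flagger Canary resource might look like the sketch below. It assumes Flagger is installed with a mesh provider such as Istio and a metrics backend; the target name, ports, and thresholds are placeholder values, not recommendations. Ingress-based providers need additional fields that are not shown here.

```yaml
# Hypothetical Flagger Canary; assumes Flagger runs with a service mesh provider.
apiVersion: flagger.app/v1beta1
kind: Canary
metadata:
  name: hello-world
  namespace: default
spec:
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: hello-world      # Deployment rendered by the Helm chart
  service:
    port: 80
    targetPort: 8080
  analysis:
    interval: 1m           # how often the canary is evaluated
    threshold: 5           # failed checks before automatic rollback
    maxWeight: 50          # maximum share of traffic sent to the canary
    stepWeight: 10         # traffic increase per successful check
    metrics:
      - name: request-success-rate
        thresholdRange:
          min: 99          # minimum percentage of successful requests
        interval: 1m
```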

Summary

Traditional CI tools are excellent for continuous integration but are not optimized for continuous deployment with Helm. Challenges include cluster access, parameterized Helm commands, secrets management, and version propagation.

Continuous deployment tools with GitOps are purpose-built to address these challenges. By using Git as the single source of truth, all deployment logic, secret references, and versions are defined declaratively, eliminating the need for complex scripts or workflows. While this article focuses on Argo CD, the methodologies apply to other GitOps tools as well.

For a more visual walkthrough of the Helm/GitOps setup, see this detailed blog or watch the video demonstration.

We hope this article helps you advance your organization’s DevSecOps maturity and implement robust, secure, and scalable Helm deployment pipelines.

FAQs

How does integrating Helm with GitOps tools like Argo CD improve deployment security and reliability?

Integrating Helm with GitOps tools such as Argo CD centralizes deployment logic and configuration in version-controlled repositories, reducing manual intervention and human error. This approach enables declarative, auditable, and automated Helm deployment workflows, enforces least-privilege access, and supports secure secret management through native Kubernetes integrations, significantly improving both security posture and operational reliability.

What are best practices for managing secrets in Helm deployments within a GitOps workflow?

Best practices include using external secret operators (e.g., External Secrets Operator) to reference secrets stored in dedicated secret managers like HashiCorp Vault or AWS Secrets Manager. Avoid embedding secrets directly in Helm charts or Git repositories. Leverage Kubernetes RBAC and runtime identities to securely inject secrets, and ensure all secret definitions are declarative and version-controlled.

How can I troubleshoot common Helm deployment failures in a continuous deployment pipeline?

Start by examining deployment status with helm status <release-name> and reviewing pod logs via kubectl logs. Use kubectl describe pod for resource or scheduling issues, and check kubectl get events --sort-by=.metadata.creationTimestamp for cluster-level events. For GitOps workflows, monitor Argo CD application sync status and controller logs to identify root causes of failed or pending deployments.

How does Argo CD Image Updater help with version propagation in multi-environment Helm deployments?

Argo CD Image Updater automates container image updates by monitoring image tags and enforcing version constraints per environment. It commits approved version changes back to the Git repository, ensuring Git remains the single source of truth. This enables controlled, auditable version propagation across test, staging, and production environments in your Helm deployment workflow.

What strategies can be used for zero-downtime Helm deployments in production environments?

Blue-green and canary deployment strategies are recommended for zero-downtime updates. With Helm, maintain separate releases for blue and green environments or use canary releases with traffic splitting via ingress controllers or a service mesh. Tools like Flagger can automate progressive delivery, enabling safe, incremental rollouts and immediate rollback if issues are detected.

How can I ensure that my Helm deployment pipeline is resilient against supply chain attacks?

Harden build platforms by enforcing endpoint protection, restricting admin access to chart repositories, and maintaining strict file system permissions. Implement zero-trust access controls for production clusters, requiring strong authentication (e.g., biometrics or hardware keys). Regularly audit dependencies and automate security scanning for Helm charts and deployment artifacts.

How can I automate pod restarts when secrets or configuration change in a Helm deployment?

Use Kubernetes controllers like Reloader, which monitor ConfigMaps and Secrets for changes and trigger rolling updates on affected pods. Add the reloader.stakater.com/auto: "true" annotation to your Deployment manifests within Helm charts. This ensures pods are automatically restarted when underlying secrets or configs are updated, maintaining application consistency.