The NSA and CISA recently released a guide on Kubernetes hardening. Now that we have explored the Threat Model and had a refresher on K8s components (see the first part), let's dive into the recommendations of the Hardening Guidance.

1 Pod Security

Pods are the smallest deployable Kubernetes unit and consist of one or more containers. Pods are often a cyber actor's initial execution environment upon exploiting a container. For this reason, Pods should be hardened to make exploitation more difficult and to limit the impact of a successful compromise.

1.1 DO NOT Run Containers as Root User!

By default, many container services run as the privileged root user.

Every container is just a running process. Read more here if you want to know more detail about it.

A containerized process, just as any other Linux process, uses system calls and needs permissions. And that is why all the traditional Linux stuff like system calls, namespaces, control groups, permissions, capabilities are all related to containers.

If the process has root privileges and gets exploited, you are basically handing root access to the attacker.

Two measures limit the impact of a container compromise: using non-root containers (configured when the image is built) or using a rootless container engine. Some container engines, for example podman, run in an unprivileged context rather than relying on a daemon running as root.
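Besides building the image for a non-root user, the Pod spec itself can refuse to start a container as root. Here is a minimal sketch that builds such a Pod manifest as a Python dict and prints it as JSON, which `kubectl apply -f` accepts just like YAML. The Pod name, image, and UID are hypothetical placeholders.

```python
import json


def non_root_pod(name: str, image: str, uid: int = 10001) -> dict:
    """Build a Pod manifest whose containers must run as a non-root user."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Pod-level settings apply to every container in the Pod.
            "securityContext": {
                "runAsNonRoot": True,  # kubelet refuses to start a UID 0 process
                "runAsUser": uid,      # hypothetical unprivileged UID
                "runAsGroup": uid,
            },
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Block setuid binaries and similar paths back to root.
                    "securityContext": {"allowPrivilegeEscalation": False},
                }
            ],
        },
    }


# Hypothetical name and image, for illustration only.
pod = non_root_pod("demo-app", "registry.example.com/demo-app:1.0")
print(json.dumps(pod, indent=2))
```

Saving the output to a file and running `kubectl apply -f` on it would create the Pod; if the image insists on running as root, the container fails to start instead of silently running privileged.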

1.2 Immutable File System Whenever Possible

By default, containers are permitted mostly unrestricted execution within their own context.

You probably have some experience running kubectl exec to start a shell and get "into" the container and debug stuff, like creating some files, running some commands, and downloading things.

The thing is, a hacker who has gained access to a container can also create files, download scripts, and modify the application within the container, just like you did.

What k8s can do here is to lock down a container's file system, thereby preventing many activities.

A read-only root filesystem helps to enforce an immutable infrastructure strategy as well. The container should only write on mounted volumes that can persist, even if the container exits.
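As a rough illustration, the sketch below builds a Pod manifest with a read-only root filesystem and a single writable mount. The names, image, and mount path are hypothetical. Note that it uses an `emptyDir` volume, which is only scratch space tied to the Pod's lifetime; for data that must outlive the Pod you would mount a persistent volume claim instead.

```python
import json


def read_only_pod(name: str, image: str, writable_path: str = "/tmp") -> dict:
    """Build a Pod manifest with an immutable root filesystem."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Any write outside the mounted volume fails.
                    "securityContext": {"readOnlyRootFilesystem": True},
                    "volumeMounts": [
                        {"name": "scratch", "mountPath": writable_path}
                    ],
                }
            ],
            # Scratch space only; it disappears when the Pod is deleted.
            "volumes": [{"name": "scratch", "emptyDir": {}}],
        },
    }


# Hypothetical name and image, for illustration only.
ro_pod = read_only_pod("demo-app", "registry.example.com/demo-app:1.0")
print(json.dumps(ro_pod, indent=2))
```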

1.3 Image Scanning

When we build images, we either create them from scratch (not very often) or build them on top of an existing image pulled from a repository (the FROM xxx instruction at the top of a Dockerfile).

On the one hand, we should only use trusted repositories to build containers; on the other, image scanning is the key to the security of the containers that will be deployed from the image.

There are quite a few image scanning tools out there (for example, trivy), which can identify known vulnerabilities, outdated libraries, or misconfigurations, such as insecure ports or unnecessary permissions.

There are also numerous ways to integrate image scanning into your workflow. For one, you can easily make the scan a step in your CI/CD pipelines; for another, you can use K8s admission controllers, a k8s-native feature that intercepts requests to the API server after authentication and authorization but before the object is persisted.
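To give an idea of what a CI gate could look like, here is a small sketch that reads a scanner report and collects the findings that should block a build. It assumes the report follows the shape of Trivy's JSON output (a top-level `Results` list whose entries carry a `Vulnerabilities` list), for example produced with `trivy image --format json --output report.json IMAGE`. The sample report, target name, and vulnerability IDs below are made up for illustration.

```python
import json

# Severities that should fail the pipeline (a policy choice, adjust as needed).
BLOCKING = {"HIGH", "CRITICAL"}


def blocking_findings(report: dict) -> list:
    """Return (target, vulnerability id, severity) for every blocking finding."""
    findings = []
    for result in report.get("Results", []):
        # "Vulnerabilities" may be missing or null when a target is clean.
        for vuln in result.get("Vulnerabilities") or []:
            if vuln.get("Severity") in BLOCKING:
                findings.append(
                    (result.get("Target"), vuln.get("VulnerabilityID"), vuln.get("Severity"))
                )
    return findings


# Made-up sample report; the IDs are placeholders, not real CVEs.
SAMPLE_REPORT = json.loads("""
{
  "Results": [
    {
      "Target": "demo-app:1.0 (alpine)",
      "Vulnerabilities": [
        {"VulnerabilityID": "CVE-0000-0001", "Severity": "LOW"},
        {"VulnerabilityID": "CVE-0000-0002", "Severity": "CRITICAL"}
      ]
    },
    {"Target": "app/requirements.txt", "Vulnerabilities": null}
  ]
}
""")

found = blocking_findings(SAMPLE_REPORT)
for target, vuln_id, severity in found:
    print(f"{severity}: {vuln_id} in {target}")
print("gate:", "FAIL" if found else "PASS")
```

In a real pipeline you would load the report file instead of the embedded sample and exit with a non-zero status when the list is not empty. Trivy can also do this by itself with its `--severity` and `--exit-code` options; the script form is mainly useful when you want custom rules.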

1.4 Pod Security Policy

With K8s PodSecurityPolicy (PSP), we can create rules to safeguard the issues mentioned above. For example, we can:

- Reject containers that execute as the root user or allow elevation to root

- Prevent privileged containers

- Deny container features frequently exploited, such as hostPID, hostIPC, hostNetwork, and allowedHostPaths

- Harden applications against exploitation using security services such as SELinux, AppArmor, and seccomp
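The rules above could translate into a policy along the following lines. This is only a sketch: the policy name is hypothetical, the choice of allowed volume types is an assumption, and it targets the `policy/v1beta1` PodSecurityPolicy API, so it only applies to clusters that still serve that API.

```python
import json

# A sketch of a restrictive PodSecurityPolicy; the name is hypothetical.
restricted_psp = {
    "apiVersion": "policy/v1beta1",
    "kind": "PodSecurityPolicy",
    "metadata": {"name": "restricted-example"},
    "spec": {
        "privileged": False,                # no privileged containers
        "allowPrivilegeEscalation": False,  # no elevation to root
        "hostPID": False,                   # no access to host namespaces
        "hostIPC": False,
        "hostNetwork": False,
        "readOnlyRootFilesystem": True,
        "runAsUser": {"rule": "MustRunAsNonRoot"},
        # The remaining strategies are required fields; RunAsAny keeps the
        # example short and is not a recommendation.
        "seLinux": {"rule": "RunAsAny"},
        "supplementalGroups": {"rule": "RunAsAny"},
        "fsGroup": {"rule": "RunAsAny"},
        # hostPath is deliberately absent from the allowed volume types.
        "volumes": ["configMap", "secret", "emptyDir", "persistentVolumeClaim"],
    },
}

print(json.dumps(restricted_psp, indent=2))
```

Keep in mind that a PSP has no effect until the PodSecurityPolicy admission controller is enabled and the policy is authorized for the relevant users or service accounts through RBAC.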

But be careful! PSP has been deprecated since Kubernetes 1.21, released in 2021.

You may ask why. In short, PSP has usability problems that can't be addressed without making breaking changes. It is confusing to use, easy to get wrong, and hard to debug. That's why it is being phased out.

PSP will continue to be fully functional for several more releases before being removed completely, though. In the future, as a replacement, there will be a "PSP Replacement Policy" (temporary name) which covers critical use cases more easily and sustainably.

The replacement policy is designed to be as simple as practically possible while providing enough flexibility to be helpful in production at scale. This means that if you haven't started with PSP, or your usage of PSP is relatively simple, you are fine. If you want to get started with PSP now, keeping it simple will save you time and effort. If you are already making extensive use of numerous PSPs and complex binding rules, you should use the coming year to evaluate other admission controller choices in the ecosystem.

2 Network Security

2.1 Deny Access to Control Plane Nodes / ETCD

The control plane is the core of K8s and gives users the ability to view containers, schedule new Pods, read Secrets, and execute commands in the cluster.

Because of these sensitive capabilities, the control plane should be highly protected.

If you are deploying the cluster yourself, in addition to secure configurations such as TLS encryption, RBAC, and a strong authentication method, network separation can help prevent unauthorized users from accessing the control plane.

If you are using K8s as a service with cloud providers, you can still have some control by using security groups. For example, with AWS EKS, we strongly recommend using a dedicated security group for each control plane (one for each cluster), and not adding rules that are not absolutely necessary to the control plane security group.

etcd stores state information and cluster Secrets. It is a critical control plane component, and gaining write access to etcd could give a cyber attacker root access to the entire cluster.

Etcd should only be accessed through the API server, where the cluster's authentication method and RBAC policies can restrict users. With a managed K8s service, you don't have to worry too much about it. Still, if you are running the cluster yourself, it's good practice to run the etcd datastore on a separate control plane node so that a firewall can limit access to only the API servers.

2.2 Namespace Separation

K8s namespaces are one way to partition cluster resources among multiple individuals, teams, or applications within the same cluster to achieve multi-tenancy.

By default, though, namespaces are not automatically isolated.

However, namespaces do assign a label to a scope, which can be used to limit access. For example, in RBAC rules and network policies, we can use the label to select wanted namespaces.

There are three namespaces by default, and they cannot be deleted:

- kube-system for Kubernetes components

- kube-public for public resources

- default for user resources

Note that user Pods should not be placed in kube-system or kube-public, as these are reserved for cluster services.

2.3 Network Policies

By default, pods and services in different namespaces can still communicate with each other unless additional separation is enforced, such as by a network policy.

Network policies control traffic flow between Pods, namespaces, and external IP addresses. By default, no network policies are applied to Pods or namespaces, resulting in unrestricted ingress and egress traffic within the Pod network.

For example, say you have two namespaces, "teamalpha" and "teambeta," for two separate teams, and team alpha created a service "service-a" in namespace "teamalpha." A pod in namespace "teambeta" can still reach "service-a" simply by using the address "service-a.teamalpha," which is resolved by the internal DNS.

To truly separate the network traffic between namespaces (and between services, pods, etc.), we can use network policies to regulate the traffic flow.
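For the "teamalpha" example, a common starting point is a default-deny policy plus an explicit allowance for traffic inside the namespace. The sketch below builds both as Python dicts and prints them as one JSON list that `kubectl apply -f` can consume. The policy names are hypothetical, and the policies only take effect if the cluster's network plugin enforces NetworkPolicy.

```python
import json


def default_deny(namespace: str) -> dict:
    """Deny all ingress and egress for every Pod in the namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        # An empty podSelector selects every Pod in the namespace.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress", "Egress"]},
    }


def allow_same_namespace(namespace: str) -> dict:
    """Allow ingress only from Pods that live in the same namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-same-namespace", "namespace": namespace},
        "spec": {
            "podSelector": {},
            "policyTypes": ["Ingress"],
            # A podSelector without a namespaceSelector matches Pods in the
            # policy's own namespace only.
            "ingress": [{"from": [{"podSelector": {}}]}],
        },
    }


policies = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [default_deny("teamalpha"), allow_same_namespace("teamalpha")],
}
print(json.dumps(policies, indent=2))
```

With these two policies, a pod in "teambeta" can no longer reach "service-a.teamalpha". Since the first policy also denies egress, the pods in "teamalpha" would additionally need an egress rule for DNS and for any outbound traffic they legitimately require.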

2.4 Secrets Management

Since K8s secrets contain sensitive information like passwords, we need to make sure the secrets are stored safely and encrypted.

If you are using K8s as a service like AWS EKS, chances are all the etcd volumes used by the cluster are already encrypted at the disk level (data-at-rest encryption). If you are deploying your own K8s cluster, you can have the API server encrypt Secrets before writing them to etcd by passing it the --encryption-provider-config argument.
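The file passed to `--encryption-provider-config` is an `EncryptionConfiguration` object. Here is a hedged sketch that generates one with a random 32-byte key for the `aescbc` provider. The key name is hypothetical, the provider choice is just one of the available options, and emitting JSON instead of YAML assumes the API server accepts either form.

```python
import base64
import json
import os


def encryption_config(key_name: str = "key1") -> dict:
    """Build an EncryptionConfiguration that encrypts Secrets with AES-CBC."""
    # The aescbc provider expects a base64-encoded 32-byte key.
    key = base64.b64encode(os.urandom(32)).decode("ascii")
    return {
        "apiVersion": "apiserver.config.k8s.io/v1",
        "kind": "EncryptionConfiguration",
        "resources": [
            {
                "resources": ["secrets"],
                "providers": [
                    # The first provider is used for writing new data.
                    {"aescbc": {"keys": [{"name": key_name, "secret": key}]}},
                    # identity lets the API server still read old,
                    # unencrypted entries.
                    {"identity": {}},
                ],
            }
        ],
    }


config = encryption_config()
print(json.dumps(config, indent=2))
```

The generated file contains the encryption key in clear text, so it deserves the same protection as the Secrets themselves. Existing Secrets are only encrypted once they are rewritten after the API server has been restarted with the new configuration.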

We can go one step further by encrypting K8s secrets with AWS KMS before they are even stored on the volumes on the disk level.

When we create secrets in the first place, it's pretty likely that we use K8s YAML files. Storing secrets in YAML files is not really secure, though: the values are merely base64 encoded, not encrypted. Anyone who gets access to the file can read its content.
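A quick way to convince yourself that base64 offers no protection: the following sketch encodes a made-up password the way it would appear in a Secret manifest, then recovers it with a single standard-library call and no key.

```python
import base64

# A made-up value, as it would be stored under `data:` in a Secret manifest.
plaintext = "s3cr3t-password"
encoded = base64.b64encode(plaintext.encode("utf-8")).decode("ascii")

# Anyone who can read the manifest can reverse the encoding; no key is needed.
decoded = base64.b64decode(encoded).decode("utf-8")

print("stored in YAML:", encoded)
print("recovered     :", decoded)
```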

It's a better practice if we don't store the content of those secrets in files at all, for example, by using kubernetes-external-secrets: it allows you to use external secret management systems, like AWS Secrets Manager or HashiCorp Vault, to add secrets in Kubernetes securely.

3 Authn and Authz

3.1 K8s User Types

Kubernetes clusters have two types of users:

- service accounts

- normal user accounts

3.2 Service Account Authn

Service accounts handle API requests on behalf of Pods.

The authn is typically managed automatically by K8s through the ServiceAccount Admission Controller using bearer tokens.

If you have ever opened a shell in a container and poked around, you probably already know that the bearer token is mounted into the pod as a file in an easy-to-find directory, and once it's leaked, it can be used from outside the cluster.

So access to Pod Secrets should be restricted and granted only on a need-to-know basis (i.e., the principle of least privilege).
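One simple way to reduce exposure is to not mount the token at all in Pods that never talk to the Kubernetes API. A minimal sketch, with a hypothetical Pod name and image:

```python
import json


def pod_without_token(name: str, image: str) -> dict:
    """Build a Pod manifest that does not mount a service account token."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Without this, the token file is mounted into every container.
            "automountServiceAccountToken": False,
            "containers": [{"name": name, "image": image}],
        },
    }


# Hypothetical name and image, for illustration only.
tokenless_pod = pod_without_token("demo-app", "registry.example.com/demo-app:1.0")
print(json.dumps(tokenless_pod, indent=2))
```

The same `automountServiceAccountToken: false` field can also be set on a ServiceAccount object, which changes the default for every Pod that uses that account.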

3.3 User Account Authn

For normal user and admin accounts, Kubernetes provides no automatic authn method. Admins of the cluster must add one themselves to implement authentication and authorization mechanisms.

This isn't really an issue for a more cloud-native setup since you probably are already using K8s as a service, like AWS EKS, which is already integrated with AWS IAM.

But if you choose to create the cluster yourself and own everything, Kubernetes assumes that a cluster-independent service manages user authn. The K8s doc lists several ways to implement authn, including client certificates, bearer tokens, authentication plugins, and other protocols. At least one user authentication method should be implemented if you are running the cluster on your own.

When multiple authentication methods are implemented, the first module to successfully authenticate the request short-circuits the evaluation. Admins must make sure that no weak methods, such as a static password file, are used.

3.4 Anonymous Requests

Requests that are not rejected by the configured authn methods and are not tied to any user or Pod (service account) are treated as anonymous requests.

In a server set up for token authentication with anonymous requests enabled, a request without a token present would also be performed as an anonymous request.

In Kubernetes 1.6 and newer, anonymous requests are enabled by default. When RBAC is enabled, anonymous requests require explicit authorization of the system:anonymous user or system:unauthenticated group. Anonymous requests should be disabled by passing the --anonymous-auth=false option to the API server.
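If you run the control plane yourself, this is easy to check automatically. The helper below is a hypothetical sketch: it takes the argument list of the kube-apiserver process (for example, copied from its static Pod manifest) and reports whether anonymous requests have been explicitly disabled. The sample arguments are made up.

```python
def anonymous_auth_disabled(apiserver_args: list) -> bool:
    """Return True only if --anonymous-auth is explicitly set to false."""
    enabled = True  # anonymous requests are enabled by default
    for arg in apiserver_args:
        if arg.startswith("--anonymous-auth="):
            value = arg.split("=", 1)[1].strip().lower()
            # If the flag is repeated, the last occurrence decides.
            enabled = value not in ("false", "0", "f")
    return not enabled


# Made-up argument lists, for illustration only.
hardened = ["kube-apiserver", "--authorization-mode=Node,RBAC", "--anonymous-auth=false"]
default = ["kube-apiserver", "--authorization-mode=Node,RBAC"]

print("hardened:", anonymous_auth_disabled(hardened))
print("default :", anonymous_auth_disabled(default))
```

Before flipping the flag in a real cluster, check whether anything relies on unauthenticated access, such as load balancer health checks against the API server.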

3.5 RBAC and Principle of Least Privilege

RBAC and the principle of least privilege should always be used to limit the capabilities of admins, users, and service accounts.
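In practice, least privilege usually means namespaced Roles with a short list of verbs rather than cluster-wide admin rights. The following sketch builds a Role that can only read Pods in one namespace and binds it to a single service account. The namespace, role, and account names are hypothetical.

```python
import json

RBAC_API = "rbac.authorization.k8s.io/v1"


def pod_reader_role(namespace: str) -> dict:
    """A Role that can only read Pods in a single namespace."""
    return {
        "apiVersion": RBAC_API,
        "kind": "Role",
        "metadata": {"name": "pod-reader", "namespace": namespace},
        "rules": [
            # "" is the core API group, where Pods live.
            {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]}
        ],
    }


def bind_to_service_account(namespace: str, service_account: str) -> dict:
    """Bind the pod-reader Role to one service account in the same namespace."""
    return {
        "apiVersion": RBAC_API,
        "kind": "RoleBinding",
        "metadata": {"name": f"{service_account}-pod-reader", "namespace": namespace},
        "subjects": [
            {"kind": "ServiceAccount", "name": service_account, "namespace": namespace}
        ],
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "Role",
            "name": "pod-reader",
        },
    }


# Hypothetical namespace and service account names.
rbac = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [
        pod_reader_role("teamalpha"),
        bind_to_service_account("teamalpha", "monitoring"),
    ],
}
print(json.dumps(rbac, indent=2))
```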

3.6 AWS EKS Recommendations

What if you are already using K8s as a service, like AWS EKS, which has probably taken care of the points above for you? Is there still anything to worry about when it comes to K8s authn/authz?

The answer is yes, and there are, in fact, quite a lot of things to get right, and here are a few:

3.6.1 Do Not Use a Service Account Token for Authentication

A service account token is a long-lived, static credential.

If it is compromised, lost, or stolen, an attacker may be able to perform all the actions associated with that token until the service account is deleted.

If you really need to grant access to K8s API from outside the cluster, like from an EC2 instance (for example running your CI/CD pipelines), it's better to use an instance profile and map it to a K8s RBAC role instead.

3.6.2 Employ Least Privileged Access to AWS Resources

An IAM user does not need to be assigned privileges to AWS resources to access the Kubernetes API.

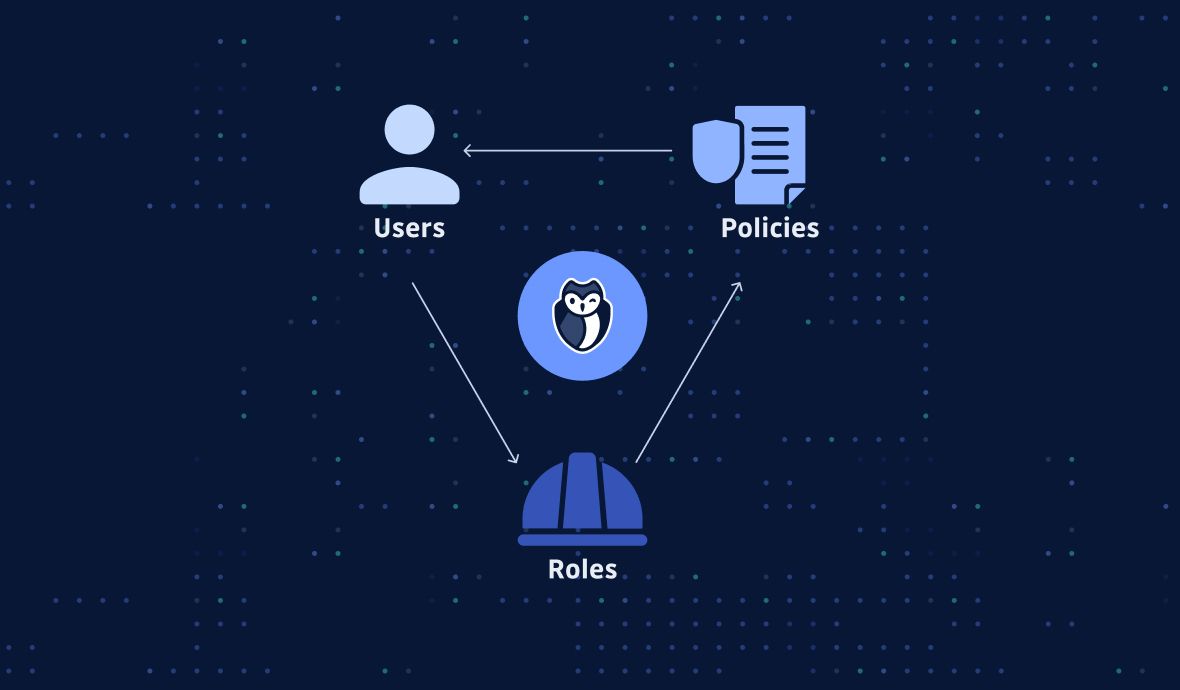

3.6.3 Use IAM Roles

When multiple users need identical access to the cluster, rather than creating an entry for each individual IAM User, allow those users to assume an IAM Role and map that role to a Kubernetes RBAC group.

This will be easier to maintain, especially as the number of users that require access grows.
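On EKS, one way to express such a mapping is the `aws-auth` ConfigMap in the `kube-system` namespace, whose `mapRoles` entry is a YAML string listing IAM role ARNs and the Kubernetes groups they map to. The sketch below assembles such a ConfigMap; the account ID, role name, username, and group name are all placeholders, and the small YAML formatter is a simplification that only handles this one shape.

```python
import json


def map_roles_yaml(entries: list) -> str:
    """Render role mappings as the YAML text expected under data.mapRoles."""
    lines = []
    for entry in entries:
        lines.append(f"- rolearn: {entry['rolearn']}")
        lines.append(f"  username: {entry['username']}")
        lines.append("  groups:")
        for group in entry["groups"]:
            lines.append(f"    - {group}")
    return "\n".join(lines) + "\n"


# Placeholder account ID, role name, username, and group.
mappings = [
    {
        "rolearn": "arn:aws:iam::111122223333:role/eks-developers",
        "username": "developer",
        "groups": ["dev-readers"],
    }
]

aws_auth = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "aws-auth", "namespace": "kube-system"},
    "data": {"mapRoles": map_roles_yaml(mappings)},
}
print(json.dumps(aws_auth, indent=2))
```

Two cautions: the existing `aws-auth` ConfigMap normally already contains the mapping for the worker node role, so new entries should be merged into it rather than replacing it; and the group (here `dev-readers`) grants nothing until a RoleBinding or ClusterRoleBinding references it.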


3.6.4 Private EKS Cluster Endpoint

By default, when you provision an EKS cluster, the cluster API endpoint is set to public, i.e., it can be accessed from the Internet.

But don't worry, because despite being accessible from the Internet, the endpoint is still considered quite secure because it requires all API requests to be authenticated by IAM first and then authorized by Kubernetes RBAC.

That said, there really are companies whose security policy mandates that you restrict access to the API from the Internet or prevents you from routing traffic outside the cluster VPC. In that case, you can make it private.

3.6.5 Create the Cluster with a Dedicated IAM Role

The IAM user or role used to create the cluster is automatically granted system:masters permissions in the cluster's RBAC configuration.

Therefore it is a good idea to create the cluster with a dedicated IAM role and regularly audit who can assume this role.

This role should not be used to perform routine actions on the cluster, and instead, additional users should be granted access to the cluster through other roles.

3.6.6 Regularly Audit Access to the Cluster

Who requires access is likely to change over time. Plan to periodically audit to see who has been granted access and the rights they've been assigned.

You can also use open source tooling like kubectl-who-can or rbac-lookup to examine the roles bound to a particular service account, user, or group.
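If you prefer a script over a plugin, the same question can be answered from the JSON that `kubectl get rolebindings,clusterrolebindings -A -o json` returns. The sketch below searches such a list for one subject; the embedded sample data and all names in it are made up.

```python
import json


def bindings_for_subject(binding_list: dict, kind: str, name: str) -> list:
    """Return (binding kind, namespace, binding name, role) for one subject."""
    matches = []
    for item in binding_list.get("items", []):
        # "subjects" can be missing or null on a binding.
        for subject in item.get("subjects") or []:
            if subject.get("kind") == kind and subject.get("name") == name:
                meta = item["metadata"]
                matches.append(
                    (
                        item["kind"],
                        meta.get("namespace", "<cluster>"),
                        meta["name"],
                        item["roleRef"]["name"],
                    )
                )
    return matches


# Made-up sample shaped like kubectl's JSON list output.
SAMPLE = json.loads("""
{
  "kind": "List",
  "items": [
    {
      "kind": "RoleBinding",
      "metadata": {"name": "ci-pod-reader", "namespace": "teamalpha"},
      "subjects": [{"kind": "ServiceAccount", "name": "ci-deployer", "namespace": "teamalpha"}],
      "roleRef": {"kind": "Role", "name": "pod-reader"}
    },
    {
      "kind": "ClusterRoleBinding",
      "metadata": {"name": "ci-admin"},
      "subjects": [{"kind": "ServiceAccount", "name": "ci-deployer", "namespace": "teamalpha"}],
      "roleRef": {"kind": "ClusterRole", "name": "cluster-admin"}
    },
    {
      "kind": "RoleBinding",
      "metadata": {"name": "devs-view", "namespace": "teambeta"},
      "subjects": [{"kind": "Group", "name": "dev-readers"}],
      "roleRef": {"kind": "ClusterRole", "name": "view"}
    }
  ]
}
""")

granted = bindings_for_subject(SAMPLE, "ServiceAccount", "ci-deployer")
for row in granted:
    print(row)
```

In the made-up data, the `ci-deployer` account turns out to hold `cluster-admin` through a ClusterRoleBinding, which is exactly the kind of finding a periodic audit is meant to surface.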

Furthermore, you can use automation tools to define access as configuration code and apply that code instead of granting access manually. Plus, if the configuration is in code, it can also be audited.

3.6.7 Alternative Approaches

While IAM is the preferred way to authenticate users who need access to an EKS cluster, it is possible to use an OIDC identity provider such as GitHub using an authentication proxy and Kubernetes impersonation.

4 Logging and Auditing

Kubernetes auditing provides a security-relevant, chronological set of records documenting the sequence of actions in a cluster. The cluster audits the activities generated by users, applications that use the Kubernetes API, and the control plane itself.

Audit policy defines rules about what events should be recorded and what data they should include. See here for an example of an audit policy.
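To make this more concrete, here is a sketch of a small audit policy built as a Python dict. The specific rules are an illustrative assumption, not a recommended baseline: Secrets and ConfigMaps are logged at `Metadata` level so their contents never land in the log, Pod changes are logged with full request and response bodies, and everything else falls back to `Metadata`.

```python
import json

audit_policy = {
    "apiVersion": "audit.k8s.io/v1",
    "kind": "Policy",
    # Skip the RequestReceived stage to avoid duplicate entries per request.
    "omitStages": ["RequestReceived"],
    "rules": [
        # Rules are evaluated in order; the first match decides the level.
        {
            "level": "Metadata",  # who/what/when only, no request bodies
            "resources": [{"group": "", "resources": ["secrets", "configmaps"]}],
        },
        {
            "level": "RequestResponse",  # full request and response bodies
            "resources": [{"group": "", "resources": ["pods"]}],
        },
        # Catch-all for everything not matched above.
        {"level": "Metadata"},
    ],
}

print(json.dumps(audit_policy, indent=2))
```

On a self-managed cluster, the policy file is handed to the API server with the `--audit-policy-file` flag; whether your setup accepts the JSON form as-is or needs it converted to YAML is worth verifying.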

To enable the audit log, check out the official document here. If you are using a cloud service, like AWS EKS, it's far easier to do so. Basically, you need to create some CloudWatch log groups and get the setting values right in the EKS cluster. See AWS doc here for more details.

5 Summary

Here's a quick checklist for hardening your k8s clusters:

- Pods (check the tutorial)
  - non-root
  - immutable root filesystem
  - image scanning
  - PSP
- Network (check the tutorial)
  - guard control plane nodes / etcd
  - namespace separation
  - network policies
  - secrets management
- Authn/Authz
  - deny anonymous login
  - secure and strong authentication
  - RBAC with the least privilege principle
  - pay particular attention if you are using K8s as a service regarding authn/authz
- Auditing
  - enabled

In the next article, we start the tutorial series with examples and code on how to secure Pods, putting these guidelines to practice in a real K8s cluster.

Be sure to subscribe and follow!

This article is a guest post. Views and opinions expressed in this publication are solely those of the author and do not reflect the official policy, position, or views of GitGuardian. The content is provided for informational purposes; GitGuardian assumes no responsibility for any errors, omissions, or outcomes resulting from the use of this information. Should you have any enquiry with regard to the content, please contact the author directly.